
Introduction

In no time at all, Hadoop's stable release has reached 2.4.1. The community really is powerful! When will 3.0 be released?

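Today I did some research and tried out a distributed deployment of 2.4.1, including NN HA. A 2.2.0 NN HA deployment was already in place, so the existing ZK and ZKFC setup was reused. Along the way, following the official documentation http://hadoop.apache.org/docs/r2.4.1/hadoop-project-dist/hadoop-common/ClusterSetup.html, I went through and filled in the key configuration properties and grouped related ones together. This makes the files easier to read and modify later, and lets them serve as a template.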

Below is a record of deploying 2.4.1, based on the official documentation and past experience.

——————————————————————————–

Notes

1. This article records only the configuration files, not the rest of the deployment process, which is the same as for 2.2.0. See:

http://www.linuxidc.com/Linux/2014-09/106289.htm

http://www.linuxidc.com/Linux/2014-09/106292.htm

2. All paths, IPs, and hostnames in the configuration must be adjusted to your actual environment.

——————————————————————————–


1. Test environment:

A 4-node cluster with 3 ZK nodes. The hosts file and the role assignment of each node are as follows:

hosts:

192.168.66.91 master

192.168.66.92 slave1

192.168.66.93 slave2

192.168.66.94 slave3

Role assignment:

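| Node | Active NN | Standby NN | DN | JournalNode | Zookeeper | FailoverController |
|---|---|---|---|---|---|---|
| master | V | | | V | V | V |
| slave1 | | V | V | V | V | V |
| slave2 | | | V | V | V | |
| slave3 | | | V | | | |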
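The table places both ZooKeeper and the JournalNodes on three nodes each. Both are majority-quorum services, so it is worth spelling out how many failures three members can absorb. Below is a small Python sketch of that arithmetic, run over the role assignment above. The dict layout, role labels, and function names are illustrative only and are not part of Hadoop:

```python
from __future__ import annotations

# Role assignment from the table above, as a hypothetical data structure.
ROLES = {
    "master": {"ActiveNN", "JournalNode", "ZooKeeper", "ZKFC"},
    "slave1": {"StandbyNN", "DataNode", "JournalNode", "ZooKeeper", "ZKFC"},
    "slave2": {"DataNode", "JournalNode", "ZooKeeper"},
    "slave3": {"DataNode"},
}


def hosts_with(role: str) -> list[str]:
    """Sorted list of hosts that run `role`."""
    return sorted(host for host, roles in ROLES.items() if role in roles)


def quorum(members: int) -> tuple[int, int]:
    """(majority needed, failures tolerated) for a majority-quorum ensemble."""
    majority = members // 2 + 1
    return majority, members - majority


for role in ("ZooKeeper", "JournalNode"):
    hosts = hosts_with(role)
    majority, tolerated = quorum(len(hosts))
    print(f"{role}: {hosts} -> majority {majority}, tolerates {tolerated} failure(s)")
```

With three members each, both services keep working if any one node fails. A fourth member would raise the majority to 3 without tolerating any extra failure (`quorum(4) == (3, 1)`), which is why odd ensemble sizes are the usual choice.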

——————————————————————————–

2. hadoop-env.sh: only the following three settings need to be changed

# The java implementation to use.

export JAVA_HOME=/usr/lib/jvm/jdk1.7.0_07

# The directory where pid files are stored. /tmp by default.

# NOTE: this should be set to a directory that can only be written to by the user that will run the hadoop daemons. Otherwise there is the potential for a symlink attack.

export HADOOP_PID_DIR=/home/yarn/Hadoop/hadoop-2.4.1/hadoop_pid_dir

export HADOOP_SECURE_DN_PID_DIR=/home/yarn/Hadoop/hadoop-2.4.1/hadoop_pid_dir
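The comment above warns that the PID directory should be writable only by the user that runs the daemons. Below is a minimal Python sketch of a pre-start check for this. The function name is hypothetical, and the criteria are one reasonable reading of that warning, not something Hadoop itself enforces:

- the path is not a symlink;
- it is owned by the current user;
- it has no group or other write bit.

```python
from __future__ import annotations

import os
import stat


def pid_dir_problems(path: str) -> list[str]:
    """Reasons why `path` looks unsafe as HADOOP_PID_DIR; an empty list means it looks OK."""
    if os.path.islink(path):
        return [f"{path} is a symlink"]
    if not os.path.isdir(path):
        return [f"{path} does not exist or is not a directory"]
    st = os.stat(path)
    problems = []
    if st.st_uid != os.getuid():
        problems.append(f"{path} is not owned by the current user")
    if st.st_mode & (stat.S_IWGRP | stat.S_IWOTH):
        problems.append(f"{path} is writable by group or others")
    return problems


if __name__ == "__main__":
    # Path from hadoop-env.sh above; run as the user that starts the daemons (yarn).
    for problem in pid_dir_problems("/home/yarn/Hadoop/hadoop-2.4.1/hadoop_pid_dir"):
        print("WARNING:", problem)
```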

——————————————————————————–

3. core-site.xml (complete file)

<?xml version="1.0" encoding="UTF-8"?>

<?xml-stylesheet type="text/xsl" href="configuration.xsl"?>

<!-- Licensed under the Apache License, Version 2.0 (the "License"); you

may not use this file except in compliance with the License. You may obtain

a copy of the License at http://www.apache.org/licenses/LICENSE-2.0 Unless

required by applicable law or agreed to in writing, software distributed

under the License is distributed on an "AS IS" BASIS, WITHOUT WARRANTIES

OR CONDITIONS OF ANY KIND, either express or implied. See the License for

the specific language governing permissions and limitations under the License.

See accompanying LICENSE file. -->

<!-- Put site-specific property overrides in this file. -->

<configuration>

<property>

<name>fs.defaultFS</name>

<value>hdfs://myhadoop</value>

<description>NameNode URI, in the form hdfs://host:port/. If NN HA is enabled,

set this to the logical name of the cluster instead; for details see my post http://www.linuxidc.com/Linux/2014-09/106292.htm

</description>

</property>

<property>

<name>hadoop.tmp.dir</name>

<value>/home/yarn/Hadoop/hadoop-2.4.1/tmp</value>

</property>

<property>

<name>io.file.buffer.size</name>

<value>131072</value>

<description>Size of read/write buffer used in SequenceFiles.

</description>

</property>

<property>

<name>ha.zookeeper.quorum</name>

<value>master:2181,slave1:2181,slave2:2181</value>

<description>Note: once ZK is configured, ZK must be started before the NameNode is formatted or started; otherwise connection errors are reported.

</description>

</property>

</configuration>
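The description of ha.zookeeper.quorum says ZK must already be running before the NameNode is formatted or started. ZooKeeper 3.4.x (3.4.6 is the version used later in this article) answers the four-letter command `ruok` with `imok`. That makes a quick pre-flight check possible, sketched below in Python.

A few caveats:

- The function names and the 2-second timeout are assumptions.
- The check only shows that a server is running, not that it is part of a healthy quorum.
- Newer ZooKeeper releases may require four-letter commands to be whitelisted.

```python
from __future__ import annotations

import socket


def parse_quorum(quorum: str) -> list[tuple[str, int]]:
    """Split 'host1:port1,host2:port2' (the ha.zookeeper.quorum format) into pairs."""
    pairs = []
    for entry in quorum.split(","):
        host, _, port = entry.strip().rpartition(":")
        pairs.append((host, int(port)))
    return pairs


def zk_is_ok(host: str, port: int, timeout: float = 2.0) -> bool:
    """Send the 'ruok' four-letter command; a running server replies 'imok'."""
    try:
        with socket.create_connection((host, port), timeout=timeout) as sock:
            sock.sendall(b"ruok")
            return sock.recv(16) == b"imok"
    except OSError:  # refused, unreachable, unresolvable host, or timeout
        return False
```

Usage, with the quorum string from the file above: `for host, port in parse_quorum("master:2181,slave1:2181,slave2:2181"): print(host, zk_is_ok(host, port))`.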

——————————————————————————–

4. hdfs-site.xml (complete file)

<?xml version="1.0" encoding="UTF-8"?>

<?xml-stylesheet type="text/xsl" href="configuration.xsl"?>

<!-- Licensed under the Apache License, Version 2.0 (the "License"); you

may not use this file except in compliance with the License. You may obtain

a copy of the License at http://www.apache.org/licenses/LICENSE-2.0 Unless

required by applicable law or agreed to in writing, software distributed

under the License is distributed on an "AS IS" BASIS, WITHOUT WARRANTIES

OR CONDITIONS OF ANY KIND, either express or implied. See the License for

the specific language governing permissions and limitations under the License.

See accompanying LICENSE file. -->

<!-- Put site-specific property overrides in this file. -->

<configuration>

<!-- NN HA related configuration **BEGIN** -->

<property>

<name>dfs.nameservices</name>

<value>myhadoop</value>

<description>

Comma-separated list of nameservices.

Must match the logical name used in fs.defaultFS in core-site.xml.

</description>

</property>

<property>

<name>dfs.ha.namenodes.myhadoop</name>

<value>nn1,nn2</value>

<description>

The prefix for a given nameservice, contains a comma-separated

list of namenodes for a given nameservice (eg EXAMPLENAMESERVICE).

</description>

</property>

<property>

<name>dfs.namenode.rpc-address.myhadoop.nn1</name>

<value>master:8020</value>

<description>

RPC address for namenode1 of myhadoop

</description>

</property>

<property>

<name>dfs.namenode.rpc-address.myhadoop.nn2</name>

<value>slave1:8020</value>

<description>

RPC address for namenode2 of myhadoop

</description>

</property>

<property>

<name>dfs.namenode.http-address.myhadoop.nn1</name>

<value>master:50070</value>

<description>

The address and the base port where the dfs namenode1 web ui will listen

on.

</description>

</property>

<property>

<name>dfs.namenode.http-address.myhadoop.nn2</name>

<value>slave1:50070</value>

<description>

The address and the base port where the dfs namenode2 web ui will listen

on.

</description>

</property>

<property>

<name>dfs.namenode.servicerpc-address.myhadoop.nn1</name>

<value>master:53310</value>

</property>

<property>

<name>dfs.namenode.servicerpc-address.myhadoop.nn2</name>

<value>slave1:53310</value>

</property>

<property>

<name>dfs.ha.automatic-failover.enabled</name>

<value>true</value>

<description>

Whether automatic failover is enabled. See the HDFS High

Availability documentation for details on automatic HA

configuration.

</description>

</property>

<property>

<name>dfs.client.failover.proxy.provider.myhadoop</name>

<value>org.apache.hadoop.hdfs.server.namenode.ha.ConfiguredFailoverProxyProvider</value>

<description>Configure the name of the Java class which will be used

by the DFS Client to determine which NameNode is the current Active,

and therefore which NameNode is currently serving client requests.

This class is the client-side access proxy and is what makes HA transparent to clients!

</description>

</property>

<property>

<name>dfs.ha.fencing.methods</name>

<value>sshfence</value>

<description>Method used to fence the previously active NameNode during a failover; sshfence logs in to it over SSH and kills the NameNode process.</description>

</property>

<property>

<name>dfs.ha.fencing.ssh.private-key-files</name>

<value>/home/yarn/.ssh/id_rsa</value>

<description>Location of the SSH private key used by sshfence.</description>

</property>

<property>

<name>dfs.ha.fencing.ssh.connect-timeout</name>

<value>1000</value>

</property>

<property>

<name>dfs.journalnode.edits.dir</name>

<value>/home/yarn/Hadoop/hadoop-2.4.1/hdfs_dir/journal/</value>

</property>

<property>

<name>dfs.namenode.shared.edits.dir</name>

<value>qjournal://master:8485;slave1:8485;slave2:8485/hadoop-journal</value>

<description>A directory on shared storage between the multiple namenodes

in an HA cluster. This directory will be written by the active and read

by the standby in order to keep the namespaces synchronized. This directory

does not need to be listed in dfs.namenode.edits.dir above. It should be

left empty in a non-HA cluster.

</description>

</property>

<!-- NN HA related configuration **END** -->

<!-- NameNode related configuration **BEGIN** -->

<property>

<name>dfs.namenode.name.dir</name>

<value>file:///home/yarn/Hadoop/hadoop-2.4.1/hdfs_dir/name</value>

<description>Path on the local filesystem where the NameNode stores

the namespace and transactions logs persistently. If this is a

comma-delimited list of directories then the name table is replicated

in all of the directories, for redundancy.</description>

</property>

<property>

<name>dfs.blocksize</name>

<value>1048576</value>

<description>

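HDFS block size, in bytes. The value chosen here, 1048576 (1 MB), suits only a small test cluster;

it is also the default minimum allowed block size. For large file systems use a much larger value, e.g. 134217728 (128 MB).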

</description>

</property>

<property>

<name>dfs.namenode.handler.count</name>

<value>10</value>

<description>More NameNode server threads to handle RPCs from large

number of DataNodes.</description>

</property>

<!-- <property> <name>dfs.namenode.hosts</name> <value>master</value> <description>If

necessary, use this to control the list of allowable datanodes.</description>

</property> <property> <name>dfs.namenode.hosts.exclude</name> <value>slave1,slave2,slave3</value>

<description>If necessary, use this to control the list of exclude datanodes.</description>

</property> -->

<!-- NameNode related configuration **END** -->

<!-- DataNode related configuration **BEGIN** -->

<property>

<name>dfs.datanode.data.dir</name>

<value>file:///home/yarn/Hadoop/hadoop-2.4.1/hdfs_dir/data</value>

<description>Comma separated list of paths on the local filesystem of

a DataNode where it should store its blocks. If this is a

comma-delimited list of directories, then data will be stored in all

named directories, typically on different devices.</description>

</property>

<!-- DataNode related configuration **END** -->

</configuration>
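The per-NameNode address keys only take effect when their last component matches an ID listed in dfs.ha.namenodes.&lt;nameservice&gt;. For example, a key such as dfs.namenode.servicerpc-address.myhadoop.n1 does not match the IDs nn1,nn2, so it is silently unused.

Below is a small Python sketch that loads a *-site.xml file with the standard library and flags such mismatches. The helper names are hypothetical, and only the three address prefixes used in this file are checked:

```python
from __future__ import annotations

import xml.etree.ElementTree as ET

ADDRESS_PREFIXES = (
    "dfs.namenode.rpc-address",
    "dfs.namenode.http-address",
    "dfs.namenode.servicerpc-address",
)


def load_conf(xml: str | bytes) -> dict[str, str]:
    """Parse Hadoop-style <configuration><property> XML into a name -> value dict."""
    root = ET.fromstring(xml)
    conf = {}
    for prop in root.iter("property"):  # XML comments (commented-out properties) are skipped
        conf[prop.findtext("name", "").strip()] = prop.findtext("value", "").strip()
    return conf


def ha_key_problems(conf: dict[str, str]) -> list[str]:
    """Report per-NameNode keys whose NameNode ID is not declared for their nameservice."""
    problems = []
    for ns in (n.strip() for n in conf.get("dfs.nameservices", "").split(",")):
        ids = {i.strip() for i in conf.get(f"dfs.ha.namenodes.{ns}", "").split(",") if i.strip()}
        for key in conf:
            for prefix in ADDRESS_PREFIXES:
                head = f"{prefix}.{ns}."
                if key.startswith(head) and key[len(head):] not in ids:
                    problems.append(f"{key}: '{key[len(head):]}' is not one of {sorted(ids)}")
    return problems
```

Usage: `ha_key_problems(load_conf(Path("hdfs-site.xml").read_bytes()))` (with `from pathlib import Path`) returns an empty list when every address key uses a declared NameNode ID.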

——————————————————————————–

5. yarn-site.xml

<?xml version="1.0"?>

<!-- Licensed under the Apache License, Version 2.0 (the "License"); you

may not use this file except in compliance with the License. You may obtain

a copy of the License at http://www.apache.org/licenses/LICENSE-2.0 Unless

required by applicable law or agreed to in writing, software distributed

under the License is distributed on an "AS IS" BASIS, WITHOUT WARRANTIES

OR CONDITIONS OF ANY KIND, either express or implied. See the License for

the specific language governing permissions and limitations under the License.

See accompanying LICENSE file. -->

<configuration>

<!-- ResourceManager and NodeManager related configuration ***BEGIN*** -->

<property>

<name>yarn.acl.enable</name>

<value>false</value>

<description>Enable ACLs? Defaults to false.</description>

</property>

<property>

<name>yarn.admin.acl</name>

<value>*</value>

<description>

ACL to set admins on the cluster. ACLs are of the form: comma-separated-users, a space, then comma-separated-groups.

Defaults to special value of * which means anyone. Special value of just space means no one has access.

</description>

</property>

<property>

<name>yarn.log-aggregation-enable</name>

<value>false</value>

<description>Configuration to enable or disable log aggregation</description>

</property>

<!-- ResourceManager and NodeManager related configuration ***END*** -->

<!-- ResourceManager related configuration ***BEGIN*** -->

<property>

<name>yarn.resourcemanager.hostname</name>

<value>master</value>

<description>The hostname of the RM.</description>

</property>

<property>

<name>yarn.resourcemanager.webapp.https.address</name>

<value>${yarn.resourcemanager.hostname}:8090</value>

<description>The https address of the RM web application.</description>

</property>

<property>

<name>yarn.resourcemanager.address</name>

<value>${yarn.resourcemanager.hostname}:8032</value>

<description>ResourceManager host:port for clients to submit jobs.</description>

</property>

<property>

<name>yarn.resourcemanager.scheduler.address</name>

<value>${yarn.resourcemanager.hostname}:8030</value>

<description>ResourceManager host:port for ApplicationMasters to talk to Scheduler to obtain resources.</description>

</property>

<property>

<name>yarn.resourcemanager.resource-tracker.address</name>

<value>${yarn.resourcemanager.hostname}:8031</value>

<description>ResourceManager host:port for NodeManagers.</description>

</property>

<property>

<name>yarn.resourcemanager.admin.address</name>

<value>${yarn.resourcemanager.hostname}:8033</value>

<description>ResourceManager host:port for administrative commands.</description>

</property>

<property>

<name>yarn.resourcemanager.webapp.address</name>

<value>${yarn.resourcemanager.hostname}:8088</value>

<description>ResourceManager web-ui host:port.</description>

</property>

<property>

<name>yarn.resourcemanager.scheduler.class</name>

<value>org.apache.hadoop.yarn.server.resourcemanager.scheduler.fair.FairScheduler</value>

<description>

ResourceManager Scheduler class.

CapacityScheduler (recommended), FairScheduler (also recommended), or FifoScheduler

</description>

</property>

<property>

<name>yarn.scheduler.minimum-allocation-mb</name>

<value>512</value>

<description>

Minimum limit of memory to allocate to each container request at the Resource Manager.

In MBs

</description>

</property>

<property>

<name>yarn.scheduler.maximum-allocation-mb</name>

<value>2048</value>

<description>

Maximum limit of memory to allocate to each container request at the Resource Manager.

In MBs.

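Note: in this configuration yarn.scheduler.maximum-allocation-mb (2048) is larger than yarn.nodemanager.resource.memory-mb (1024), so a request above 1024 MB (up to 2048 MB) is accepted by the RM but cannot fit on any single NodeManager.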

</description>

</property>

<!--

<property>

<name>yarn.resourcemanager.nodes.include-path</name>

<value></value>

<description>

List of permitted NodeManagers.

If necessary, use this to control the list of allowable NodeManagers.

</description>

</property>

<property>

<name>yarn.resourcemanager.nodes.exclude-path</name>

<value></value>

<description>

List of exclude NodeManagers.

If necessary, use this to control the list of exclude NodeManagers.

</description>

</property>

-->

<!-- ResourceManager related configuration ***END*** -->

<!-- NodeManager related configuration ***BEGIN*** -->

<property>

<name>yarn.nodemanager.resource.memory-mb</name>

<value>1024</value>

<description>

Resource i.e. available physical memory, in MB, for given NodeManager.

Defines total available resources on the NodeManager to be made available to running containers.

</description>

</property>

<property>

<name>yarn.nodemanager.vmem-pmem-ratio</name>

<value>2.1</value>

<description>

Ratio between virtual memory to physical memory when setting memory limits for containers.

Container allocations are expressed in terms of physical memory,

and virtual memory usage is allowed to exceed this allocation by this ratio.

</description>

</property>

<property>

<name>yarn.nodemanager.local-dirs</name>

<value>/home/yarn/Hadoop/hadoop-2.4.1/yarn_dir/local</value>

<description>

Comma-separated list of paths on the local filesystem where intermediate data is written.

Multiple paths help spread disk i/o.

</description>

</property>

<property>

<name>yarn.nodemanager.log-dirs</name>

<value>/home/yarn/Hadoop/hadoop-2.4.1/yarn_dir/log</value>

<description>

Comma-separated list of paths on the local filesystem where logs are written.

Multiple paths help spread disk i/o.

</description>

</property>

<property>

<name>yarn.nodemanager.log.retain-seconds</name>

<value>10800</value>

<description>

Default time (in seconds) to retain log files on the NodeManager.

***Only applicable if log-aggregation is disabled.

</description>

</property>

<property>

<name>yarn.nodemanager.remote-app-log-dir</name>

<value>/yarn/log-aggregation</value>

<description>

HDFS directory where the application logs are moved on application completion.

Need to set appropriate permissions.

***Only applicable if log-aggregation is enabled.

</description>

</property>

<property>

<name>yarn.nodemanager.remote-app-log-dir-suffix</name>

<value>logs</value>

<description>

Suffix appended to the remote log dir.

Logs will be aggregated to ${yarn.nodemanager.remote-app-log-dir}/${user}/${thisParam}.

***Only applicable if log-aggregation is enabled.

</description>

</property>

<property>

<name>yarn.nodemanager.aux-services</name>

<value>mapreduce_shuffle</value>

<description>Shuffle service that needs to be set for Map Reduce applications.</description>

</property>

<property>

<name>yarn.nodemanager.resource.cpu-vcores</name>

<value>1</value>

<description>Number of CPU cores that can be allocated for containers.</description>

</property>

<!-- NodeManager related configuration ***END*** -->

<!-- History Server related configuration ***BEGIN*** -->

<property>

<name>yarn.log-aggregation.retain-seconds</name>

<value>-1</value>

<description>

How long to keep aggregation logs before deleting them.

-1 disables.

Be careful, set this too small and you will spam the name node.

</description>

</property>

<property>

<name>yarn.log-aggregation.retain-check-interval-seconds</name>

<value>-1</value>

<description>

Time between checks for aggregated log retention.

If set to 0 or a negative value then the value is computed as one-tenth of the aggregated log retention time.

Be careful, set this too small and you will spam the name node.

</description>

</property>

<!-- History Server related configuration ***END*** -->

<property>

<name>yarn.scheduler.fair.allocation.file</name>

<value>${yarn.home.dir}/etc/hadoop/fairscheduler.xml</value>

<description>fairscheduler config file path</description>

<!-- Surprisingly, this property cannot be found in the official (ClusterSetup) documentation, but it does work! -->

</property>

</configuration>
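The note on yarn.scheduler.maximum-allocation-mb points out that it exceeds yarn.nodemanager.resource.memory-mb. The consequence can be worked through in a simplified Python model, loosely based on how YARN normalizes memory requests:

1. A request above the maximum is rejected.
2. An accepted request is raised to the minimum, rounded up to a step size, and capped at the maximum.
3. The request can only run if the result fits on one NodeManager.

The function name and the model are assumptions. The step size is also an assumption: for FairScheduler it is typically yarn.scheduler.increment-allocation-mb, which this file does not set, so here it is a plain parameter.

```python
from __future__ import annotations


def place_container(request_mb: int, min_mb: int, max_mb: int,
                    step_mb: int, nm_mb: int) -> tuple[str, int]:
    """Simplified model of one memory request: (outcome, normalized size in MB)."""
    if request_mb > max_mb:
        return "rejected: above maximum-allocation-mb", request_mb
    # Raise to the minimum, round up to a multiple of the step, cap at the maximum.
    rounded = -(-max(request_mb, min_mb) // step_mb) * step_mb
    normalized = min(rounded, max_mb)
    if normalized > nm_mb:
        return "accepted but never fits on a NodeManager", normalized
    return f"fits {nm_mb // normalized} per NodeManager", normalized


# Values from this yarn-site.xml; step_mb=512 is an assumption (see the lead-in).
SETTINGS = dict(min_mb=512, max_mb=2048, step_mb=512, nm_mb=1024)

for request in (300, 1024, 1536, 4096):
    print(request, place_container(request, **SETTINGS))
```

Under these assumptions, a 1536 MB request would be accepted but never placed. That is the default size of the MapReduce ApplicationMaster container (yarn.app.mapreduce.am.resource.mb), so on this cluster that setting would likely need lowering too.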


6. Create fairscheduler.xml

<?xml version="1.0"?>

<allocations>

<!--

<queue name="Hadooptest">

<minResources>1024 mb, 1 vcores</minResources>

<maxResources>2048 mb, 2 vcores</maxResources>

<maxRunningApps>10</maxRunningApps>

<weight>2.0</weight>

<schedulingMode>fair</schedulingMode>

<aclAdministerApps> hadooptest</aclAdministerApps>

<aclSubmitApps> hadooptest</aclSubmitApps>

</queue>

<queue name="hadoopdev">

<minResources>1024 mb, 2 vcores</minResources>

<maxResources>2048 mb, 4 vcores</maxResources>

<maxRunningApps>20</maxRunningApps>

<weight>2.0</weight>

<schedulingMode>fair</schedulingMode>

<aclAdministerApps> hadoopdev</aclAdministerApps>

<aclSubmitApps> hadoopdev</aclSubmitApps>

</queue>

-->

<user name=”yarn”>

<maxRunningApps>30</maxRunningApps>

</user>

</allocations>

——————————————————————————–

7. mapred-site.xml

<?xml version="1.0"?>

<?xml-stylesheet type="text/xsl" href="configuration.xsl"?>

<!-- Licensed under the Apache License, Version 2.0 (the "License"); you

may not use this file except in compliance with the License. You may obtain

a copy of the License at http://www.apache.org/licenses/LICENSE-2.0 Unless

required by applicable law or agreed to in writing, software distributed

under the License is distributed on an "AS IS" BASIS, WITHOUT WARRANTIES

OR CONDITIONS OF ANY KIND, either express or implied. See the License for

the specific language governing permissions and limitations under the License.

See accompanying LICENSE file. -->

<!-- Put site-specific property overrides in this file. -->

<configuration>

<!-- MapReduce Applications related configuration ***BEGIN*** -->

<property>

<name>mapreduce.framework.name</name>

<value>yarn</value>

<description>Execution framework set to Hadoop YARN.</description>

</property>

<property>

<name>mapreduce.map.memory.mb</name>

<value>1024</value>

<description>Larger resource limit for maps.</description>

</property>

<property>

<name>mapreduce.map.java.opts</name>

<value>-Xmx1024M</value>

<description>Larger heap-size for child jvms of maps. Keep it below mapreduce.map.memory.mb to leave room for non-heap JVM memory.</description>

</property>

<property>

<name>mapreduce.reduce.memory.mb</name>

<value>1024</value>

<description>Larger resource limit for reduces.</description>

</property>

<property>

<name>mapreduce.reduce.java.opts</name>

<value>-Xmx1024M</value>

<description>Larger heap-size for child jvms of reduces. Keep it below mapreduce.reduce.memory.mb to leave room for non-heap JVM memory.</description>

</property>

<property>

<name>mapreduce.task.io.sort.mb</name>

<value>1024</value>

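<description>Higher memory-limit while sorting data for efficiency. The sort buffer is allocated inside the map task heap, so it must be well below the -Xmx in mapreduce.map.java.opts.</description>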

</property>

<property>

<name>mapreduce.task.io.sort.factor</name>

<value>10</value>

<description>More streams merged at once while sorting files.</description>

</property>

<property>

<name>mapreduce.reduce.shuffle.parallelcopies</name>

<value>20</value>

<description>Higher number of parallel copies run by reduces to fetch outputs from very large number of maps.</description>

</property>

<!-- MapReduce Applications related configuration ***END*** -->

<!-- MapReduce JobHistory Server related configuration ***BEGIN*** -->

<property>

<name>mapreduce.jobhistory.address</name>

<value>slave1:10020</value>

<description>MapReduce JobHistory Server host:port. Default port is 10020.</description>

</property>

<property>

<name>mapreduce.jobhistory.webapp.address</name>

<value>slave1:19888</value>

<description>MapReduce JobHistory Server Web UI host:port. Default port is 19888.</description>

</property>

<property>

<name>mapreduce.jobhistory.intermediate-done-dir</name>

<value>/home/yarn/Hadoop/hadoop-2.4.1/mr_history/tmp</value>

<description>Directory where history files are written by MapReduce jobs.</description>

</property>

<property>

<name>mapreduce.jobhistory.done-dir</name>

<value>/home/yarn/Hadoop/hadoop-2.4.1/mr_history/done</value>

<description>Directory where history files are managed by the MR JobHistory Server.</description>

</property>

<!-- MapReduce JobHistory Server related configuration ***END*** -->

</configuration>
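The MapReduce memory settings above depend on each other in two ways:

- The task JVM heap (-Xmx in *.java.opts) has to fit inside the container (*.memory.mb), with room left for non-heap memory.
- The map-side sort buffer (mapreduce.task.io.sort.mb) is allocated inside the map task's heap.

Below is a Python sketch that checks both relationships. The function names are hypothetical, and the 0.8 heap-to-container ratio is a common rule of thumb, not a Hadoop setting:

```python
from __future__ import annotations

import re

_UNITS = {"k": 1 / 1024, "m": 1, "g": 1024}  # -Xmx suffix -> multiplier to MB


def xmx_mb(java_opts: str) -> float | None:
    """Extract the -Xmx value from a java.opts string, in MB (None if absent)."""
    match = re.search(r"-Xmx(\d+)([kKmMgG])\b", java_opts)
    if not match:
        return None
    return int(match.group(1)) * _UNITS[match.group(2).lower()]


def memory_warnings(container_mb: int, java_opts: str, sort_mb: int | None = None,
                    max_heap_ratio: float = 0.8) -> list[str]:
    """Flag heaps with too little container headroom and sort buffers that cannot fit."""
    heap = xmx_mb(java_opts)
    if heap is None:
        return ["no -Xmx found in java.opts"]
    warnings = []
    if heap > container_mb * max_heap_ratio:
        warnings.append(f"heap {heap:.0f} MB exceeds {max_heap_ratio:.0%} of the {container_mb} MB container")
    if sort_mb is not None and sort_mb >= heap:
        warnings.append(f"io.sort.mb {sort_mb} MB does not fit in a {heap:.0f} MB heap")
    return warnings


# Values from this mapred-site.xml: (task, container MB, java.opts, io.sort.mb).
for label, container, opts, sort in (("map", 1024, "-Xmx1024M", 1024),
                                     ("reduce", 1024, "-Xmx1024M", None)):
    for warning in memory_warnings(container, opts, sort):
        print(f"{label}: {warning}")
```

For the values in this file, the map task gets both warnings and the reduce task gets the heap warning. One way to clear them is to raise the container size or lower the heap and sort buffer; for example, `memory_warnings(1536, "-Xmx1024M", 512)` returns no warnings.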

——————————————————————————–

8. slaves

slave1

slave2

slave3

——————————————————————————–

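That covers all the configuration files. Edit them on one node, then:

scp the relevant directories to every other machine

on every machine, update the environment variables: add the new HADOOP_HOME and comment out (#) the old HADOOP_HOME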
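The copy step can be scripted. Below is a minimal Python sketch that only builds the scp command lines for the other nodes; it does not run them. The install path comes from the configuration above. Two things are assumptions: copying the whole hadoop-2.4.1 directory, and passwordless SSH for the yarn user.

```python
from __future__ import annotations

import shlex

HADOOP_DIR = "/home/yarn/Hadoop/hadoop-2.4.1"  # install dir used throughout this article
TARGETS = ["slave1", "slave2", "slave3"]        # every node except the one edited (master)


def scp_commands(src: str, hosts: list[str], user: str = "yarn") -> list[list[str]]:
    """Build one recursive scp command per host, copying src into the same parent path."""
    parent = src.rsplit("/", 1)[0]
    return [["scp", "-r", src, f"{user}@{host}:{parent}/"] for host in hosts]


for cmd in scp_commands(HADOOP_DIR, TARGETS):
    print(shlex.join(cmd))  # or execute with subprocess.run(cmd, check=True)
```

Running it before any NameNode or DataNode has been formatted avoids copying local data directories (tmp, hdfs_dir) along with the binaries and configuration.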

——————————————————————————–

9. Start the cluster

(1) Start ZK

Run on every ZK node:

zkServer.sh start

Check the role (leader or follower) of each ZK node:

yarn@master:~$ zkServer.sh status

JMX enabled by default

Using config: /home/yarn/Zookeeper/zookeeper-3.4.6/bin/../conf/zoo.cfg

Mode: follower

yarn@slave1:~$ zkServer.sh status

JMX enabled by default

Using config: /home/yarn/Zookeeper/zookeeper-3.4.6/bin/../conf/zoo.cfg

Mode: follower

yarn@slave2:~$ zkServer.sh status

JMX enabled by default

Using config: /home/yarn/Zookeeper/zookeeper-3.4.6/bin/../conf/zoo.cfg

Mode: leader

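Note:

Which ZK node becomes the leader is not fixed: in the first test run slave2 became the leader, in the second run slave1 did!

At this point the ZK process (QuorumPeerMain) is visible on each ZK node: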

yarn@master:~$ jps

3084 QuorumPeerMain

3212 Jps

(2) Format ZK (first time only)

Run on any ZK node:

hdfs zkfc -formatZK

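(3) Start ZKFC

The ZookeeperFailoverController (ZKFC) monitors NN state and coordinates switching between the active and standby NNs. It therefore only needs to be started on those two NN nodes: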

hadoop-daemon.sh start zkfc

After startup, the ZKFC process is visible:

yarn@master:~$ jps

3084 QuorumPeerMain

3292 Jps

3247 DFSZKFailoverController

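(4) Start the JournalNodes, the shared storage the active and standby NNs use to synchronize metadata

Following the role assignment table, start one on each JN node: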

hadoop-daemon.sh start journalnode

After startup, a JournalNode process is visible on each JN node:

yarn@master:~$ jps

3084 QuorumPeerMain

3358 Jps

3325 JournalNode

3247 DFSZKFailoverController

(5) Format and start the active NN

Format:

hdfs namenode -format

Note: format only the first time the system is started; never format again!

Start the NN on the active NN node:

hadoop-daemon.sh start namenode

After startup, the NameNode process is visible:

yarn@master:~$ jps

3084 QuorumPeerMain

3480 Jps

3325 JournalNode

3411 NameNode

3247 DFSZKFailoverController

(6) On the standby NN, copy the metadata from the active NN

hdfs namenode -bootstrapStandby

The last part of the log from a successful run:

Re-format filesystem in Storage Directory /home/yarn/Hadoop/hdfs2.0/name ? (Y or N) Y

14/06/15 10:09:08 INFO common.Storage: Storage directory /home/yarn/Hadoop/hdfs2.0/name has been successfully formatted.

14/06/15 10:09:09 INFO namenode.TransferFsImage: Opening connection to http://master:50070/getimage?getimage=1&txid=935&storageInfo=-47:564636372:0:CID-d899b10e-10c9-4851-b60d-3e158e322a62

14/06/15 10:09:09 INFO namenode.TransferFsImage: Transfer took 0.11s at 63.64 KB/s

14/06/15 10:09:09 INFO namenode.TransferFsImage: Downloaded file fsimage.ckpt_0000000000000000935 size 7545 bytes.

14/06/15 10:09:09 INFO util.ExitUtil: Exiting with status 0

14/06/15 10:09:09 INFO namenode.NameNode: SHUTDOWN_MSG:

/************************************************************

SHUTDOWN_MSG: Shutting down NameNode at slave1/192.168.66.92

************************************************************/

(7) Start the standby NN

Run on the standby NN:

hadoop-daemon.sh start namenode

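(8) Set the active NN. This step can be skipped: it is only needed when NN failover is managed manually. With automatic failover, ZK has already elected one node as the active NN.

With manual failover, HDFS does not yet know at this point which NN is active, and the web UI shows both NNs in Standby state.

In that case, run the following command on the active NN node to activate it. With dfs.ha.automatic-failover.enabled set to true, as in this setup, haadmin refuses manual transitions unless --forcemanual is given: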

hdfs haadmin -transitionToActive nn1

(9) Start the DataNodes from the active NN

On [nn1], start all DataNodes (hadoop-daemons.sh starts one on every host listed in the slaves file):

hadoop-daemons.sh start datanode

(10) Start YARN

Run on the ResourceManager node (starting YARN really is convenient!):

start-yarn.sh

(11) On slave1, which runs the MR JobHistory Server (MRJS), start it with:

mr-jobhistory-daemon.sh start historyserver
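The start-up order above can be captured as data, which makes it easy to review and reuse. Below is a Python sketch that expands each step into the hosts it runs on. The hosts come from the role table in section 1, plus two nodes taken from the configuration files:

- the RM on master (yarn.resourcemanager.hostname);
- the JobHistory Server on slave1 (mapreduce.jobhistory.address).

The role labels and data layout are hypothetical. The commands are the ones used in this article. The one-time steps (formatting ZK and the NN, bootstrapStandby) and the optional manual activation are left out.

```python
from __future__ import annotations

# Roles from section 1, plus ResourceManager and JobHistoryServer from the config files.
ROLES = {
    "master": {"ActiveNN", "JournalNode", "ZooKeeper", "ZKFC", "ResourceManager"},
    "slave1": {"StandbyNN", "DataNode", "JournalNode", "ZooKeeper", "ZKFC", "JobHistoryServer"},
    "slave2": {"DataNode", "JournalNode", "ZooKeeper"},
    "slave3": {"DataNode"},
}

# (role whose hosts run the command, command), in the order used above.
START_ORDER = [
    ("ZooKeeper", "zkServer.sh start"),
    ("ZKFC", "hadoop-daemon.sh start zkfc"),
    ("JournalNode", "hadoop-daemon.sh start journalnode"),
    ("ActiveNN", "hadoop-daemon.sh start namenode"),
    ("StandbyNN", "hadoop-daemon.sh start namenode"),
    ("ActiveNN", "hadoop-daemons.sh start datanode"),  # starts DNs on hosts in slaves
    ("ResourceManager", "start-yarn.sh"),
    ("JobHistoryServer", "mr-jobhistory-daemon.sh start historyserver"),
]


def plan(roles: dict[str, set[str]], order: list[tuple[str, str]]) -> list[tuple[str, str]]:
    """Expand (role, command) steps into (host, command) pairs, hosts sorted within a step."""
    return [(host, cmd) for role, cmd in order
            for host in sorted(h for h, rs in roles.items() if role in rs)]


for host, cmd in plan(ROLES, START_ORDER):
    print(f"{host}: {cmd}")
```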

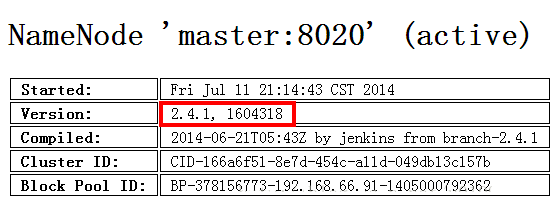

至此,启动完毕,可以看到 2.4.1 的界面了:

——————————————————————————–

10. Stop the cluster

On master, which hosts the RM and the NN, run:

Stop YARN:

stop-yarn.sh

Stop HDFS:

stop-dfs.sh

Stop ZooKeeper (run on every ZK node, since zkServer.sh only stops the local server):

zkServer.sh stop

On slave1, which runs the JobHistoryServer:

Stop the JobHistoryServer:

mr-jobhistory-daemon.sh stop historyserver

更多 Hadoop 相关信息见Hadoop 专题页面 http://www.linuxidc.com/topicnews.aspx?tid=13

引言

转眼间,Hadoop 的 stable 版本已经升级到 2.4.1 了,社区的力量真是强大!3.0 啥时候 release 呢?

今天做了个调研,尝鲜了一下 2.4.1 版本的分布式部署,包括 NN HA(目前已经部署好了 2.2.0 的 NN HA,ZK 和 ZKFC 用现成的),顺便也结合官方文档 http://hadoop.apache.org/docs/r2.4.1/hadoop-project-dist/hadoop-common/ClusterSetup.html 梳理、补全了关键的配置文件属性,将同类属性归类,方便以后阅读修改,及作为模板使用。

下面记录参照官方文档及过去经验部署 2.4.1 的过程。

——————————————————————————–

注意

1. 本文只记录配置文件,不记录其余部署过程,其余过程和 2.2.0 相同,参见

http://www.linuxidc.com/Linux/2014-09/106289.htm

http://www.linuxidc.com/Linux/2014-09/106292.htm

2. 配置中所有的路径、IP、hostname 均需根据实际情况修改。

——————————————————————————–

Ubuntu 13.04 上搭建 Hadoop 环境 http://www.linuxidc.com/Linux/2013-06/86106.htm

Ubuntu 12.10 +Hadoop 1.2.1 版本集群配置 http://www.linuxidc.com/Linux/2013-09/90600.htm

Ubuntu 上搭建 Hadoop 环境(单机模式 + 伪分布模式)http://www.linuxidc.com/Linux/2013-01/77681.htm

Ubuntu 下 Hadoop 环境的配置 http://www.linuxidc.com/Linux/2012-11/74539.htm

单机版搭建 Hadoop 环境图文教程详解 http://www.linuxidc.com/Linux/2012-02/53927.htm

搭建 Hadoop 环境(在 Winodws 环境下用虚拟机虚拟两个 Ubuntu 系统进行搭建)http://www.linuxidc.com/Linux/2011-12/48894.htm

——————————————————————————–

1. 实验环境:

4 节点集群,ZK 节点 3 个,hosts 文件和各节点角色分配如下:

hosts:

192.168.66.91 master

192.168.66.92 slave1

192.168.66.93 slave2

192.168.66.94 slave3

角色分配:

Active NN Standby NN DN JournalNode Zookeeper FailoverController

master V V V V

slave1 V V V V V

slave2 V V V

slave3 V

——————————————————————————–

2.hadoop-env.sh 修改以下三处即可

# The java implementation to use.

export JAVA_HOME=/usr/lib/jvm/jdk1.7.0_07

# The directory where pid files are stored. /tmp by default.

# NOTE: this should be set to a directory that can only be written to by the user that will run the hadoop daemons. Otherwise there is the potential for a symlink attack.

export HADOOP_PID_DIR=/home/yarn/Hadoop/hadoop-2.4.1/hadoop_pid_dir

export HADOOP_SECURE_DN_PID_DIR=/home/yarn/Hadoop/hadoop-2.4.1/hadoop_pid_dir

——————————————————————————–

3.core-site.xml 完整文件

<?xml version=”1.0″ encoding=”UTF-8″?>

<?xml-stylesheet type=”text/xsl” href=”https://www.linuxidc.com/Linux/2014-09/configuration.xsl”?>

<!– Licensed under the Apache License, Version 2.0 (the “License”); you

may not use this file except in compliance with the License. You may obtain

a copy of the License at http://www.apache.org/licenses/LICENSE-2.0 Unless

required by applicable law or agreed to in writing, software distributed

under the License is distributed on an “AS IS” BASIS, WITHOUT WARRANTIES

OR CONDITIONS OF ANY KIND, either express or implied. See the License for

the specific language governing permissions and limitations under the License.

See accompanying LICENSE file. –>

<!– Put site-specific property overrides in this file. –>

<configuration>

<property>

<name>fs.defaultFS</name>

<value>hdfs://myhadoop</value>

<description>NameNode UR,格式是 hdfs://host:port/,如果开启了 NN

HA 特性,则配置集群的逻辑名,具体参见我的 http://www.linuxidc.com/Linux/2014-09/106292.htm

</description>

</property>

<property>

<name>hadoop.tmp.dir</name>

<value>/home/yarn/Hadoop/hadoop-2.4.1/tmp</value>

</property>

<property>

<name>io.file.buffer.size</name>

<value>131072</value>

<description>Size of read/write buffer used in SequenceFiles.

</description>

</property>

<property>

<name>ha.zookeeper.quorum</name>

<value>master:2181,slave1:2181,slave2:2181</value>

<description> 注意,配置了 ZK 以后,在格式化、启动 NameNode 之前必须先启动 ZK,否则会报连接错误

</description>

</property>

</configuration>

——————————————————————————–

4.hdfs-site.xml 完整文件

<?xml version=”1.0″ encoding=”UTF-8″?>

<?xml-stylesheet type=”text/xsl” href=”https://www.linuxidc.com/Linux/2014-09/configuration.xsl”?>

<!– Licensed under the Apache License, Version 2.0 (the “License”); you

may not use this file except in compliance with the License. You may obtain

a copy of the License at http://www.apache.org/licenses/LICENSE-2.0 Unless

required by applicable law or agreed to in writing, software distributed

under the License is distributed on an “AS IS” BASIS, WITHOUT WARRANTIES

OR CONDITIONS OF ANY KIND, either express or implied. See the License for

the specific language governing permissions and limitations under the License.

See accompanying LICENSE file. –>

<!– Put site-specific property overrides in this file. –>

<configuration>

<!– NN HA related configuration **BEGIN** –>

<property>

<name>dfs.nameservices</name>

<value>myhadoop</value>

<description>

Comma-separated list of nameservices.

as same as fs.defaultFS in core-site.xml.

</description>

</property>

<property>

<name>dfs.ha.namenodes.myhadoop</name>

<value>nn1,nn2</value>

<description>

The prefix for a given nameservice, contains a comma-separated

list of namenodes for a given nameservice (eg EXAMPLENAMESERVICE).

</description>

</property>

<property>

<name>dfs.namenode.rpc-address.myhadoop.nn1</name>

<value>master:8020</value>

<description>

RPC address for nomenode1 of hadoop-test

</description>

</property>

<property>

<name>dfs.namenode.rpc-address.myhadoop.nn2</name>

<value>slave1:8020</value>

<description>

RPC address for nomenode2 of hadoop-test

</description>

</property>

<property>

<name>dfs.namenode.http-address.myhadoop.nn1</name>

<value>master:50070</value>

<description>

The address and the base port where the dfs namenode1 web ui will listen

on.

</description>

</property>

<property>

<name>dfs.namenode.http-address.myhadoop.nn2</name>

<value>slave1:50070</value>

<description>

The address and the base port where the dfs namenode2 web ui will listen

on.

</description>

</property>

<property>

<name>dfs.namenode.servicerpc-address.myhadoop.n1</name>

<value>master:53310</value>

</property>

<property>

<name>dfs.namenode.servicerpc-address.myhadoop.n2</name>

<value>slave1:53310</value>

</property>

<property>

<name>dfs.ha.automatic-failover.enabled</name>

<value>true</value>

<description>

Whether automatic failover is enabled. See the HDFS High

Availability documentation for details on automatic HA

configuration.

</description>

</property>

<property>

<name>dfs.client.failover.proxy.provider.myhadoop</name>

<value>org.apache.hadoop.hdfs.server.namenode.ha.ConfiguredFailoverProxyProvider

</value>

<description>Configure the name of the Java class which will be used

by the DFS Client to determine which NameNode is the current Active,

and therefore which NameNode is currently serving client requests.

这个类是 Client 的访问代理,是 HA 特性对于 Client 透明的关键!

</description>

</property>

<property>

<name>dfs.ha.fencing.methods</name>

<value>sshfence</value>

<description>how to communicate in the switch process</description>

</property>

<property>

<name>dfs.ha.fencing.ssh.private-key-files</name>

<value>/home/yarn/.ssh/id_rsa</value>

<description>the location stored ssh key</description>

</property>

<property>

<name>dfs.ha.fencing.ssh.connect-timeout</name>

<value>1000</value>

</property>

<property>

<name>dfs.journalnode.edits.dir</name>

<value>/home/yarn/Hadoop/hadoop-2.4.1/hdfs_dir/journal/</value>

</property>

<property>

<name>dfs.namenode.shared.edits.dir</name>

<value>qjournal://master:8485;slave1:8485;slave2:8485/hadoop-journal</value>

<description>A directory on shared storage between the multiple namenodes

in an HA cluster. This directory will be written by the active and read

by the standby in order to keep the namespaces synchronized. This directory

does not need to be listed in dfs.namenode.edits.dir above. It should be

left empty in a non-HA cluster.

</description>

</property>

<!-- NN HA related configuration **END** -->

<!-- NameNode related configuration **BEGIN** -->

<property>

<name>dfs.namenode.name.dir</name>

<value>file:///home/yarn/Hadoop/hadoop-2.4.1/hdfs_dir/name</value>

<description>Path on the local filesystem where the NameNode stores

the namespace and transaction logs persistently. If this is a

comma-delimited list of directories then the name table is replicated

in all of the directories, for redundancy.</description>

</property>

<property>

<name>dfs.blocksize</name>

<value>1048576</value>

<description>

HDFS block size in bytes. 128MB (134217728) suits large file-systems;

the value used here is the minimum block size, 1048576 (1MB).

</description>

</property>

<property>

<name>dfs.namenode.handler.count</name>

<value>10</value>

<description>More NameNode server threads to handle RPCs from large

number of DataNodes.</description>

</property>

<!--

<property>

<name>dfs.namenode.hosts</name>

<value>master</value>

<description>If necessary, use this to control the list of allowable datanodes.</description>

</property>

<property>

<name>dfs.namenode.hosts.exclude</name>

<value>slave1,slave2,slave3</value>

<description>If necessary, use this to control the list of excluded datanodes.</description>

</property>

-->

<!-- NameNode related configuration **END** -->

<!-- DataNode related configuration **BEGIN** -->

<property>

<name>dfs.datanode.data.dir</name>

<value>file:///home/yarn/Hadoop/hadoop-2.4.1/hdfs_dir/data</value>

<description>Comma-separated list of paths on the local filesystem of

a DataNode where it should store its blocks. If this is a

comma-delimited list of directories, then data will be stored in all

named directories, typically on different devices.</description>

</property>

<!-- DataNode related configuration **END** -->

</configuration>
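In a long hdfs-site.xml, a typo in a NameNode ID suffix is easy to miss, because HDFS looks up per-NameNode keys only by the IDs listed in dfs.ha.namenodes.<nameservice>. The Python sketch below is a hypothetical helper, not part of Hadoop. It loads a *-site.xml with the standard library and reports declared NameNode IDs that lack an rpc-address or http-address key, plus dfs.namenode.* keys whose NameNode ID was never declared. It assumes the nameservice is myhadoop and that dfs.ha.namenodes.myhadoop is nn1,nn2 (that property is not shown in this part of the file). The rpc-address values and nn1's http-address in SAMPLE are placeholders. The sample deliberately gives servicerpc-address the IDs n1/n2 so there is something to report.

```python
import xml.etree.ElementTree as ET


def load_hadoop_conf(xml_text):
    """Parse a Hadoop *-site.xml document (str or bytes) into a {name: value} dict.

    Names and values are stripped, so a value that wraps onto a second line
    compares equal to its one-line form.
    """
    root = ET.fromstring(xml_text)
    conf = {}
    for prop in root.iter("property"):
        name = prop.findtext("name")
        if name:
            conf[name.strip()] = (prop.findtext("value") or "").strip()
    return conf


# Keys every NameNode ID in an HA nameservice needs (servicerpc-address is optional).
REQUIRED_PER_NN = ("dfs.namenode.rpc-address", "dfs.namenode.http-address")


def check_ha_conf(conf, nameservice):
    """Return a list of problems with the per-NameNode keys of one nameservice."""
    ids_value = conf.get(f"dfs.ha.namenodes.{nameservice}", "")
    nn_ids = [i.strip() for i in ids_value.split(",") if i.strip()]
    problems = []
    # 1. Each declared NameNode ID must have its own RPC and HTTP address.
    for nn_id in nn_ids:
        for key in REQUIRED_PER_NN:
            full_key = f"{key}.{nameservice}.{nn_id}"
            if full_key not in conf:
                problems.append(f"missing {full_key}")
    # 2. A dfs.namenode.*.<nameservice>.<id> key whose <id> was never declared
    #    is never looked up for any NameNode, so flag it.
    marker = f".{nameservice}."
    for name in conf:
        if name.startswith("dfs.namenode.") and marker in name:
            suffix = name.rsplit(marker, 1)[1]
            if suffix not in nn_ids:
                problems.append(f"unknown NameNode ID '{suffix}' in {name}")
    return problems


# Hypothetical sample; rpc-address values and nn1's http-address are placeholders.
SAMPLE = """<configuration>
  <property><name>dfs.ha.namenodes.myhadoop</name><value>nn1,nn2</value></property>
  <property><name>dfs.namenode.rpc-address.myhadoop.nn1</name><value>master:8020</value></property>
  <property><name>dfs.namenode.rpc-address.myhadoop.nn2</name><value>slave1:8020</value></property>
  <property><name>dfs.namenode.http-address.myhadoop.nn1</name><value>master:50070</value></property>
  <property><name>dfs.namenode.http-address.myhadoop.nn2</name><value>slave1:50070</value></property>
  <property><name>dfs.namenode.servicerpc-address.myhadoop.n1</name><value>master:53310</value></property>
  <property><name>dfs.namenode.servicerpc-address.myhadoop.n2</name><value>slave1:53310</value></property>
</configuration>"""

if __name__ == "__main__":
    for problem in check_ha_conf(load_hadoop_conf(SAMPLE), "myhadoop"):
        print(problem)
```

To check a real file instead of SAMPLE, read it as bytes, e.g. load_hadoop_conf(open("/home/yarn/Hadoop/hadoop-2.4.1/etc/hadoop/hdfs-site.xml", "rb").read()). ElementTree skips the <?xml-stylesheet?> processing instruction and XML comments, so commented-out properties are not counted.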

——————————————————————————–

5.yarn-site.xml

<?xml version="1.0"?>

<!-- Licensed under the Apache License, Version 2.0 (the "License"); you

may not use this file except in compliance with the License. You may obtain

a copy of the License at http://www.apache.org/licenses/LICENSE-2.0 Unless

required by applicable law or agreed to in writing, software distributed

under the License is distributed on an "AS IS" BASIS, WITHOUT WARRANTIES

OR CONDITIONS OF ANY KIND, either express or implied. See the License for

the specific language governing permissions and limitations under the License.

See accompanying LICENSE file. -->

<configuration>

<!-- ResourceManager and NodeManager related configuration ***BEGIN*** -->

<property>

<name>yarn.acl.enable</name>

<value>false</value>

<description>Enable ACLs? Defaults to false.</description>

</property>

<property>

<name>yarn.admin.acl</name>

<value>*</value>

<description>

ACL to set admins on the cluster. ACLs take the form comma-separated-users, a space, then comma-separated-groups, e.g. user1,user2 group1,group2.

Defaults to special value of * which means anyone. Special value of just space means no one has access.

</description>

</property>

<property>

<name>yarn.log-aggregation-enable</name>

<value>false</value>

<description>Configuration to enable or disable log aggregation</description>

</property>

<!-- ResourceManager and NodeManager related configuration ***END*** -->

<!-- ResourceManager related configuration ***BEGIN*** -->

<property>

<name>yarn.resourcemanager.hostname</name>

<value>master</value>

<description>The hostname of the RM.</description>

</property>

<property>

<name>yarn.resourcemanager.webapp.https.address</name>

<value>${yarn.resourcemanager.hostname}:8090</value>

<description>The HTTPS address of the RM web application.</description>

</property>

<property>

<name>yarn.resourcemanager.address</name>

<value>${yarn.resourcemanager.hostname}:8032</value>

<description>ResourceManager host:port for clients to submit jobs.</description>

</property>

<property>

<name>yarn.resourcemanager.scheduler.address</name>

<value>${yarn.resourcemanager.hostname}:8030</value>

<description>ResourceManager host:port for ApplicationMasters to talk to Scheduler to obtain resources.</description>

</property>

<property>

<name>yarn.resourcemanager.resource-tracker.address</name>

<value>${yarn.resourcemanager.hostname}:8031</value>

<description>ResourceManager host:port for NodeManagers.</description>

</property>

<property>

<name>yarn.resourcemanager.admin.address</name>

<value>${yarn.resourcemanager.hostname}:8033</value>

<description>ResourceManager host:port for administrative commands.</description>

</property>

<property>

<name>yarn.resourcemanager.webapp.address</name>

<value>${yarn.resourcemanager.hostname}:8088</value>

<description>ResourceManager web-ui host:port.</description>

</property>

<property>

<name>yarn.resourcemanager.scheduler.class</name>

<value>org.apache.hadoop.yarn.server.resourcemanager.scheduler.fair.FairScheduler</value>

<description>

ResourceManager Scheduler class.

CapacityScheduler (recommended), FairScheduler (also recommended), or FifoScheduler

</description>

</property>

<property>

<name>yarn.scheduler.minimum-allocation-mb</name>

<value>512</value>

<description>

Minimum limit of memory to allocate to each container request at the Resource Manager.

In MBs

</description>

</property>

<property>

<name>yarn.scheduler.maximum-allocation-mb</name>

<value>2048</value>

<description>

Maximum limit of memory to allocate to each container request at the Resource Manager.

In MBs.

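Note: in this configuration yarn.scheduler.maximum-allocation-mb (2048) is larger than yarn.nodemanager.resource.memory-mb (1024), so a container larger than 1024 MB passes the maximum-allocation check but cannot fit on any single NodeManager.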

</description>

</property>

<!--

<property>

<name>yarn.resourcemanager.nodes.include-path</name>

<value></value>

<description>

List of permitted NodeManagers.

If necessary, use this to control the list of allowable NodeManagers.

</description>

</property>

<property>

<name>yarn.resourcemanager.nodes.exclude-path</name>

<value></value>

<description>

List of excluded NodeManagers.

If necessary, use this to control the list of excluded NodeManagers.

</description>

</property>

-->

<!-- ResourceManager related configuration ***END*** -->

<!-- NodeManager related configuration ***BEGIN*** -->

<property>

<name>yarn.nodemanager.resource.memory-mb</name>

<value>1024</value>

<description>

Resource i.e. available physical memory, in MB, for given NodeManager.

Defines total available resources on the NodeManager to be made available to running containers.

</description>

</property>

<property>

<name>yarn.nodemanager.vmem-pmem-ratio</name>

<value>2.1</value>

<description>

Ratio between virtual memory to physical memory when setting memory limits for containers.

Container allocations are expressed in terms of physical memory,

and virtual memory usage is allowed to exceed this allocation by this ratio.

</description>

</property>

<property>

<name>yarn.nodemanager.local-dirs</name>

<value>/home/yarn/Hadoop/hadoop-2.4.1/yarn_dir/local</value>

<description>

Comma-separated list of paths on the local filesystem where intermediate data is written.

Multiple paths help spread disk i/o.

</description>

</property>

<property>

<name>yarn.nodemanager.log-dirs</name>

<value>/home/yarn/Hadoop/hadoop-2.4.1/yarn_dir/log</value>

<description>

Comma-separated list of paths on the local filesystem where logs are written.

Multiple paths help spread disk i/o.

</description>

</property>

<property>

<name>yarn.nodemanager.log.retain-seconds</name>

<value>10800</value>

<description>

Default time (in seconds) to retain log files on the NodeManager.

***Only applicable if log-aggregation is disabled.

</description>

</property>

<property>

<name>yarn.nodemanager.remote-app-log-dir</name>

<value>/yarn/log-aggregation</value>

<description>

HDFS directory where the application logs are moved on application completion.

Need to set appropriate permissions.

***Only applicable if log-aggregation is enabled.

</description>

</property>

<property>

<name>yarn.nodemanager.remote-app-log-dir-suffix</name>

<value>logs</value>

<description>

Suffix appended to the remote log dir.

Logs will be aggregated to ${yarn.nodemanager.remote-app-log-dir}/${user}/${thisParam}.

***Only applicable if log-aggregation is enabled.

</description>

</property>

<property>

<name>yarn.nodemanager.aux-services</name>

<value>mapreduce_shuffle</value>

<description>Shuffle service that needs to be set for Map Reduce applications.</description>

</property>

<property>

<name>yarn.nodemanager.resource.cpu-vcores</name>

<value>1</value>

<description>Number of CPU cores that can be allocated for containers.</description>

</property>

<!-- NodeManager related configuration ***END*** -->

<!-- History Server related configuration ***BEGIN*** -->

<property>

<name>yarn.log-aggregation.retain-seconds</name>

<value>-1</value>

<description>

How long to keep aggregation logs before deleting them.

-1 disables.

Be careful, set this too small and you will spam the name node.

</description>

</property>

<property>

<name>yarn.log-aggregation.retain-check-interval-seconds</name>

<value>-1</value>

<description>

Time between checks for aggregated log retention.

If set to 0 or a negative value then the value is computed as one-tenth of the aggregated log retention time.

Be careful, set this too small and you will spam the name node.

</description>

</property>

<!-- History Server related configuration ***END*** -->

<property>

<name>yarn.scheduler.fair.allocation.file</name>

<value>${yarn.home.dir}/etc/hadoop/fairscheduler.xml</value>

<description>fairscheduler config file path</description>

<!-- Surprisingly, the official docs don't list this property, but it does work! -->

</property>

</configuration>
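Several of the yarn-site.xml settings above interact through simple arithmetic. The Python sketch below is a hypothetical helper, not a YARN API. It only restates the rules given in this file's descriptions: a container's virtual-memory ceiling is its physical allocation times yarn.nodemanager.vmem-pmem-ratio; a request must stay within yarn.scheduler.maximum-allocation-mb and also fit in one NodeManager's yarn.nodemanager.resource.memory-mb; the retention-check interval falls back to one-tenth of the retention time when set to 0 or a negative value; and aggregated logs go under ${remote-app-log-dir}/${user}/${suffix}. It looks at memory only and deliberately ignores vcores and any rounding the scheduler applies to requests.

```python
# Values copied from the yarn-site.xml above.
NM_MEMORY_MB = 1024        # yarn.nodemanager.resource.memory-mb
MAX_ALLOC_MB = 2048        # yarn.scheduler.maximum-allocation-mb
VMEM_PMEM_RATIO = 2.1      # yarn.nodemanager.vmem-pmem-ratio
REMOTE_LOG_DIR = "/yarn/log-aggregation"  # yarn.nodemanager.remote-app-log-dir
REMOTE_LOG_SUFFIX = "logs"                # yarn.nodemanager.remote-app-log-dir-suffix


def vmem_limit_mb(container_mb, ratio=VMEM_PMEM_RATIO):
    """Virtual-memory ceiling of a container: its physical allocation times the ratio."""
    return container_mb * ratio


def fits_on_node(request_mb, nm_mb=NM_MEMORY_MB, max_mb=MAX_ALLOC_MB):
    """Memory-only check: within the scheduler maximum AND within one NodeManager."""
    return request_mb <= max_mb and request_mb <= nm_mb


def retention_check_interval(retain_seconds, check_interval_seconds):
    """Effective seconds between aggregated-log retention checks.

    A negative retain_seconds (such as the -1 used here) is treated as
    "retention disabled" and returns None. A check interval of 0 or less
    falls back to one-tenth of the retention time.
    """
    if retain_seconds < 0:
        return None
    if check_interval_seconds <= 0:
        return retain_seconds / 10
    return check_interval_seconds


def aggregated_log_dir(user, remote_dir=REMOTE_LOG_DIR, suffix=REMOTE_LOG_SUFFIX):
    """HDFS directory ${remote-app-log-dir}/${user}/${suffix} for one user's logs."""
    return f"{remote_dir.rstrip('/')}/{user}/{suffix}"


if __name__ == "__main__":
    print(vmem_limit_mb(1024))                     # ceiling for a 1024 MB container
    print(fits_on_node(1024), fits_on_node(2048))  # second request exceeds one node
    print(retention_check_interval(-1, -1))        # the values used in this file
    print(aggregated_log_dir("yarn"))
```

With the values in this file, a 1024 MB container gets a virtual-memory ceiling of about 2150 MB, while a 2048 MB request is within the maximum allocation but larger than any NodeManager's 1024 MB. Since yarn.log-aggregation-enable is false and yarn.log-aggregation.retain-seconds is -1 here, the two log helpers only matter once aggregation is switched on.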
