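Spark 1.3 is a milestone release that adds many new features, so it is worth a close look. Spark 1.3 requires Scala 2.10.x, while the default Scala version on this system is 2.9, so Scala has to be upgraded first; see the guide to installing Scala 2.10.x on Ubuntu at http://www.linuxidc.com/Linux/2015-04/116455.htm. Once the Scala environment is configured, download the CDH build of Spark.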
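Before upgrading, it can help to confirm which Scala is actually on the PATH. The sketch below is one possible check and not part of the original setup: the helper name `is_scala_2_10` is made up for illustration, and the parsing assumes that `scala -version` prints a line containing `version X.Y.Z`.

```shell
# Hypothetical helper: succeed when a version string belongs to the 2.10.x line.
is_scala_2_10() {
  case "$1" in
    2.10|2.10.*) return 0 ;;
    *) return 1 ;;
  esac
}

# Check the locally installed Scala, if there is one.
if command -v scala >/dev/null 2>&1; then
  # Capture both stdout and stderr, then pull out the number after "version ".
  installed=$(scala -version 2>&1 | sed -n 's/.*version \([0-9][0-9.]*\).*/\1/p')
  if is_scala_2_10 "$installed"; then
    echo "Scala $installed is in the 2.10.x line"
  else
    echo "Scala $installed found; 2.10.x is needed"
  fi
else
  echo "scala is not on the PATH"
fi
```

The `case` pattern deliberately matches only `2.10` and `2.10.*`, so versions such as 2.9.2 or 2.11.0 are rejected.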

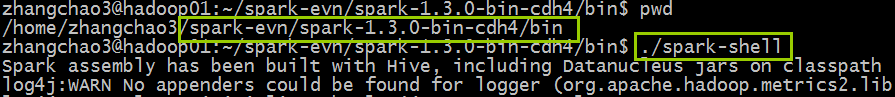

Once the download finishes, extract the archive and run ./spark-shell from its bin directory.
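As a rough sketch, the extract-and-launch step might look like the following. The archive name `spark-1.3.0-bin-cdh4.tgz` is an assumption inferred from the directory name that appears in the log; use the name of the file that was actually downloaded.

```shell
# Assumed archive name, inferred from the directory shown in the log;
# adjust it to match the downloaded file.
archive="spark-1.3.0-bin-cdh4.tgz"
spark_home="${archive%.tgz}"   # strip the .tgz suffix to get the directory name

if [ -f "$archive" ]; then
  tar -xzf "$archive"                      # unpack into ./$spark_home
  cd "$spark_home/bin" && ./spark-shell    # start the interactive shell
else
  echo "archive $archive not found; download it first" >&2
fi
```

The `-f` guard only avoids a confusing `tar` error when the script is run from the wrong directory.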

The log is as follows:

www.linuxidc.com@Hadoop01:~/spark-evn/spark-1.3.0-bin-cdh4/bin$ ./spark-shell

Spark assembly has been built with Hive, including Datanucleus jars on classpath

log4j:WARN No appenders could be found for logger (org.apache.hadoop.metrics2.lib.MutableMetricsFactory).

log4j:WARN Please initialize the log4j system properly.

log4j:WARN See http://logging.apache.org/log4j/1.2/faq.html#noconfig for more info.

Using Spark's default log4j profile: org/apache/spark/log4j-defaults.properties

15/04/14 00:03:30 INFO SecurityManager: Changing view acls to: zhangchao3

15/04/14 00:03:30 INFO SecurityManager: Changing modify acls to: zhangchao3

15/04/14 00:03:30 INFO SecurityManager: SecurityManager: authentication disabled; ui acls disabled; users with view permissions: Set(zhangchao3); users with modify permissions: Set(zhangchao3)

15/04/14 00:03:30 INFO HttpServer: Starting HTTP Server

15/04/14 00:03:30 INFO Server: jetty-8.y.z-SNAPSHOT

15/04/14 00:03:30 INFO AbstractConnector: Started SocketConnector@0.0.0.0:45918

15/04/14 00:03:30 INFO Utils: Successfully started service 'HTTP class server' on port 45918.

Welcome to

      ____              __
     / __/__  ___ _____/ /__
    _\ \/ _ \/ _ `/ __/  '_/
   /___/ .__/\_,_/_/ /_/\_\   version 1.3.0
      /_/

Using Scala version 2.10.4 (OpenJDK 64-Bit Server VM, Java 1.7.0_75)

Type in expressions to have them evaluated.

Type :help for more information.

15/04/14 00:03:33 WARN Utils: Your hostname, hadoop01 resolves to a loopback address: 127.0.1.1; using 172.18.147.71 instead (on interface em1)

15/04/14 00:03:33 WARN Utils: Set SPARK_LOCAL_IP if you need to bind to another address

15/04/14 00:03:33 INFO SparkContext: Running Spark version 1.3.0

15/04/14 00:03:33 INFO SecurityManager: Changing view acls to: zhangchao3

15/04/14 00:03:33 INFO SecurityManager: Changing modify acls to: zhangchao3

15/04/14 00:03:33 INFO SecurityManager: SecurityManager: authentication disabled; ui acls disabled; users with view permissions: Set(zhangchao3); users with modify permissions: Set(zhangchao3)

15/04/14 00:03:33 INFO Slf4jLogger: Slf4jLogger started

15/04/14 00:03:33 INFO Remoting: Starting remoting

15/04/14 00:03:33 INFO Remoting: Remoting started; listening on addresses :[akka.tcp://sparkDriver@172.18.147.71:51629]

15/04/14 00:03:33 INFO Utils: Successfully started service 'sparkDriver' on port 51629.

15/04/14 00:03:33 INFO SparkEnv: Registering MapOutputTracker

15/04/14 00:03:33 INFO SparkEnv: Registering BlockManagerMaster

15/04/14 00:03:33 INFO DiskBlockManager: Created local directory at /tmp/spark-d398c8f3-6345-41f9-a712-36cad4a45e67/blockmgr-255070a6-19a9-49a5-a117-e4e8733c250a

15/04/14 00:03:33 INFO MemoryStore: MemoryStore started with capacity 265.4 MB

15/04/14 00:03:33 INFO HttpFileServer: HTTP File server directory is /tmp/spark-296eb142-92fc-46e9-bea8-f6065aa8f49d/httpd-4d6e4295-dd96-48bc-84b8-c26815a9364f

15/04/14 00:03:33 INFO HttpServer: Starting HTTP Server

15/04/14 00:03:33 INFO Server: jetty-8.y.z-SNAPSHOT

15/04/14 00:03:33 INFO AbstractConnector: Started SocketConnector@0.0.0.0:56529

15/04/14 00:03:33 INFO Utils: Successfully started service 'HTTP file server' on port 56529.

15/04/14 00:03:33 INFO SparkEnv: Registering OutputCommitCoordinator

15/04/14 00:03:33 INFO Server: jetty-8.y.z-SNAPSHOT

15/04/14 00:03:33 INFO AbstractConnector: Started SelectChannelConnector@0.0.0.0:4040

15/04/14 00:03:33 INFO Utils: Successfully started service 'SparkUI' on port 4040.

15/04/14 00:03:33 INFO SparkUI: Started SparkUI at http://172.18.147.71:4040

15/04/14 00:03:33 INFO Executor: Starting executor ID <driver> on host localhost

15/04/14 00:03:33 INFO Executor: Using REPL class URI: http://172.18.147.71:45918

15/04/14 00:03:33 INFO AkkaUtils: Connecting to HeartbeatReceiver: akka.tcp://sparkDriver@172.18.147.71:51629/user/HeartbeatReceiver

15/04/14 00:03:33 INFO NettyBlockTransferService: Server created on 55429

15/04/14 00:03:33 INFO BlockManagerMaster: Trying to register BlockManager

15/04/14 00:03:33 INFO BlockManagerMasterActor: Registering block manager localhost:55429 with 265.4 MB RAM, BlockManagerId(<driver>, localhost, 55429)

15/04/14 00:03:33 INFO BlockManagerMaster: Registered BlockManager

15/04/14 00:03:34 INFO SparkILoop: Created spark context..

Spark context available as sc.

15/04/14 00:03:34 INFO SparkILoop: Created sql context (with Hive support)..

SQL context available as sqlContext.

scala>

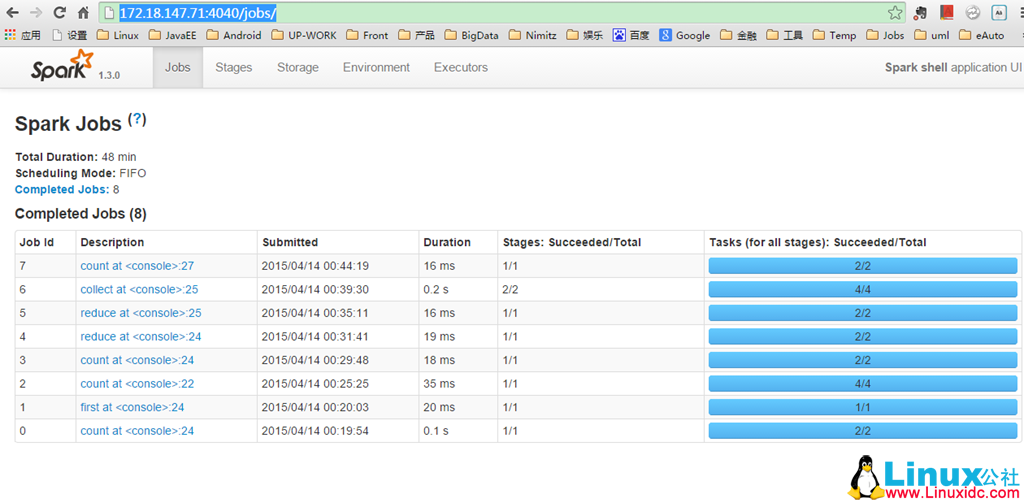

Opening http://172.18.147.71:4040/jobs/ in a browser shows how Spark is running.
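The startup log also warns that the hostname resolves to a loopback address and suggests setting SPARK_LOCAL_IP to bind to a different one. A minimal sketch of doing so before launching the shell is shown below; the address is simply the one reported in the log and will differ on other machines.

```shell
# Address taken from the warning in the log; replace it with the address
# of the interface Spark should bind to on your machine.
export SPARK_LOCAL_IP="172.18.147.71"

# Then start the shell as before from the bin directory:
#   ./spark-shell
echo "SPARK_LOCAL_IP=$SPARK_LOCAL_IP"
```

Putting the export in the shell profile instead would apply it to every later launch rather than only to the current session.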

Permanent link to this article: http://www.linuxidc.com/Linux/2015-04/116458.htm