This article describes how to install Hadoop 2.2.0 as a single node.

First, prepare a virtual machine running Ubuntu 12.04.4.

Java environment:

root@hm1:~# mvn --version

Apache Maven 3.1.1 (0728685237757ffbf44136acec0402957f723d9a; 2013-09-17 15:22:22+0000)

Maven home: /usr/apache-maven-3.1.1

Java version: 1.7.0_51, vendor: Oracle Corporation

Java home: /usr/lib/jvm/java-7-oracle/jre

Default locale: en_US, platform encoding: UTF-8

OS name: “linux”, version: “3.2.0-59-virtual”, arch: “amd64”, family: “unix”

Create the Hadoop group and user: group hadoop, user name hduser, password hduser.

root@hm1:~# addgroup hadoop

Adding group `hadoop’ (GID 1001) …

Done.

root@hm1:~# adduser --ingroup hadoop hduser

Adding user `hduser’ …

Adding new user `hduser’ (1001) with group `hadoop’ …

Creating home directory `/home/hduser’ …

Copying files from `/etc/skel’ …

Enter new UNIX password:

Retype new UNIX password:

passwd: password updated successfully

Changing the user information for hduser

Enter the new value, or press ENTER for the default

Full Name []:

Room Number []:

Work Phone []:

Home Phone []:

Other []:

Is the information correct? [Y/n] y

Add hduser to the sudo group:

root@hm1:~# adduser hduser sudo

Adding user `hduser’ to group `sudo’ …

Adding user hduser to group sudo

Done.

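Later, when hduser runs sudo, you may get the following error:

hduser is not in the sudoers file. This incident will be reported.

To prevent it, use the visudo command to edit /etc/sudoers and add the last line below (the two commented lines are already in the file and are shown for context):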

# Uncomment to allow members of group sudo to not need a password

# %sudo ALL=NOPASSWD: ALL

hduser ALL=(ALL) ALL

Log out as root and log back in as hduser:

ssh hduser@192.168.1.70

So that the Hadoop scripts are not prompted for a password, create an SSH key for passwordless login to localhost:

hduser@hm1:~$ ssh-keygen -t rsa -P ''

Generating public/private rsa key pair.

Enter file in which to save the key (/home/hduser/.ssh/id_rsa):

Created directory ‘/home/hduser/.ssh’.

Your identification has been saved in /home/hduser/.ssh/id_rsa.

Your public key has been saved in /home/hduser/.ssh/id_rsa.pub.

The key fingerprint is:

b8:b6:3d:c2:24:1f:7b:a3:00:88:72:86:76:5a:d8:c2 hduser@hm1

The key’s randomart image is:

+–[RSA 2048]—-+

| |

| |

| |

|ooo . |

|=E++ . S |

|oo=.. o. |

| . .=oo |

| o=o+ |

| o+.o |

+—————–+

hduser@hm1:~$ cat ~/.ssh/id_rsa.pub >> ~/.ssh/authorized_keys

hduser@hm1:~$ ssh localhost

The authenticity of host ‘localhost (127.0.0.1)’ can’t be established.

ECDSA key fingerprint is fb:a8:6c:4c:51:57:b2:6d:36:b2:9c:62:94:30:40:a7.

Are you sure you want to continue connecting (yes/no)? yes

Warning: Permanently added ‘localhost’ (ECDSA) to the list of known hosts.

Welcome to Ubuntu 12.04.4 LTS (GNU/Linux 3.2.0-59-virtual x86_64)

* Documentation: https://help.ubuntu.com/

Last login: Fri Feb 21 07:59:05 2014 from 192.168.1.5

If ssh localhost does not prompt for a password, the setup above worked.
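The key setup above can also be scripted so that running it twice does no harm. Below is a minimal bash sketch that is not part of any Hadoop tooling, and the function name add_authorized_key is hypothetical. It appends the public key only if that exact line is not already present. It also applies the restrictive permissions (700 on ~/.ssh, 600 on authorized_keys) that sshd commonly expects before it accepts key logins.

```bash
#!/usr/bin/env bash
# Sketch: add a public key to an authorized_keys file at most once and
# tighten permissions. add_authorized_key is a hypothetical helper name.
add_authorized_key() {
  local pubkey_file="$1" auth_file="$2" ssh_dir key
  ssh_dir=$(dirname "$auth_file")
  key=$(head -n 1 "$pubkey_file") || return 1
  [ -n "$key" ] || { echo "empty public key: $pubkey_file" >&2; return 1; }

  mkdir -p "$ssh_dir" && chmod 700 "$ssh_dir" || return 1
  touch "$auth_file" || return 1

  # Make sure an existing file ends with a newline before appending to it.
  if [ -s "$auth_file" ] && [ -n "$(tail -c 1 "$auth_file")" ]; then
    printf '\n' >> "$auth_file"
  fi
  # -q quiet, -x whole-line match, -F fixed string (no regex)
  if ! grep -qxF -- "$key" "$auth_file"; then
    printf '%s\n' "$key" >> "$auth_file"
  fi
  chmod 600 "$auth_file"
}
```

With the layout used here, call it as add_authorized_key ~/.ssh/id_rsa.pub ~/.ssh/authorized_keys after ssh-keygen has created the key.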

Now download Hadoop. Download site: http://apache.mirrors.lucidnetworks.net/hadoop/common/

Run the following commands to download and extract it, and to give hduser ownership of the files:

$ cd ~

$ wget http://www.trieuvan.com/apache/hadoop/common/hadoop-2.2.0/hadoop-2.2.0.tar.gz

$ sudo tar vxzf hadoop-2.2.0.tar.gz -C /usr/local

$ cd /usr/local

$ sudo mv hadoop-2.2.0 hadoop

$ sudo chown -R hduser:hadoop hadoop

Assuming you are logged in as hduser, set up the environment variables by adding the following to ~/.bashrc:

#Hadoop variables

export JAVA_HOME=/usr/lib/jvm/java-7-oracle/

export HADOOP_INSTALL=/usr/local/hadoop

export PATH=$PATH:$HADOOP_INSTALL/bin

export PATH=$PATH:$HADOOP_INSTALL/sbin

export HADOOP_MAPRED_HOME=$HADOOP_INSTALL

export HADOOP_COMMON_HOME=$HADOOP_INSTALL

export HADOOP_HDFS_HOME=$HADOOP_INSTALL

export YARN_HOME=$HADOOP_INSTALL

###end of paste
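If you paste this block by hand twice, ~/.bashrc ends up with duplicate exports. The following is a small bash sketch (the function name append_hadoop_env is hypothetical). It appends the same block only when the #Hadoop variables marker line is not already in the file. The default paths are the ones used in this article; on any other machine, treat them as assumptions.

```bash
#!/usr/bin/env bash
# Sketch: append the Hadoop variables to a shell rc file only once, using the
# "#Hadoop variables" line as a marker. append_hadoop_env is hypothetical.
append_hadoop_env() {
  local rc_file="$1"
  local hadoop_dir="${2:-/usr/local/hadoop}"
  local java_dir="${3:-/usr/lib/jvm/java-7-oracle/}"
  touch "$rc_file" || return 1
  if grep -qxF '#Hadoop variables' "$rc_file"; then
    return 0   # block already present, nothing to do
  fi
  # Unescaped variables are expanded now; \$ is written literally to the file.
  cat >> "$rc_file" <<EOF
#Hadoop variables
export JAVA_HOME=$java_dir
export HADOOP_INSTALL=$hadoop_dir
export PATH=\$PATH:\$HADOOP_INSTALL/bin
export PATH=\$PATH:\$HADOOP_INSTALL/sbin
export HADOOP_MAPRED_HOME=\$HADOOP_INSTALL
export HADOOP_COMMON_HOME=\$HADOOP_INSTALL
export HADOOP_HDFS_HOME=\$HADOOP_INSTALL
export YARN_HOME=\$HADOOP_INSTALL
###end of paste
EOF
}
```

For example, running append_hadoop_env ~/.bashrc and then source ~/.bashrc applies the variables to the current shell.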

Next, set JAVA_HOME in /usr/local/hadoop/etc/hadoop/hadoop-env.sh:

# The java implementation to use.

export JAVA_HOME=/usr/lib/jvm/java-7-oracle/
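Hard-coding the path in hadoop-env.sh is likely needed because start-dfs.sh launches the daemons over ssh, and those non-interactive shells do not necessarily pick up JAVA_HOME from ~/.bashrc. The edit can also be made with sed. This sketch assumes the stock file contains a line starting with export JAVA_HOME= (for example export JAVA_HOME=${JAVA_HOME}). The name set_hadoop_java_home is a hypothetical helper name.

```bash
#!/usr/bin/env bash
# Sketch: replace the "export JAVA_HOME=..." line(s) in hadoop-env.sh with a
# fixed path. Returns non-zero if no such line ends up in the file.
# set_hadoop_java_home is a hypothetical helper name.
set_hadoop_java_home() {
  local env_file="$1" java_home="$2"
  [ -d "$java_home" ] || echo "warning: $java_home does not exist" >&2
  # '|' is the sed delimiter because the path contains '/';
  # the path must not contain '|' or '&'.
  sed -i "s|^export JAVA_HOME=.*|export JAVA_HOME=${java_home}|" "$env_file"
  grep -q "^export JAVA_HOME=${java_home}\$" "$env_file"
}
```

Usage: set_hadoop_java_home /usr/local/hadoop/etc/hadoop/hadoop-env.sh /usr/lib/jvm/java-7-oracle/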

Log out, log back in as hduser, and verify the installation:

hduser@hm1:~$ hadoop version

Hadoop 2.2.0

Subversion https://svn.apache.org/repos/asf/hadoop/common -r 1529768

Compiled by hortonmu on 2013-10-07T06:28Z

Compiled with protoc 2.5.0

From source with checksum 79e53ce7994d1628b240f09af91e1af4

This command was run using /usr/local/hadoop/share/hadoop/common/hadoop-common-2.2.0.jar

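Hadoop is now installed. Next, configure it so that the services can start.

In /usr/local/hadoop/etc/hadoop/core-site.xml, add the following inside <configuration></configuration>.

This keeps the Hadoop 1.x style HDFS setting fs.default.name. Hadoop 2.x deprecates that name in favor of fs.defaultFS but still accepts it.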

<configuration>

<property>

<name>fs.default.name</name>

<value>hdfs://localhost:9000</value>

</property>

</configuration>
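Every *-site.xml file in this article uses the same <configuration>/<property>/<name>/<value> layout, so these files can also be generated rather than edited by hand. The following is a minimal bash sketch that assumes you are willing to overwrite the whole file, which loses any comments in the stock file. The names hadoop_site_xml and xml_escape are hypothetical.

```bash
#!/usr/bin/env bash
# Sketch: print a Hadoop *-site.xml document from name/value pairs.
# hadoop_site_xml and xml_escape are hypothetical helper names.

# Escape the characters that would break XML text content.
xml_escape() {
  printf '%s' "$1" | sed -e 's/&/\&amp;/g' -e 's/</\&lt;/g' -e 's/>/\&gt;/g'
}

hadoop_site_xml() {
  if [ $(( $# % 2 )) -ne 0 ]; then
    echo "usage: hadoop_site_xml name value [name value ...]" >&2
    return 1
  fi
  printf '<?xml version="1.0"?>\n<configuration>\n'
  while [ "$#" -gt 0 ]; do
    printf '  <property>\n    <name>%s</name>\n    <value>%s</value>\n  </property>\n' \
      "$(xml_escape "$1")" "$(xml_escape "$2")"
    shift 2
  done
  printf '</configuration>\n'
}
```

For the configuration above: hadoop_site_xml fs.default.name hdfs://localhost:9000 > /usr/local/hadoop/etc/hadoop/core-site.xml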

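Add the following configuration to /usr/local/hadoop/etc/hadoop/yarn-site.xml.

It tells the NodeManager to start the MapReduce shuffle server as an auxiliary service. In Hadoop 2.2.0 the service name must be mapreduce_shuffle, with an underscore. A name containing a dot, such as mapreduce.shuffle, is rejected and the NodeManager fails to start.

<configuration>

<!-- Site specific YARN configuration properties -->

<property>

<name>yarn.nodemanager.aux-services</name>

<value>mapreduce_shuffle</value>

</property>

<property>

<name>yarn.nodemanager.aux-services.mapreduce_shuffle.class</name>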

<value>org.apache.hadoop.mapred.ShuffleHandler</value>

</property>

</configuration>
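Hadoop 2.2.0's NodeManager refuses to start when a name in yarn.nodemanager.aux-services contains anything other than letters, digits and underscores, or begins with a digit. This is why the 2.2.0 service is spelled mapreduce_shuffle rather than the older mapreduce.shuffle. Below is a bash sketch of that rule for checking a value before restarting the services. The function name is hypothetical, and the pattern is my reading of the rule, not code taken from Hadoop.

```bash
#!/usr/bin/env bash
# Sketch: validate a yarn.nodemanager.aux-services value (comma-separated)
# against the naming rule: letters, digits and '_' only, no leading digit.
# valid_aux_services is a hypothetical helper name.
valid_aux_services() {
  local names name
  IFS=',' read -r -a names <<< "$1"
  for name in "${names[@]}"; do
    name="${name//[[:space:]]/}"   # ignore spaces around commas
    if [[ ! "$name" =~ ^[A-Za-z_][A-Za-z0-9_]*$ ]]; then
      echo "invalid aux-service name: '$name'" >&2
      return 1
    fi
  done
}
```

Usage: valid_aux_services mapreduce_shuffle succeeds, while valid_aux_services mapreduce.shuffle prints an error and returns 1.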

Create /usr/local/hadoop/etc/hadoop/mapred-site.xml from its template (run this in /usr/local/hadoop/etc/hadoop):

cp mapred-site.xml.template mapred-site.xml

Then set YARN as the MapReduce framework:

<configuration>

<property>

<name>mapreduce.framework.name</name>

<value>yarn</value>

</property>

</configuration>
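To check what a site file actually sets (for example, that mapreduce.framework.name is yarn), a rough bash helper can pull a property's value out of files laid out like the ones in this article. It is only a sketch. The name get_conf_value is hypothetical, and the helper matches text rather than parsing XML, so comments or CDATA sections could confuse it.

```bash
#!/usr/bin/env bash
# Sketch: print the <value> of one property from a Hadoop *-site.xml file.
# Text matching only, not an XML parser. get_conf_value is hypothetical.
get_conf_value() {
  local file="$1" name="$2" name_re value
  # Escape dots so "a.b" only matches a literal "a.b".
  name_re=$(printf '%s' "$name" | sed 's/[.]/\\./g')
  # Join all lines, then take the first matching <name>/<value> pair.
  value=$(tr -d '\n' < "$file" \
    | grep -o "<name>[[:space:]]*${name_re}[[:space:]]*</name>[[:space:]]*<value>[^<]*</value>" \
    | head -n 1 \
    | sed -e 's/.*<value>//' -e 's/<\/value>$//')
  [ -n "$value" ] || return 1
  printf '%s\n' "$value"
}
```

For the file above, get_conf_value /usr/local/hadoop/etc/hadoop/mapred-site.xml mapreduce.framework.name should print yarn.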

Create the directories where HDFS stores its data, then configure HDFS:

hduser@hm1:/usr/local/hadoop/etc/hadoop$ cd ~/

hduser@hm1:~$ mkdir -p mydata/hdfs/namenode

hduser@hm1:~$ mkdir -p mydata/hdfs/datanode

Edit /usr/local/hadoop/etc/hadoop/hdfs-site.xml:

<configuration>

<property>

<name>dfs.replication</name>

<value>1</value>

</property>

<property>

<name>dfs.namenode.name.dir</name>

<value>file:/home/hduser/mydata/hdfs/namenode</value>

</property>

<property>

<name>dfs.datanode.data.dir</name>

<value>file:/home/hduser/mydata/hdfs/datanode</value>

</property>

</configuration>
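It is safest to make sure both directories exist and are writable by hduser before formatting. The following is a bash sketch (prepare_hdfs_dirs is a hypothetical name, and the default base path is the one used above). It creates the directories, checks that they are writable, and prints the file: URIs in the form used in hdfs-site.xml.

```bash
#!/usr/bin/env bash
# Sketch: create the NameNode/DataNode directories, check they are writable,
# and print them as file: URIs. prepare_hdfs_dirs is a hypothetical name.
prepare_hdfs_dirs() {
  local base="${1:-$HOME/mydata/hdfs}" dir
  for dir in "$base/namenode" "$base/datanode"; do
    mkdir -p "$dir" || return 1
    [ -w "$dir" ] || { echo "not writable: $dir" >&2; return 1; }
  done
  # One line per directory: the dfs.namenode.name.dir and dfs.datanode.data.dir values
  printf 'file:%s\n' "$base/namenode" "$base/datanode"
}
```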

Now format the NameNode. The output is long; it is kept in full below:

hduser@hm1:/usr/local/hadoop/etc/hadoop$ hdfs namenode -format

14/02/21 14:40:43 INFO namenode.NameNode: STARTUP_MSG:

/************************************************************

STARTUP_MSG: Starting NameNode

STARTUP_MSG: host = hm1/127.0.1.1

STARTUP_MSG: args = [-format]

STARTUP_MSG: version = 2.2.0

STARTUP_MSG: classpath = /usr/local/hadoop/etc/hadoop:/usr/local/hadoop/share/hadoop/common/lib/netty-3.6.2.Final.jar:/usr/local/hadoop/share/hadoop/common/lib/asm-3.2.jar:/usr/local/hadoop/share/hadoop/common/lib/commons-beanutils-core-1.8.0.jar:/usr/local/hadoop/share/hadoop/common/lib/commons-digester-1.8.jar:/usr/local/hadoop/share/hadoop/common/lib/slf4j-api-1.7.5.jar:/usr/local/hadoop/share/hadoop/common/lib/hadoop-annotations-2.2.0.jar:/usr/local/hadoop/share/hadoop/common/lib/commons-cli-1.2.jar:/usr/local/hadoop/share/hadoop/common/lib/jasper-compiler-5.5.23.jar:/usr/local/hadoop/share/hadoop/common/lib/protobuf-java-2.5.0.jar:/usr/local/hadoop/share/hadoop/common/lib/junit-4.8.2.jar:/usr/local/hadoop/share/hadoop/common/lib/commons-io-2.1.jar:/usr/local/hadoop/share/hadoop/common/lib/commons-math-2.1.jar:/usr/local/hadoop/share/hadoop/common/lib/jettison-1.1.jar:/usr/local/hadoop/share/hadoop/common/lib/jackson-xc-1.8.8.jar:/usr/local/hadoop/share/hadoop/common/lib/jaxb-impl-2.2.3-1.jar:/usr/local/hadoop/share/hadoop/common/lib/paranamer-2.3.jar:/usr/local/hadoop/share/hadoop/common/lib/servlet-api-2.5.jar:/usr/local/hadoop/share/hadoop/common/lib/jetty-6.1.26.jar:/usr/local/hadoop/share/hadoop/common/lib/zookeeper-3.4.5.jar:/usr/local/hadoop/share/hadoop/common/lib/hadoop-auth-2.2.0.jar:/usr/local/hadoop/share/hadoop/common/lib/commons-beanutils-1.7.0.jar:/usr/local/hadoop/share/hadoop/common/lib/jsch-0.1.42.jar:/usr/local/hadoop/share/hadoop/common/lib/commons-httpclient-3.1.jar:/usr/local/hadoop/share/hadoop/common/lib/jsp-api-2.1.jar:/usr/local/hadoop/share/hadoop/common/lib/commons-el-1.0.jar:/usr/local/hadoop/share/hadoop/common/lib/commons-configuration-1.6.jar:/usr/local/hadoop/share/hadoop/common/lib/commons-net-3.1.jar:/usr/local/hadoop/share/hadoop/common/lib/jersey-json-1.9.jar:/usr/local/hadoop/share/hadoop/common/lib/jsr305-1.3.9.jar:/usr/local/hadoop/share/hadoop/common/lib/stax-api-1.0.1.jar:/usr/local/hadoop/share/hadoop/common/lib/commons-logging-1.1.1.jar:/usr/local/hadoop/share/hadoop/common/lib/jersey-server-1.9.jar:/usr/local/hadoop/share/hadoop/common/lib/guava-11.0.2.jar:/usr/local/hadoop/share/hadoop/common/lib/commons-compress-1.4.1.jar:/usr/local/hadoop/share/hadoop/common/lib/jets3t-0.6.1.jar:/usr/local/hadoop/share/hadoop/common/lib/log4j-1.2.17.jar:/usr/local/hadoop/share/hadoop/common/lib/slf4j-log4j12-1.7.5.jar:/usr/local/hadoop/share/hadoop/common/lib/xmlenc-0.52.jar:/usr/local/hadoop/share/hadoop/common/lib/mockito-all-1.8.5.jar:/usr/local/hadoop/share/hadoop/common/lib/activation-1.1.jar:/usr/local/hadoop/share/hadoop/common/lib/jackson-core-asl-1.8.8.jar:/usr/local/hadoop/share/hadoop/common/lib/jasper-runtime-5.5.23.jar:/usr/local/hadoop/share/hadoop/common/lib/snappy-java-1.0.4.1.jar:/usr/local/hadoop/share/hadoop/common/lib/xz-1.0.jar:/usr/local/hadoop/share/hadoop/common/lib/commons-collections-3.2.1.jar:/usr/local/hadoop/share/hadoop/common/lib/avro-1.7.4.jar:/usr/local/hadoop/share/hadoop/common/lib/jetty-util-6.1.26.jar:/usr/local/hadoop/share/hadoop/common/lib/jaxb-api-2.2.2.jar:/usr/local/hadoop/share/hadoop/common/lib/jackson-mapper-asl-1.8.8.jar:/usr/local/hadoop/share/hadoop/common/lib/jersey-core-1.9.jar:/usr/local/hadoop/share/hadoop/common/lib/commons-codec-1.4.jar:/usr/local/hadoop/share/hadoop/common/lib/jackson-jaxrs-1.8.8.jar:/usr/local/hadoop/share/hadoop/common/lib/commons-lang-2.5.jar:/usr/local/hadoop/share/hadoop/common/hadoop-common-2.2.0.jar:/usr/local/hadoop/share/hadoop/common/hadoop-common-2.2.0-tests.jar:/usr/local/hadoop/share/hadoop/common/hadoop-nfs-2.2.0.jar:/usr/local/hadoop/share/hadoop/hdfs:/usr/local/hadoop/share/hadoop/hdfs/lib/netty-3.6.2.Final.jar:/usr/local/hadoop/share/hadoop/hdfs/lib/asm-3.2.jar:/usr/local/hadoop/share/hadoop/hdfs/lib/commons-cli-1.2.jar:/usr/local/hadoop/share/hadoop/hdfs/lib/protobuf-java-2.5.0.jar:/usr/local/hadoop/share/hadoop/hdfs/lib/commons-io-2.1.jar:/usr/local/hadoop/share/hadoop/hdfs/lib/servlet-api-2.5.jar:/usr/local/hadoop/share/hadoop/hdfs/lib/jetty-6.1.26.jar:/usr/local/hadoop/share/hadoop/hdfs/lib/jsp-api-2.1.jar:/usr/local/hadoop/share/hadoop/hdfs/lib/commons-el-1.0.jar:/usr/local/hadoop/share/hadoop/hdfs/lib/jsr305-1.3.9.jar:/usr/local/hadoop/share/hadoop/hdfs/lib/commons-logging-1.1.1.jar:/usr/local/hadoop/share/hadoop/hdfs/lib/jersey-server-1.9.jar:/usr/local/hadoop/share/hadoop/hdfs/lib/guava-11.0.2.jar:/usr/local/hadoop/share/hadoop/hdfs/lib/log4j-1.2.17.jar:/usr/local/hadoop/share/hadoop/hdfs/lib/xmlenc-0.52.jar:/usr/local/hadoop/share/hadoop/hdfs/lib/commons-daemon-1.0.13.jar:/usr/local/hadoop/share/hadoop/hdfs/lib/jackson-core-asl-1.8.8.jar:/usr/local/hadoop/share/hadoop/hdfs/lib/jasper-runtime-5.5.23.jar:/usr/local/hadoop/share/hadoop/hdfs/lib/jetty-util-6.1.26.jar:/usr/local/hadoop/share/hadoop/hdfs/lib/jackson-mapper-asl-1.8.8.jar:/usr/local/hadoop/share/hadoop/hdfs/lib/jersey-core-1.9.jar:/usr/local/hadoop/share/hadoop/hdfs/lib/commons-codec-1.4.jar:/usr/local/hadoop/share/hadoop/hdfs/lib/commons-lang-2.5.jar:/usr/local/hadoop/share/hadoop/hdfs/hadoop-hdfs-nfs-2.2.0.jar:/usr/local/hadoop/share/hadoop/hdfs/hadoop-hdfs-2.2.0-tests.jar:/usr/local/hadoop/share/hadoop/hdfs/hadoop-hdfs-2.2.0.jar:/usr/local/hadoop/share/hadoop/yarn/lib/netty-3.6.2.Final.jar:/usr/local/hadoop/share/hadoop/yarn/lib/asm-3.2.jar:/usr/local/hadoop/share/hadoop/yarn/lib/aopalliance-1.0.jar:/usr/local/hadoop/share/hadoop/yarn/lib/hadoop-annotations-2.2.0.jar:/usr/local/hadoop/share/hadoop/yarn/lib/protobuf-java-2.5.0.jar:/usr/local/hadoop/share/hadoop/yarn/lib/javax.inject-1.jar:/usr/local/hadoop/share/hadoop/yarn/lib/commons-io-2.1.jar:/usr/local/hadoop/share/hadoop/yarn/lib/guice-servlet-3.0.jar:/usr/local/hadoop/share/hadoop/yarn/lib/jersey-guice-1.9.jar:/usr/local/hadoop/share/hadoop/yarn/lib/hamcrest-core-1.1.jar:/usr/local/hadoop/share/hadoop/yarn/lib/paranamer-2.3.jar:/usr/local/hadoop/share/hadoop/yarn/lib/guice-3.0.jar:/usr/local/hadoop/share/hadoop/yarn/lib/junit-4.10.jar:/usr/local/hadoop/share/hadoop/yarn/lib/jersey-server-1.9.jar:/usr/local/hadoop/share/hadoop/yarn/lib/commons-compress-1.4.1.jar:/usr/local/hadoop/share/hadoop/yarn/lib/log4j-1.2.17.jar:/usr/local/hadoop/share/hadoop/yarn/lib/jackson-core-asl-1.8.8.jar:/usr/local/hadoop/share/hadoop/yarn/lib/snappy-java-1.0.4.1.jar:/usr/local/hadoop/share/hadoop/yarn/lib/xz-1.0.jar:/usr/local/hadoop/share/hadoop/yarn/lib/avro-1.7.4.jar:/usr/local/hadoop/share/hadoop/yarn/lib/jackson-mapper-asl-1.8.8.jar:/usr/local/hadoop/share/hadoop/yarn/lib/jersey-core-1.9.jar:/usr/local/hadoop/share/hadoop/yarn/hadoop-yarn-common-2.2.0.jar:/usr/local/hadoop/share/hadoop/yarn/hadoop-yarn-site-2.2.0.jar:/usr/local/hadoop/share/hadoop/yarn/hadoop-yarn-applications-unmanaged-am-launcher-2.2.0.jar:/usr/local/hadoop/share/hadoop/yarn/hadoop-yarn-server-tests-2.2.0.jar:/usr/local/hadoop/share/hadoop/yarn/hadoop-yarn-server-common-2.2.0.jar:/usr/local/hadoop/share/hadoop/yarn/hadoop-yarn-client-2.2.0.jar:/usr/local/hadoop/share/hadoop/yarn/hadoop-yarn-server-resourcemanager-2.2.0.jar:/usr/local/hadoop/share/hadoop/yarn/hadoop-yarn-server-web-proxy-2.2.0.jar:/usr/local/hadoop/share/hadoop/yarn/hadoop-yarn-server-nodemanager-2.2.0.jar:/usr/local/hadoop/share/hadoop/yarn/hadoop-yarn-applications-distributedshell-2.2.0.jar:/usr/local/hadoop/share/hadoop/yarn/hadoop-yarn-api-2.2.0.jar:/usr/local/hadoop/share/hadoop/mapreduce/lib/netty-3.6.2.Final.jar:/usr/local/hadoop/share/hadoop/mapreduce/lib/asm-3.2.jar:/usr/local/hadoop/share/hadoop/mapreduce/lib/aopalliance-1.0.jar:/usr/local/hadoop/share/hadoop/mapreduce/lib/hadoop-annotations-2.2.0.jar:/usr/local/hadoop/share/hadoop/mapreduce/lib/protobuf-java-2.5.0.jar:/usr/local/hadoop/share/hadoop/mapreduce/lib/javax.inject-1.jar:/usr/local/hadoop/share/hadoop/mapreduce/lib/commons-io-2.1.jar:/usr/local/hadoop/share/hadoop/mapreduce/lib/guice-servlet-3.0.jar:/usr/local/hadoop/share/hadoop/mapreduce/lib/jersey-guice-1.9.jar:/usr/local/hadoop/share/hadoop/mapreduce/lib/hamcrest-core-1.1.jar:/usr/local/hadoop/share/hadoop/mapreduce/lib/paranamer-2.3.jar:/usr/local/hadoop/share/hadoop/mapreduce/lib/guice-3.0.jar:/usr/local/hadoop/share/hadoop/mapreduce/lib/junit-4.10.jar:/usr/local/hadoop/share/hadoop/mapreduce/lib/jersey-server-1.9.jar:/usr/local/hadoop/share/hadoop/mapreduce/lib/commons-compress-1.4.1.jar:/usr/local/hadoop/share/hadoop/mapreduce/lib/log4j-1.2.17.jar:/usr/local/hadoop/share/hadoop/mapreduce/lib/jackson-core-asl-1.8.8.jar:/usr/local/hadoop/share/hadoop/mapreduce/lib/snappy-java-1.0.4.1.jar:/usr/local/hadoop/share/hadoop/mapreduce/lib/xz-1.0.jar:/usr/local/hadoop/share/hadoop/mapreduce/lib/avro-1.7.4.jar:/usr/local/hadoop/share/hadoop/mapreduce/lib/jackson-mapper-asl-1.8.8.jar:/usr/local/hadoop/share/hadoop/mapreduce/lib/jersey-core-1.9.jar:/usr/local/hadoop/share/hadoop/mapreduce/hadoop-mapreduce-client-jobclient-2.2.0-tests.jar:/usr/local/hadoop/share/hadoop/mapreduce/hadoop-mapreduce-client-core-2.2.0.jar:/usr/local/hadoop/share/hadoop/mapreduce/hadoop-mapreduce-client-hs-plugins-2.2.0.jar:/usr/local/hadoop/share/hadoop/mapreduce/hadoop-mapreduce-client-jobclient-2.2.0.jar:/usr/local/hadoop/share/hadoop/mapreduce/hadoop-mapreduce-examples-2.2.0.jar:/usr/local/hadoop/share/hadoop/mapreduce/hadoop-mapreduce-client-common-2.2.0.jar:/usr/local/hadoop/share/hadoop/mapreduce/hadoop-mapreduce-client-app-2.2.0.jar:/usr/local/hadoop/share/hadoop/mapreduce/hadoop-mapreduce-client-hs-2.2.0.jar:/usr/local/hadoop/share/hadoop/mapreduce/hadoop-mapreduce-client-shuffle-2.2.0.jar:/contrib/capacity-scheduler/*.jar

STARTUP_MSG: build = https://svn.apache.org/repos/asf/hadoop/common -r 1529768; compiled by ‘hortonmu’ on 2013-10-07T06:28Z

STARTUP_MSG: java = 1.7.0_51

************************************************************/

14/02/21 14:40:43 INFO namenode.NameNode: registered UNIX signal handlers for [TERM, HUP, INT]

Java HotSpot(TM) 64-Bit Server VM warning: You have loaded library /usr/local/hadoop/lib/native/libhadoop.so.1.0.0 which might have disabled stack guard. The VM will try to fix the stack guard now.

It’s highly recommended that you fix the library with ‘execstack -c <libfile>’, or link it with ‘-z noexecstack’.

14/02/21 14:40:45 WARN util.NativeCodeLoader: Unable to load native-hadoop library for your platform… using builtin-java classes where applicable

Formatting using clusterid: CID-0cd9886e-b262-4504-8915-9ef03bcb7d9e

14/02/21 14:40:45 INFO namenode.HostFileManager: read includes:

HostSet(

)

14/02/21 14:40:45 INFO namenode.HostFileManager: read excludes:

HostSet(

)

14/02/21 14:40:45 INFO blockmanagement.DatanodeManager: dfs.block.invalidate.limit=1000

14/02/21 14:40:45 INFO util.GSet: Computing capacity for map BlocksMap

14/02/21 14:40:45 INFO util.GSet: VM type = 64-bit

14/02/21 14:40:45 INFO util.GSet: 2.0% max memory = 889 MB

14/02/21 14:40:45 INFO util.GSet: capacity = 2^21 = 2097152 entries

14/02/21 14:40:45 INFO blockmanagement.BlockManager: dfs.block.access.token.enable=false

14/02/21 14:40:45 INFO blockmanagement.BlockManager: defaultReplication = 1

14/02/21 14:40:45 INFO blockmanagement.BlockManager: maxReplication = 512

14/02/21 14:40:45 INFO blockmanagement.BlockManager: minReplication = 1

14/02/21 14:40:45 INFO blockmanagement.BlockManager: maxReplicationStreams = 2

14/02/21 14:40:45 INFO blockmanagement.BlockManager: shouldCheckForEnoughRacks = false

14/02/21 14:40:45 INFO blockmanagement.BlockManager: replicationRecheckInterval = 3000

14/02/21 14:40:45 INFO blockmanagement.BlockManager: encryptDataTransfer = false

14/02/21 14:40:45 INFO namenode.FSNamesystem: fsOwner = hduser (auth:SIMPLE)

14/02/21 14:40:45 INFO namenode.FSNamesystem: supergroup = supergroup

14/02/21 14:40:45 INFO namenode.FSNamesystem: isPermissionEnabled = true

14/02/21 14:40:45 INFO namenode.FSNamesystem: HA Enabled: false

14/02/21 14:40:45 INFO namenode.FSNamesystem: Append Enabled: true

14/02/21 14:40:45 INFO util.GSet: Computing capacity for map INodeMap

14/02/21 14:40:45 INFO util.GSet: VM type = 64-bit

14/02/21 14:40:45 INFO util.GSet: 1.0% max memory = 889 MB

14/02/21 14:40:45 INFO util.GSet: capacity = 2^20 = 1048576 entries

14/02/21 14:40:45 INFO namenode.NameNode: Caching file names occuring more than 10 times

14/02/21 14:40:45 INFO namenode.FSNamesystem: dfs.namenode.safemode.threshold-pct = 0.9990000128746033

14/02/21 14:40:45 INFO namenode.FSNamesystem: dfs.namenode.safemode.min.datanodes = 0

14/02/21 14:40:45 INFO namenode.FSNamesystem: dfs.namenode.safemode.extension = 30000

14/02/21 14:40:45 INFO namenode.FSNamesystem: Retry cache on namenode is enabled

14/02/21 14:40:45 INFO namenode.FSNamesystem: Retry cache will use 0.03 of total heap and retry cache entry expiry time is 600000 millis

14/02/21 14:40:45 INFO util.GSet: Computing capacity for map Namenode Retry Cache

14/02/21 14:40:45 INFO util.GSet: VM type = 64-bit

14/02/21 14:40:45 INFO util.GSet: 0.029999999329447746% max memory = 889 MB

14/02/21 14:40:45 INFO util.GSet: capacity = 2^15 = 32768 entries

14/02/21 14:40:45 INFO common.Storage: Storage directory /home/hduser/mydata/hdfs/namenode has been successfully formatted.

14/02/21 14:40:45 INFO namenode.FSImage: Saving image file /home/hduser/mydata/hdfs/namenode/current/fsimage.ckpt_0000000000000000000 using no compression

14/02/21 14:40:45 INFO namenode.FSImage: Image file /home/hduser/mydata/hdfs/namenode/current/fsimage.ckpt_0000000000000000000 of size 198 bytes saved in 0 seconds.

14/02/21 14:40:45 INFO namenode.NNStorageRetentionManager: Going to retain 1 images with txid >= 0

14/02/21 14:40:45 INFO util.ExitUtil: Exiting with status 0

14/02/21 14:40:45 INFO namenode.NameNode: SHUTDOWN_MSG:

/************************************************************

SHUTDOWN_MSG: Shutting down NameNode at hm1/127.0.1.1

************************************************************/

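Don't celebrate yet, though. You still need to build the 64-bit native library yourself, because the release, surprisingly, ships a 32-bit one. Otherwise you get warnings like the ones below.

Run the following from hduser's home directory: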

$ start-dfs.sh

14/02/21 14:45:48 WARN util.NativeCodeLoader: Unable to load native-hadoop library for your platform… using builtin-java classes where applicable

Starting namenodes on [Java HotSpot(TM) 64-Bit Server VM warning: You have loaded library /usr/local/hadoop/lib/native/libhadoop.so.1.0.0 which might have disabled stack guard. The VM will try to fix the stack guard now.

It’s highly recommended that you fix the library with ‘execstack -c <libfile>’, or link it with ‘-z noexecstack’.

localhost]
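You can check directly whether the bundled libhadoop.so is 32-bit or 64-bit. Byte 4 of an ELF file header (EI_CLASS) is 1 for 32-bit objects and 2 for 64-bit ones. The file command reports the same information. The bash sketch below (elf_class is a hypothetical name) reads that byte with od, which is handy inside scripts.

```bash
#!/usr/bin/env bash
# Sketch: print 32 or 64 for an ELF file by reading its EI_CLASS byte
# (offset 4): 1 = ELFCLASS32, 2 = ELFCLASS64. elf_class is hypothetical.
elf_class() {
  local f="$1" magic cls
  # The first four bytes of every ELF file are 0x7f 'E' 'L' 'F'.
  magic=$(head -c 4 "$f" | od -An -t x1 | tr -d ' \n')
  [ "$magic" = "7f454c46" ] || { echo "not an ELF file: $f" >&2; return 1; }
  cls=$(od -An -t u1 -j 4 -N 1 "$f" | tr -d ' \n')
  case "$cls" in
    1) echo 32 ;;
    2) echo 64 ;;
    *) echo "unknown ELF class: $cls" >&2; return 1 ;;
  esac
}
```

After the self-built libraries are copied into place, elf_class /usr/local/hadoop/lib/native/libhadoop.so.1.0.0 should print 64.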

Download the source code:

wget http://mirror.esocc.com/apache/hadoop/common/hadoop-2.2.0/hadoop-2.2.0-src.tar.gz

Then extract it:

tar zxvf hadoop-2.2.0-src.tar.gz

cd hadoop-2.2.0-src

Run the following command to start the build:

~/code/hadoop-2.2.0-src$ mvn package -Pdist,native -DskipTests -Dtar

After Maven downloads a large number of dependencies, the build fails with:

[ERROR] COMPILATION ERROR :

[INFO] ————————————————————-

[ERROR] /home/hduser/code/hadoop-2.2.0-src/hadoop-common-project/hadoop-auth/src/test/java/org/apache/hadoop/security/authentication/client/AuthenticatorTestCase.java:[88,11] error: cannot access AbstractLifeCycle

[ERROR] class file for org.mortbay.component.AbstractLifeCycle not found

/home/hduser/code/hadoop-2.2.0-src/hadoop-common-project/hadoop-auth/src/test/java/org/apache/hadoop/security/authentication/client/AuthenticatorTestCase.java:[96,29] error: cannot access LifeCycle

[ERROR] class file for org.mortbay.component.LifeCycle not found

Edit hadoop-common-project/hadoop-auth/pom.xml and add this dependency to its <dependencies> section:

<dependency>

<groupId>org.mortbay.jetty</groupId>

<artifactId>jetty-util</artifactId>

<scope>test</scope>

</dependency>

Build again; this error is gone.

Next comes an error because the Protocol Buffers library is not installed. Install it:

[INFO] --- hadoop-maven-plugins:2.2.0:protoc (compile-protoc) @ hadoop-common ---

[WARNING] [protoc, --version] failed: java.io.IOException: Cannot run program "protoc": error=2, No such file or directory

sudo apt-get install libprotobuf-dev

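Run the Maven build again, and it fails with a new error: the Ubuntu package does not include the protoc compiler. Never mind. Download the source and build protoc yourself: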

hduser@hm1:/usr/src$ sudo wget https://protobuf.googlecode.com/files/protobuf-2.5.0.tar.gz

…

$ sudo ./configure

$ sudo make

$ sudo make check

$ sudo make install

$ sudo ldconfig

$ protoc --version
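The hadoop version output earlier reports Compiled with protoc 2.5.0, and the protobuf source downloaded here is also 2.5.0. Before rebuilding, it is worth confirming that the protoc now first on the PATH is that version. The following is a bash sketch (check_protoc_version is a hypothetical name). It assumes protoc prints a line of the form libprotoc X.Y.Z, and its exact-match test is a deliberately strict assumption, not a documented Hadoop rule.

```bash
#!/usr/bin/env bash
# Sketch: succeed only if protoc reports exactly the required version.
# An optional second argument supplies the "protoc --version" text directly.
# check_protoc_version is a hypothetical helper name.
check_protoc_version() {
  local required="${1:-2.5.0}" output="${2:-}" actual
  if [ -z "$output" ]; then
    command -v protoc >/dev/null 2>&1 || { echo "protoc not found in PATH" >&2; return 1; }
    output=$(protoc --version)
  fi
  actual="${output#libprotoc }"   # strip the "libprotoc " prefix
  if [ "$actual" != "$required" ]; then
    echo "protoc $actual found, $required required" >&2
    return 1
  fi
}
```

Running check_protoc_version with no arguments checks the protoc found on the PATH. If it fails right after make install, make sure sudo ldconfig has been run, as above.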

Build again, and wait a while. Hadoop not shipping 64-bit libraries really does waste a lot of everyone's time.

Another error:

Could NOT find OpenSSL, try to set the path to OpenSSL root folder in the

[exec] system variable OPENSSL_ROOT_DIR (missing: OPENSSL_LIBRARIES

Install the OpenSSL development library:

sudo apt-get install libssl-dev

One more try, and the build succeeds. The directory /home/hduser/code/hadoop-2.2.0-src/hadoop-dist/target now contains:

hadoop-2.2.0.tar.gz

Extract it, then copy the contents of its lib/native directory into the installed copy, overwriting the 32-bit files:

sudo cp -r ./hadoop-2.2.0/lib/native/* /usr/local/hadoop/lib/native/

Now go back to the home directory and run the following commands. This time it works:

hduser@hm1:~$ start-dfs.sh

Starting namenodes on [localhost]

localhost: namenode running as process 983. Stop it first.

localhost: datanode running as process 1101. Stop it first.

Starting secondary namenodes [0.0.0.0]

0.0.0.0: secondarynamenode running as process 1346. Stop it first.

hduser@hm1:~$ start-yarn.sh

starting yarn daemons

starting resourcemanager, logging to /usr/local/hadoop/logs/yarn-hduser-resourcemanager-hm1.out

localhost: starting nodemanager, logging to /usr/local/hadoop/logs/yarn-hduser-nodemanager-hm1.out

hduser@hm1:~$ jps

32417 Jps

1101 DataNode

1346 SecondaryNameNode

983 NameNode
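Note that this jps listing shows only the three HDFS daemons. ResourceManager and NodeManager, which start-yarn.sh had just reported starting, are missing, which suggests they failed to start or exited. Their logs under /usr/local/hadoop/logs are the place to look. The following is a small bash sketch (missing_daemons is a hypothetical name) that lists which of the expected daemons are absent from jps output.

```bash
#!/usr/bin/env bash
# Sketch: read jps output on stdin and print each expected Hadoop daemon that
# is not running. Returns 1 if anything is missing. missing_daemons is hypothetical.
missing_daemons() {
  local jps_out daemon rc=0
  jps_out=$(cat)
  for daemon in NameNode DataNode SecondaryNameNode ResourceManager NodeManager; do
    # Compare the second column (class name) as a whole line, so that
    # "NameNode" does not match "SecondaryNameNode".
    if ! printf '%s\n' "$jps_out" | awk '{print $2}' | grep -qxF "$daemon"; then
      echo "$daemon"
      rc=1
    fi
  done
  return "$rc"
}
```

Usage: jps | missing_daemons. For the listing above it prints ResourceManager and NodeManager.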

Now run an example program. Note that /etc/hosts on this machine contains:

127.0.0.1 hm1

hduser@hm1:~/code/hadoop-2.2.0-src$ cd /usr/local/hadoop/

hduser@hm1:/usr/local/hadoop$ hadoop jar ./share/hadoop/mapreduce/hadoop-mapreduce-examples-2.2.0.jar pi 2 5

Number of Maps = 2

Samples per Map = 5

Wrote input for Map #0

Wrote input for Map #1

Starting Job

14/02/21 18:07:29 INFO client.RMProxy: Connecting to ResourceManager at /0.0.0.0:8032

14/02/21 18:07:30 INFO ipc.Client: Retrying connect to server: 0.0.0.0/0.0.0.0:8032. Already tried 0 time(s); retry policy is RetryUpToMaximumCountWithFixedSleep(maxRetries=10, sleepTime=1 SECONDS)

14/02/21 18:07:31 INFO ipc.Client: Retrying connect to server: 0.0.0.0/0.0.0.0:8032. Already tried 1 time(s); retry policy is RetryUpToMaximumCountWithFixedSleep(maxRetries=10, sleepTime=1 SECONDS)

14/02/21 18:07:32 INFO ipc.Client: Retrying connect to server: 0.0.0.0/0.0.0.0:8032. Already tried 2 time(s); retry policy is RetryUpToMaximumCountWithFixedSleep(maxRetries=10, sleepTime=1 SECONDS)

The client keeps retrying 0.0.0.0:8032. To fix this, add the following properties to yarn-site.xml:

<property>

<name>yarn.resourcemanager.address</name>

<value>127.0.0.1:8032</value>

</property>

<property>

<name>yarn.resourcemanager.scheduler.address</name>

<value>127.0.0.1:8030</value>

</property>

<property>

<name>yarn.resourcemanager.resource-tracker.address</name>

<value>127.0.0.1:8031</value>

</property>
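Before rerunning the job, you can check whether anything is listening on the three ResourceManager ports configured above. The following is a bash sketch built on bash's /dev/tcp redirection. It only works in a bash built with network redirections, as Ubuntu's normally is. The names port_open and check_rm_ports are hypothetical.

```bash
#!/usr/bin/env bash
# Sketch: report whether the ResourceManager ports from yarn-site.xml accept
# TCP connections. Needs bash /dev/tcp support and coreutils timeout.
# port_open and check_rm_ports are hypothetical helper names.
port_open() {
  local host="$1" port="$2"
  # The child bash receives host as $0 and port as $1.
  timeout 2 bash -c 'exec 3<>"/dev/tcp/$0/$1"' "$host" "$port" 2>/dev/null
}

check_rm_ports() {
  local host="${1:-127.0.0.1}" port rc=0
  # 8032: client RPC, 8030: scheduler, 8031: resource tracker (values used above)
  for port in 8032 8030 8031; do
    if port_open "$host" "$port"; then
      echo "$host:$port open"
    else
      echo "$host:$port closed"
      rc=1
    fi
  done
  return "$rc"
}
```

Running check_rm_ports 127.0.0.1 after start-yarn.sh should report all three ports as open once the ResourceManager is up.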

Reboot the virtual machine and start the services again. The connection problem is now solved.

hduser@hm1:/usr/local/hadoop$ hadoop jar ./share/hadoop/mapreduce/hadoop-mapreduce-examples-2.2.0.jar pi 2 5

Number of Maps = 2

Samples per Map = 5

Wrote input for Map #0

Wrote input for Map #1

Starting Job

14/02/21 18:15:53 INFO client.RMProxy: Connecting to ResourceManager at /127.0.0.1:8032

14/02/21 18:15:54 INFO input.FileInputFormat: Total input paths to process : 2

14/02/21 18:15:54 INFO mapreduce.JobSubmitter: number of splits:2

14/02/21 18:15:54 INFO Configuration.deprecation: user.name is deprecated. Instead, use mapreduce.job.user.name

14/02/21 18:15:54 INFO Configuration.deprecation: mapred.jar is deprecated. Instead, use mapreduce.job.jar

14/02/21 18:15:54 INFO Configuration.deprecation: mapred.map.tasks.speculative.execution is deprecated. Instead, use mapreduce.map.speculative

14/02/21 18:15:54 INFO Configuration.deprecation: mapred.reduce.tasks is deprecated. Instead, use mapreduce.job.reduces

14/02/21 18:15:54 INFO Configuration.deprecation: mapred.output.value.class is deprecated. Instead, use mapreduce.job.output.value.class

14/02/21 18:15:54 INFO Configuration.deprecation: mapred.reduce.tasks.speculative.execution is deprecated. Instead, use mapreduce.reduce.speculative

14/02/21 18:15:54 INFO Configuration.deprecation: mapreduce.map.class is deprecated. Instead, use mapreduce.job.map.class

14/02/21 18:15:54 INFO Configuration.deprecation: mapred.job.name is deprecated. Instead, use mapreduce.job.name

14/02/21 18:15:54 INFO Configuration.deprecation: mapreduce.reduce.class is deprecated. Instead, use mapreduce.job.reduce.class

14/02/21 18:15:54 INFO Configuration.deprecation: mapreduce.inputformat.class is deprecated. Instead, use mapreduce.job.inputformat.class

14/02/21 18:15:54 INFO Configuration.deprecation: mapred.input.dir is deprecated. Instead, use mapreduce.input.fileinputformat.inputdir

14/02/21 18:15:54 INFO Configuration.deprecation: mapred.output.dir is deprecated. Instead, use mapreduce.output.fileoutputformat.outputdir

14/02/21 18:15:54 INFO Configuration.deprecation: mapreduce.outputformat.class is deprecated. Instead, use mapreduce.job.outputformat.class

14/02/21 18:15:54 INFO Configuration.deprecation: mapred.map.tasks is deprecated. Instead, use mapreduce.job.maps

14/02/21 18:15:54 INFO Configuration.deprecation: mapred.output.key.class is deprecated. Instead, use mapreduce.job.output.key.class

14/02/21 18:15:54 INFO Configuration.deprecation: mapred.working.dir is deprecated. Instead, use mapreduce.job.working.dir

14/02/21 18:15:54 INFO mapreduce.JobSubmitter: Submitting tokens for job: job_1393006528872_0001

14/02/21 18:15:54 INFO impl.YarnClientImpl: Submitted application application_1393006528872_0001 to ResourceManager at /127.0.0.1:8032

14/02/21 18:15:55 INFO mapreduce.Job: The url to track the job: http://localhost:8088/proxy/application_1393006528872_0001/

14/02/21 18:15:55 INFO mapreduce.Job: Running job: job_1393006528872_0001

If I were doing this again, I would build the 64-bit version first and then install and configure Hadoop from that build.

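This time, prepare several virtual machines and install Hadoop in distributed mode. Official documentation: http://hadoop.apache.org/docs/current/hadoop-project-dist/hadoop-common/ClusterSetup.html

Hadoop nodes fall into two groups: masters and slaves.

The masters are typically two machines, each dedicated exclusively to its role: one runs the NameNode and the other runs the ResourceManager.

The slaves run both the DataNode and the NodeManager. Unlike the masters, the documentation does not require these two to be on separate machines.

The YARN architecture diagram (not reproduced here) covers only YARN, which is just one part of the Hadoop stack. It does not include HDFS.

For HDFS, see: https://hadoop.apache.org/docs/r2.2.0/hadoop-project-dist/hadoop-hdfs/Federation.html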

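Now install Hadoop and do some basic configuration. Since I compiled a 64-bit Hadoop earlier, I can use that build here.

First prepare a virtual machine: Ubuntu 12.04.4 server, host name: hd1, IP: 192.168.1.71

Then follow "Using Hadoop 2.2.0 on Ubuntu 12.04, Part 1: User permissions" http://www.linuxidc.com/Linux/2014-02/97076.htm

and apply the same settings, except that you should not download the Hadoop package; just copy the compiled 64-bit build into /usr/local.

Then follow "Using Hadoop 2.2.0 on Ubuntu 12.04, Part 2: Configuring a single node server" http://www.linuxidc.com/Linux/2014-02/97076p2.htm

Set the environment variables and edit /usr/local/hadoop/etc/hadoop/hadoop-env.sh, then log out and log back in as hduser.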
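A common form of the hadoop-env.sh edit is to replace the default `export JAVA_HOME=${JAVA_HOME}` line with an explicit JDK path, so that daemons started over ssh (where the login environment may not be loaded) still find Java. The sketch below does that. The function name set_java_home is hypothetical, and the JDK path /usr/lib/jvm/java-7-oracle is assumed from the Java setup used in this series.

```shell
#!/bin/sh
# Hypothetical helper: pin JAVA_HOME in hadoop-env.sh to an explicit path.
# Replaces an existing "export JAVA_HOME=..." line, or appends one if missing.
set_java_home() {
  env_file=$1
  jdk=$2
  if grep -q '^export JAVA_HOME=' "$env_file"; then
    # write to a temp file and move it back instead of relying on GNU sed -i
    sed "s|^export JAVA_HOME=.*|export JAVA_HOME=$jdk|" "$env_file" > "$env_file.tmp" &&
      mv "$env_file.tmp" "$env_file"
  else
    echo "export JAVA_HOME=$jdk" >> "$env_file"
  fi
}
```

After sourcing the function: `set_java_home /usr/local/hadoop/etc/hadoop/hadoop-env.sh /usr/lib/jvm/java-7-oracle`.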

One article says to modify the following file as well. I'm not sure about it, so I'm only recording it here and not changing it for now:

Add JAVA_HOME to libexec/hadoop-config.sh at beginning of the file

hduser@solaiv[~]#vi /opt/hadoop-2.2.0/libexec/hadoop-config.sh

….

export JAVA_HOME=/usr/local/jdk1.6.0_18

….

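Create the temporary directory used by Hadoop:

mkdir $HADOOP_INSTALL/tmp

All the servers created later will be cloned from this VM.

Hadoop 2.3.0 has since been released. It is built mostly the same way as in the earlier article:

Using Hadoop 2.2.0 on Ubuntu 12.04, Part 3: Compiling a 64-bit build http://www.linuxidc.com/Linux/2014-02/97076p3.htm

with one difference: pom.xml no longer needs to be modified, because the jetty bug has been fixed.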

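Preparing multiple virtual machines

Change the hostname of the hd1 VM from the previous article ("Using Hadoop 2.x on Ubuntu, Part 4: Multi-node cluster basic setup") to namenode, IP: 192.168.1.71.

Then clone it to create VM hd2 with hostname resourcemanager, IP: 192.168.1.72, and keep cloning to create datanode1, datanode2 and datanode3.

resourcemanager is not used in this article and can be ignored.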

VM name: hd1

hostname: namenode

IP: 192.168.1.71

VM name: hd2

hostname: resourcemanager

IP: 192.168.1.72

VM name: hd3

hostname: datanode1

IP: 192.168.1.73

VM name: hd4

hostname: datanode2

IP: 192.168.1.74

VM name: hd5

hostname: datanode3

IP: 192.168.1.75

Configuring /etc/hosts

Add the following block to the /etc/hosts file on every machine involved:

#hdfs cluster

192.168.1.71 namenode

192.168.1.72 resourcemanager

192.168.1.73 datanode1

192.168.1.74 datanode2

192.168.1.75 datanode3
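Since the same block has to go into /etc/hosts on every machine, a small idempotent helper avoids adding it twice. This is a sketch: the function name add_cluster_hosts is hypothetical, it uses the "#hdfs cluster" comment line as its marker, and writing to /etc/hosts requires root.

```shell
#!/bin/sh
# Hypothetical helper: append the cluster host entries to a hosts file
# (default /etc/hosts) unless the "#hdfs cluster" marker is already present.
add_cluster_hosts() {
  hosts_file=${1:-/etc/hosts}
  if grep -qx '#hdfs cluster' "$hosts_file"; then
    return 0  # block already added, nothing to do
  fi
  cat >> "$hosts_file" <<'EOF'
#hdfs cluster
192.168.1.71 namenode
192.168.1.72 resourcemanager
192.168.1.73 datanode1
192.168.1.74 datanode2
192.168.1.75 datanode3
EOF
}
```

On each machine it could be run as, for example, `sudo sh -c '. ./add_cluster_hosts.sh && add_cluster_hosts /etc/hosts'` (the file name is hypothetical). Running it a second time leaves the file unchanged.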

Setting up the namenode server

core-site.xml

Now configure namenode: log in as hduser and edit /usr/local/hadoop/etc/hadoop/core-site.xml as follows:

<configuration>

<property>

<name>fs.default.name</name>

<value>hdfs://namenode:9000</value>

</property>

<property>

<name>io.file.buffer.size</name>

<value>131072</value>

</property>

</configuration>

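Notes:

1. In a distributed cluster the namenode URI must use a hostname rather than localhost, as in the first property above. fs.default.name is the deprecated name of fs.defaultFS (the key used in the federation configuration later on); Hadoop 2.2.0 still accepts it.

Because namenode has the /etc/hosts block above, it can resolve its own IP, 192.168.1.71.

2. io.file.buffer.size is raised from its default of 4096 bytes (4 KB) to 131072 bytes (128 KB). It sets how much data is buffered during read and write operations, so each I/O call moves far more bytes. That suits large distributed HDFS clusters: fewer I/O operations and more efficient transfers.

3. These core-site.xml settings apply to all 5 servers.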

hdfs-site.xml

Now configure hdfs-site.xml on namenode, following the official documentation:

Configurations for NameNode:

| Parameter | Value | Notes |
|---|---|---|
| dfs.namenode.name.dir | Path on the local filesystem where the NameNode stores the namespace and transactions logs persistently. | If this is a comma-delimited list of directories then the name table is replicated in all of the directories, for redundancy. |
| dfs.namenode.hosts / dfs.namenode.hosts.exclude | List of permitted/excluded DataNodes. | If necessary, use these files to control the list of allowable datanodes. |
| dfs.blocksize | 268435456 | HDFS blocksize of 256MB for large file-systems. |
| dfs.namenode.handler.count | 100 | More NameNode server threads to handle RPCs from large number of DataNodes. |

My configuration:

<configuration>

<property>

<name>dfs.replication</name>

<value>3</value>

</property>

<property>

<name>dfs.namenode.name.dir</name>

<value>file:/home/hduser/mydata/hdfs/namenode</value>

</property>

<property>

<name>dfs.namenode.hosts</name>

<value>datanode1,datanode2,datanode3</value>

</property>

<property>

<name>dfs.blocksize</name>

<value>268435456</value>

</property>

<property>

<name>dfs.namenode.handler.count</name>

<value>100</value>

</property>

</configuration>

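Notes:

1. The first property is the replication factor: 3 copies. This is the default and is listed here only for clarity.

2. The second is the directory where the namenode stores its metadata files.

Make sure the directory exists:

mkdir -p /home/hduser/mydata/hdfs/namenode

3. The third is meant to be the list of datanode hostnames. Note, however, that according to hdfs-default.xml the property HDFS reads for this is dfs.hosts (with dfs.hosts.exclude for exclusions), and its value is the full path of a file listing the permitted hosts, not a comma-separated list. A dfs.namenode.hosts entry is not read; with dfs.hosts empty, every datanode is allowed to connect, which is why the cluster still works.

4. The fourth is the block size, suited to large files.

5. The fifth is the number of namenode RPC server threads.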
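To make the block size setting concrete: a file occupies ceil(size / blocksize) blocks, and the namenode keeps an in-memory entry for every block, so larger blocks mean fewer objects for big files. A small arithmetic sketch with hypothetical function names, using the values configured above (268435456-byte blocks, 3 replicas); for comparison, the Hadoop 2.x default block size is 134217728 bytes (128 MB).

```shell
#!/bin/sh
# Illustrative arithmetic: number of HDFS blocks for a file of a given size.
# $1 = file size in bytes, $2 = block size (defaults to the 256 MB above).
hdfs_blocks() {
  size=$1
  block=${2:-268435456}
  # ceiling division: a partial last block still counts as one block
  echo $(( (size + block - 1) / block ))
}

# Block replicas stored across the cluster: blocks * replication (default 3).
hdfs_replicas() {
  echo $(( $(hdfs_blocks "$1" "$2") * ${3:-3} ))
}

hdfs_blocks 1073741824   # a 1 GiB file: prints 4
```

With the 128 MB default the same 1 GiB file would need 8 blocks, and with replication 3 the 256 MB layout stores 12 block replicas.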

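Setting up the datanode servers

With namenode configured, move on to the three datanode servers. They use the same /etc/hosts block and the same core-site.xml as namenode, so only hdfs-site.xml remains. Create the data directory, then edit hdfs-site.xml as follows:

mkdir -p /home/hduser/mydata/hdfs/datanode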

<configuration>

<property>

<name>dfs.datanode.data.dir</name>

<value>file:/home/hduser/mydata/hdfs/datanode</value>

</property>

</configuration>

Starting the servers

First format HDFS on namenode:

hdfs namenode -format

Start the namenode service:

hduser@namenode:~$ hadoop-daemon.sh --config $HADOOP_CONF_DIR --script hdfs start namenode

starting namenode, logging to /usr/local/hadoop/logs/hadoop-hduser-namenode-namenode.out

Then start the datanode service on each datanode server:

hduser@datanode1:~$ hadoop-daemon.sh --config $HADOOP_CONF_DIR --script hdfs start datanode

starting datanode, logging to /usr/local/hadoop/logs/hadoop-hduser-datanode-datanode1.out

hduser@datanode2:~$ hadoop-daemon.sh --config $HADOOP_CONF_DIR --script hdfs start datanode

starting datanode, logging to /usr/local/hadoop/logs/hadoop-hduser-datanode-datanode2.out

hduser@datanode3:~$ hadoop-daemon.sh --config $HADOOP_CONF_DIR --script hdfs start datanode

starting datanode, logging to /usr/local/hadoop/logs/hadoop-hduser-datanode-datanode3.out

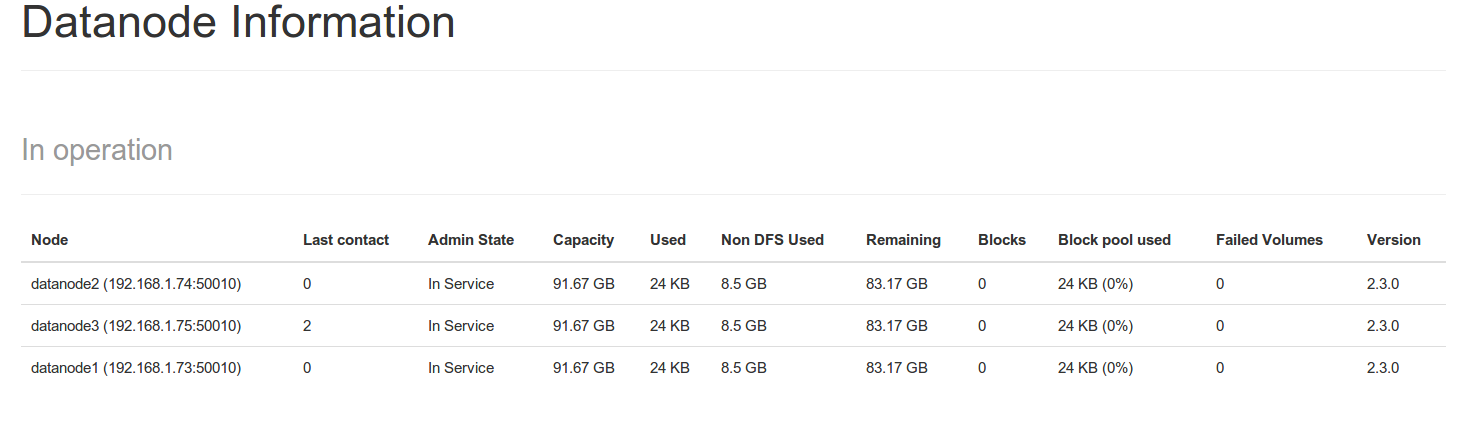

One namenode and three datanodes are now running. Later articles will cover testing and tuning.

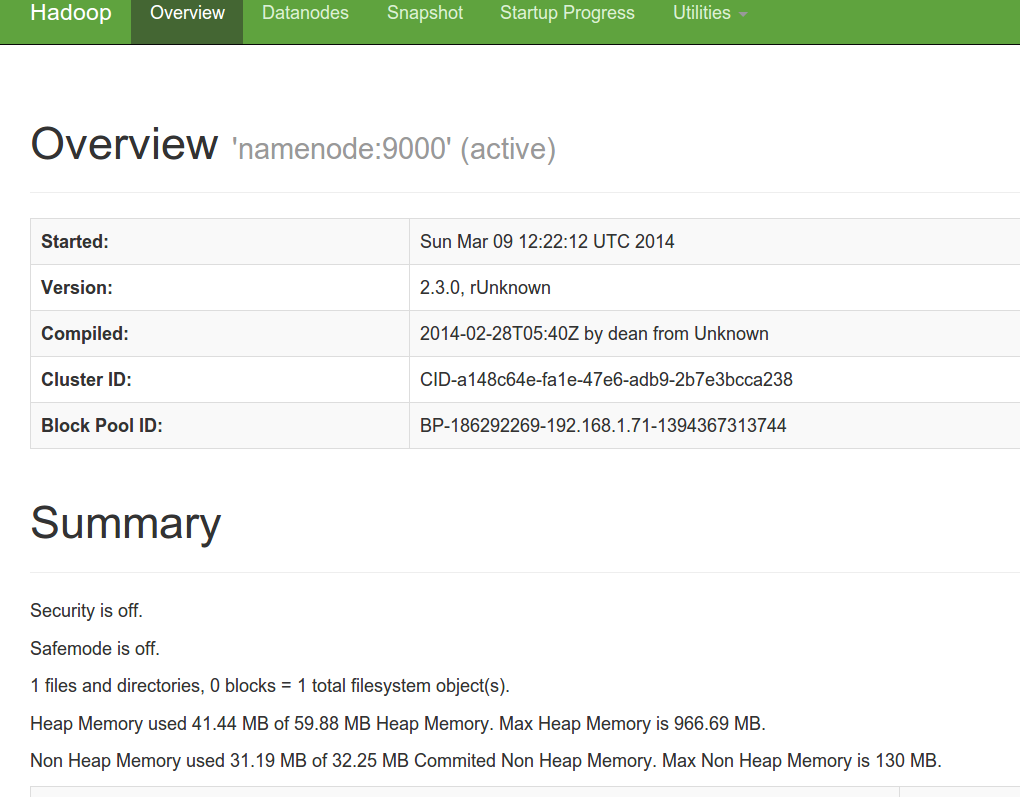

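NameNode web site

The namenode runs a web site, by default on port 50070. Here is my screenshot:

It at least shows that the namenode service started correctly.

Logs

The log also records the copy of the file t.txt: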

2014-03-10 02:43:59,676 INFO org.apache.hadoop.hdfs.StateChange: BLOCK* allocateBlock: /test/t.txt._COPYING_. BP-186292269-192.168.1.71-1394367313744 blk_1073741825_1001{blockUCState=UNDER_CONSTRUCTION, primaryNodeIndex=-1, replicas=[ReplicaUnderConstruction[[DISK]DS-4255c8d4-3ca1-4c5a-8184-83310513fb73:NORMAL|RBW], ReplicaUnderConstruction[[DISK]DS-f988d67f-cdb2-4d57-bd72-0e652527f022:NORMAL|RBW], ReplicaUnderConstruction[[DISK]DS-5f64aac5-bbff-480a-80b8-fee21719cd31:NORMAL|RBW]]}

2014-03-10 02:44:00,147 INFO BlockStateChange: BLOCK* addStoredBlock: blockMap updated: 192.168.1.75:50010 is added to blk_1073741825_1001{blockUCState=UNDER_CONSTRUCTION, primaryNodeIndex=-1, replicas=[ReplicaUnderConstruction[[DISK]DS-4255c8d4-3ca1-4c5a-8184-83310513fb73:NORMAL|RBW], ReplicaUnderConstruction[[DISK]DS-f988d67f-cdb2-4d57-bd72-0e652527f022:NORMAL|RBW], ReplicaUnderConstruction[[DISK]DS-5f64aac5-bbff-480a-80b8-fee21719cd31:NORMAL|RBW]]} size 0

2014-03-10 02:44:00,150 INFO BlockStateChange: BLOCK* addStoredBlock: blockMap updated: 192.168.1.73:50010 is added to blk_1073741825_1001{blockUCState=UNDER_CONSTRUCTION, primaryNodeIndex=-1, replicas=[ReplicaUnderConstruction[[DISK]DS-4255c8d4-3ca1-4c5a-8184-83310513fb73:NORMAL|RBW], ReplicaUnderConstruction[[DISK]DS-f988d67f-cdb2-4d57-bd72-0e652527f022:NORMAL|RBW], ReplicaUnderConstruction[[DISK]DS-5f64aac5-bbff-480a-80b8-fee21719cd31:NORMAL|RBW]]} size 0

2014-03-10 02:44:00,153 INFO BlockStateChange: BLOCK* addStoredBlock: blockMap updated: 192.168.1.74:50010 is added to blk_1073741825_1001{blockUCState=UNDER_CONSTRUCTION, primaryNodeIndex=-1, replicas=[ReplicaUnderConstruction[[DISK]DS-4255c8d4-3ca1-4c5a-8184-83310513fb73:NORMAL|RBW], ReplicaUnderConstruction[[DISK]DS-f988d67f-cdb2-4d57-bd72-0e652527f022:NORMAL|RBW], ReplicaUnderConstruction[[DISK]DS-5f64aac5-bbff-480a-80b8-fee21719cd31:NORMAL|RBW]]} size 0

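Why did the web site show only datanode1?

Because I had forgotten to add the block to /etc/hosts on datanode2 and datanode3. After adding it and restarting the services, all 3 datanodes soon appeared on the web site.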

File System Shell

Run the following command directly on the namenode server:

hduser@namenode:~$ hdfs dfs -ls hdfs://namenode:9000/

Found 1 items

drwxr-xr-x - hduser supergroup 0 2014-03-10 02:30 hdfs://namenode:9000/test

As you can see, the test directory has been created successfully.

Official documentation: http://hadoop.apache.org/docs/current/hadoop-project-dist/hadoop-common/FileSystemShell.html

Getting help

hdfs --help lists all the top-level commands.

To see the usage of a specific dfs command, for example:

hduser@namenode:~$ hdfs dfs -help cp

-cp [-f] [-p] <src> ... <dst>: Copy files that match the file pattern <src> to a
destination. When copying multiple files, the destination
must be a directory. Passing -p preserves access and
modification times, ownership and the mode. Passing -f
overwrites the destination if it already exists.

Copy a local file into HDFS; note that the source needs the file:/// prefix:

hdfs dfs -cp file:///home/hduser/t.txt hdfs://namenode:9000/test/

Here t.txt is a local file.

hduser@namenode:~$ hdfs dfs -ls hdfs://namenode:9000/test

Found 1 items

-rw-r--r-- 3 hduser supergroup 4 2014-03-10 02:44 hdfs://namenode:9000/test/t.txt

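hadoop dfsadmin is the command-line administration tool. To view its help:

hadoop dfsadmin -help

So dfsadmin is an argument to the hadoop program rather than a standalone tool. In current versions, however, hadoop dfsadmin is deprecated in favor of the hdfs dfsadmin command.

Here is a simple report: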

hduser@namenode:~$ hdfs dfsadmin -report

Configured Capacity: 295283847168 (275.00 GB)

Present Capacity: 267895083008 (249.50 GB)

DFS Remaining: 267894972416 (249.50 GB)

DFS Used: 110592 (108 KB)

DFS Used%: 0.00%

Under replicated blocks: 0

Blocks with corrupt replicas: 0

Missing blocks: 0

-------------------------------------------------

Datanodes available: 3 (3 total, 0 dead)

Live datanodes:

Name: 192.168.1.73:50010 (datanode1)

Hostname: datanode1

Decommission Status : Normal

Configured Capacity: 98427949056 (91.67 GB)

DFS Used: 36864 (36 KB)

Non DFS Used: 9129578496 (8.50 GB)

DFS Remaining: 89298333696 (83.17 GB)

DFS Used%: 0.00%

DFS Remaining%: 90.72%

Configured Cache Capacity: 0 (0 B)

Cache Used: 0 (0 B)

Cache Remaining: 0 (0 B)

Cache Used%: 100.00%

Cache Remaining%: 0.00%

Last contact: Mon Mar 10 03:09:14 UTC 2014

Name: 192.168.1.75:50010 (datanode3)

Hostname: datanode3

Decommission Status : Normal

Configured Capacity: 98427949056 (91.67 GB)

DFS Used: 36864 (36 KB)

Non DFS Used: 9129590784 (8.50 GB)

DFS Remaining: 89298321408 (83.17 GB)

DFS Used%: 0.00%

DFS Remaining%: 90.72%

Configured Cache Capacity: 0 (0 B)

Cache Used: 0 (0 B)

Cache Remaining: 0 (0 B)

Cache Used%: 100.00%

Cache Remaining%: 0.00%

Last contact: Mon Mar 10 03:09:16 UTC 2014

Name: 192.168.1.74:50010 (datanode2)

Hostname: datanode2

Decommission Status : Normal

Configured Capacity: 98427949056 (91.67 GB)

DFS Used: 36864 (36 KB)

Non DFS Used: 9129594880 (8.50 GB)

DFS Remaining: 89298317312 (83.17 GB)

DFS Used%: 0.00%

DFS Remaining%: 90.72%

Configured Cache Capacity: 0 (0 B)

Cache Used: 0 (0 B)

Cache Remaining: 0 (0 B)

Cache Used%: 100.00%

Cache Remaining%: 0.00%

Last contact: Mon Mar 10 03:09:14 UTC 2014
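On a larger cluster, a quick way to check each datanode and how much space it has left is to filter this report. A sketch, assuming the field layout of the 2.x output above (a "Name:" line per datanode, followed later by "DFS Remaining%:"); the function name is hypothetical and other Hadoop versions may format the report differently.

```shell
#!/bin/sh
# Hypothetical filter for `hdfs dfsadmin -report` output: print one line per
# datanode in the report with its hostname and remaining DFS capacity.
report_summary() {
  awk '
    # e.g. "Name: 192.168.1.73:50010 (datanode1)" -> host = "datanode1"
    /^Name:/ { host = $3; gsub(/[()]/, "", host) }
    # e.g. "DFS Remaining%: 90.72%" -> the third field is the percentage
    /^DFS Remaining%:/ && host != "" { print host, $3; host = "" }
  '
}
```

Usage: `hdfs dfsadmin -report | report_summary`. For the report above it would print datanode1, datanode3 and datanode2 (in report order), each with 90.72%.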

The following command prints the topology:

hduser@namenode:~$ hdfs dfsadmin -printTopology

Rack: /default-rack

192.168.1.73:50010 (datanode1)

192.168.1.74:50010 (datanode2)

192.168.1.75:50010 (datanode3)

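A rack here is a physical server rack. The three datanode VMs currently run on one physical host, so they are all in /default-rack. This should later evolve into a multi-rack configuration, using one physical machine to simulate each rack.

For details, see the official documentation:

http://hadoop.apache.org/docs/current/hadoop-project-dist/hadoop-common/CommandsManual.html#dfsadmin

The following option is very useful: after new datanodes are added while the HDFS cluster is running, it makes the namenode re-read the hosts (include) and exclude files named in its configuration.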

| Option | Description |
|---|---|
| -refreshNodes | Re-read the hosts and exclude files to update the set of Datanodes that are allowed to connect to the Namenode and those that should be decommissioned or recommissioned. |

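What is rack awareness?

Consider a large Hadoop cluster. For the redundant copies on datanodes to be reliable, datanodes should sit in different racks. But different racks mean network traffic has to pass through routers, which is slower than transfers between datanode servers in the same rack, so performance suffers. A commonly recommended arrangement (I have also seen it in MongoDB documentation) is to put two datanode servers in the same rack and the third in another rack; with multiple data centers, the third should go in another data center.

Hadoop should learn the topology of the datanode servers from its configuration and use it to balance performance and reliability. Reads should come from the same rack in the same data center whenever possible, while writes should place the three copies of a piece of data as follows: two on datanode servers in the same rack of one data center, and the third on a datanode server in a rack of another data center.

How do you set the topology information?

Hadoop needs to know the datanode topology, that is, the data center and the rack id of each datanode server.

First prepare a script that takes an IP address, splits it on '.', and extracts the second and third fields: the second is used as the data center id and the third as the rack id.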

#!/bin/bash

# Set rack id based on IP address.

# Assumes network administrator has complete control

# over IP addresses assigned to nodes and they are

# in the 10.x.y.z address space. Assumes that

# IP addresses are distributed hierarchically. e.g.,

# 10.1.y.z is one data center segment and 10.2.y.z is another;

# 10.1.1.z is one rack, 10.1.2.z is another rack in

# the same segment, etc.)

#

# This is invoked with an IP address as its only argument

# get IP address from the input

ipaddr=$1

# select "x.y" and convert it to "x/y"

segments=`echo $ipaddr | cut -f 2,3 -d '.' --output-delimiter=/`

echo /${segments}

The output:

dean@dean-ubuntu:~$ ./rack-awareness.sh 192.168.1.10

/168/1

dean@dean-ubuntu:~$ ./rack-awareness.sh 192.167.1.10

/167/1

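The script comes from the first reference article below. It had a bug: it read $0, which is the name the script was invoked with, instead of $1, its first argument; changing $0 to $1 fixes it. Hadoop calls the script with an IP address as its argument, and the script returns the topology path made of the data center id and the rack id, a string such as "/167/1". Once you understand the cut command, it is simple.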
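The core-site.xml shown later sets net.topology.script.number.args to 100, which allows Hadoop to pass several addresses to the script in a single call; Hadoop then expects one rack path per argument, in the same order. The script above, like the newLISP version that follows, reads only one argument. Below is a POSIX sh sketch that handles any number of arguments. Falling back to /default-rack for anything that is not a dotted IPv4 address (a hostname, for instance) is my own assumption, borrowing the rack name Hadoop reports when no topology is configured; the function names are hypothetical.

```shell
#!/bin/sh
# Sketch of a multi-argument topology script: same IP scheme as above
# (second octet = data center id, third octet = rack id).

# Print the rack path for one address, e.g. 192.168.1.73 -> /168/1.
rack_of() {
  case $1 in
    ''|*[!0-9.]*) echo "/default-rack"; return ;;  # not a dotted IPv4 address
    *.*.*.*) ;;                                     # at least three dots: parse it
    *) echo "/default-rack"; return ;;
  esac
  rest=${1#*.}       # drop the first octet: 168.1.73
  dc=${rest%%.*}     # second octet:         168
  rest=${rest#*.}    # drop it:              1.73
  rack=${rest%%.*}   # third octet:          1
  echo "/${dc}/${rack}"
}

# Hadoop may pass many addresses per call; print one rack path per argument.
resolve_racks() {
  for addr in "$@"; do
    rack_of "$addr"
  done
}

resolve_racks "$@"
```

Saved as, say, /opt/rack.sh (a hypothetical path) and made executable, `./rack.sh 192.168.1.73 192.168.1.74` prints /168/1 on two lines, one per argument.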

I also wrote a newLISP script that does the same thing:

#!/usr/bin/newlisp

(set 'ip (main-args 2))

(set 'ip-list (parse ip "."))

(set 'r (format "/%s/%s" (ip-list 1) (ip-list 2)))

(println r)

(exit)

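Hadoop has to be told to call this script, which is configured in core-site.xml. Official manual: http://hadoop.apache.org/docs/r2.3.0/hadoop-project-dist/hadoop-common/core-default.xml

Note that if the data centers' IP addresses do not follow the scheme above, the script has to be modified, so it does not fit every situation.

References: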

http://hadoop.apache.org/docs/current/hadoop-project-dist/hadoop-hdfs/HdfsUserGuide.html#Rack_Awareness

https://issues.apache.org/jira/secure/attachment/12345251/Rack_aware_HDFS_proposal.pdf

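Why Federation is needed

HDFS Federation addresses the following problems:

1. Support for multiple namespaces. Why do we need multiple namespaces? Because JVM memory limits how much metadata a single namespace can hold, and therefore also the number of datanodes it can support.

The analysis below comes from another blog post (http://www.linuxidc.com/Linux/2012-04/58295.htm), quoted here:

Because the NameNode keeps all metadata in memory, the number of objects (files + blocks) a single NameNode can store is limited by the heap size of the JVM it runs in. A 50 GB heap can store about 200 million objects, which supports 4000 datanodes and 12 PB of storage (assuming an average file size of 40 MB).

As data grows rapidly, so does the demand for storage. A single datanode has grown from 4 TB to 36 TB, and clusters have grown to 8000 datanodes. Storage requirements have grown from 12 PB to more than 100 PB.

2. Scaling out horizontally to multiple namenodes removes the performance bottleneck that a single namenode creates.

3. Different applications can use their own namenodes, isolating them from one another.

However, Federation still does not solve the single point of failure.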
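The figures quoted in item 1 are consistent with each other if one assumes (the quote does not say so) decimal units, a replication factor of 3, and that each 40 MB file fits in a single block. Under those assumptions:

```latex
\begin{aligned}
\text{file data} &= \frac{12\ \text{PB}}{3} = 4\ \text{PB} \\
\text{files} &= \frac{4 \times 10^{15}\ \text{B}}{40 \times 10^{6}\ \text{B}} = 10^{8} \\
\text{objects} &= \underbrace{10^{8}}_{\text{files}} + \underbrace{10^{8}}_{\text{blocks}} = 2 \times 10^{8} \\
\text{heap per object} &= \frac{50 \times 10^{9}\ \text{B}}{2 \times 10^{8}} = 250\ \text{B}
\end{aligned}
```

So each namespace object costs roughly 250 bytes of heap in this example, and 12 PB spread over 4000 datanodes is about 3 TB of raw storage per datanode.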

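Architecture diagram

Official documentation: http://hadoop.apache.org/docs/current/hadoop-project-dist/hadoop-hdfs/Federation.html

The architecture diagram is shown below:

Test environment

Now prepare two namenode servers, namenode1 and namenode2. The /etc/hosts configuration is: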

#hdfs cluster

192.168.1.71 namenode1

192.168.1.72 namenode2

192.168.1.73 datanode1

192.168.1.74 datanode2

192.168.1.75 datanode3

Now let's look at the configuration of these 5 servers:

Configuration of namenode1 and namenode2

core-site.xml

<configuration>

<property>

<name>fs.defaultFS</name>

<value>hdfs://namenode1:9000</value>

</property>

<property>

<name>io.file.buffer.size</name>

<value>131072</value>

</property>

<property>

<name>net.topology.node.switch.mapping.impl</name>

<value>org.apache.hadoop.net.ScriptBasedMapping</value>

<description> The default implementation of the DNSToSwitchMapping. It

invokes a script specified in net.topology.script.file.name to resolve

node names. If the value for net.topology.script.file.name is not set, the

default value of DEFAULT_RACK is returned for all node names.

</description>

</property>

<property>

<name>net.topology.script.file.name</name>

<value>/opt/rack.lsp</value>

</property>

<property>

<name>net.topology.script.number.args</name>

<value>100</value>

<description> The max number of args that the script configured with

net.topology.script.file.name should be run with. Each arg is an

IP address.

</description>

</property>

</configuration>

Note: on namenode2, hdfs://namenode1:9000 is configured as hdfs://namenode2:9000 instead.

To refresh the namenodes:

hduser@namenode1:~$ refresh-namenodes.sh

Refreshing namenode [namenode1:9000]

Refreshing namenode [namenode2:9000]

The topology can still be queried with:

hdfs dfsadmin -printTopology

hdfs-site.xml

<configuration>

<property>

<name>dfs.replication</name>

<value>3</value>

</property>

<property>

<name>dfs.namenode.name.dir</name>

<value>file:/home/hduser/mydata/hdfs/namenode</value>

</property>

<property>

<name>dfs.namenode.hosts</name>

<value>datanode1,datanode2,datanode3</value>

</property>

<property>

<name>dfs.blocksize</name>

<value>268435456</value>

</property>

<property>

<name>dfs.namenode.handler.count</name>

<value>100</value>

</property>

<!--hdfs federation begin-->

<property>

<name>dfs.federation.nameservices</name>

<value>ns1,ns2</value>

</property>

<property>

<name>dfs.namenode.rpc-address.ns1</name>

<value>namenode1:9000</value>

</property>

<property>

<name>dfs.namenode.rpc-address.ns2</name>

<value>namenode2:9000</value>

</property>

<!--hdfs federation end-->

</configuration>

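Note the added HDFS Federation settings: two namespaces, ns1 and ns2, located on namenode1 and namenode2 respectively. The Hadoop 2.x federation documentation names the first key dfs.nameservices; dfs.federation.nameservices is the older name, which Hadoop still accepts as a deprecated key.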
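The same nameservice block appears in the hdfs-site.xml of both namenodes and all three datanodes, so generating it from one list helps keep the five copies identical. A sketch with a hypothetical function name: it takes nsid=host:port pairs and prints the property elements used in this article, leaving the rest of hdfs-site.xml to you.

```shell
#!/bin/sh
# Hypothetical helper: print the federation <property> elements for
# hdfs-site.xml from "nsid=host:port" pairs.
federation_props() {
  ids=""
  for pair in "$@"; do
    ids="${ids:+$ids,}${pair%%=*}"   # build the comma-separated id list
  done
  printf '<property>\n<name>dfs.federation.nameservices</name>\n<value>%s</value>\n</property>\n' "$ids"
  for pair in "$@"; do
    # one rpc-address property per nameservice: id before "=", address after
    printf '<property>\n<name>dfs.namenode.rpc-address.%s</name>\n<value>%s</value>\n</property>\n' \
      "${pair%%=*}" "${pair#*=}"
  done
}

federation_props ns1=namenode1:9000 ns2=namenode2:9000
```

The output can be pasted between the federation begin/end comments on all five servers.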

The slaves file:

datanode1

datanode2

datanode3

Datanode configuration

core-site.xml

<configuration>

<property>

<name>io.file.buffer.size</name>

<value>131072</value>

</property>

</configuration>

hdfs-site.xml

<configuration>

<property>

<name>dfs.datanode.data.dir</name>

<value>file:/home/hduser/mydata/hdfs/datanode</value>

</property>

<!--hdfs federation begin-->

<property>

<name>dfs.federation.nameservices</name>

<value>ns1,ns2</value>

</property>

<property>

<name>dfs.namenode.rpc-address.ns1</name>

<value>namenode1:9000</value>

</property>

<property>

<name>dfs.namenode.rpc-address.ns2</name>

<value>namenode2:9000</value>

</property>

<!--hdfs federation end-->

</configuration>

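Overall, the namenodes carry most of the configuration, including the rack awareness settings.

Now format and start both namenodes. Use the same cluster ID (csfreebird here) on each, so that both namespaces belong to one federated cluster:

hdfs namenode -format -clusterId csfreebird

hadoop-daemon.sh --config $HADOOP_CONF_DIR --script hdfs start namenode

Because the namenode had been formatted before, answer y to confirm reformatting.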

Admin web site

Remote commands

export JAVA_HOME=/usr/lib/jvm/java-7-oracle/

Now run the following on namenode1 to stop all HDFS services:

hduser@namenode1:~$ stop-dfs.sh

Stopping namenodes on [namenode1 namenode2]

namenode2: no namenode to stop

namenode1: no namenode to stop

datanode2: no datanode to stop

datanode1: no datanode to stop

datanode3: no datanode to stop

Load balancing

On namenode1 you can start the balancer, which uses the default node-level policy. Reference: http://hadoop.apache.org/docs/r2.3.0/hadoop-project-dist/hadoop-hdfs/Federation.html#Balancer

hduser@namenode1:~$ hadoop-daemon.sh --config $HADOOP_CONF_DIR --script "$bin"/hdfs start balancer

starting balancer, logging to /usr/local/hadoop/logs/hadoop-hduser-balancer-namenode1.out

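Error handling

Incompatible cluster IDs

If the datanode log reports this error at startup:

java.io.IOException: Incompatible clusterIDs

delete the directory configured as dfs.datanode.data.dir and start the datanode again. The datanode's storage directory records the cluster ID it was first formatted with; once the namenode is reformatted under a different cluster ID (for example with -clusterId as above), the two no longer match. Deleting the directory also removes every block stored on that datanode, which is acceptable on a new test cluster.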
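To confirm that a cluster ID mismatch is really the cause before deleting anything, compare the clusterID= line in the VERSION files under current/ in each storage directory: dfs.namenode.name.dir/current/VERSION on the namenode and dfs.datanode.data.dir/current/VERSION on the datanode (with this article's settings, /home/hduser/mydata/hdfs/namenode/current/VERSION and /home/hduser/mydata/hdfs/datanode/current/VERSION). A sketch with hypothetical function names; same_cluster compares two local files, so copy one of them over first, for example with scp.

```shell
#!/bin/sh
# Print the clusterID value recorded in a Hadoop storage VERSION file.
cluster_id() {
  sed -n 's/^clusterID=//p' "$1"
}

# Compare the clusterIDs in two VERSION files (namenode first, datanode second).
# Prints the result; returns 0 when they match, 1 otherwise.
same_cluster() {
  nn_id=$(cluster_id "$1")
  dn_id=$(cluster_id "$2")
  if [ -n "$nn_id" ] && [ "$nn_id" = "$dn_id" ]; then
    echo "match: $nn_id"
  else
    echo "mismatch: namenode=$nn_id datanode=$dn_id"
    return 1
  fi
}
```

If same_cluster reports a mismatch, the datanode directory holds data from an earlier format and deleting it as described above is the expected fix.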