
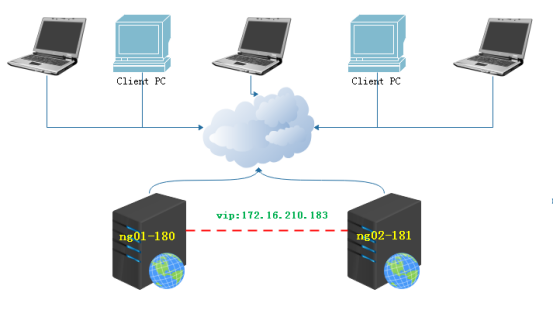

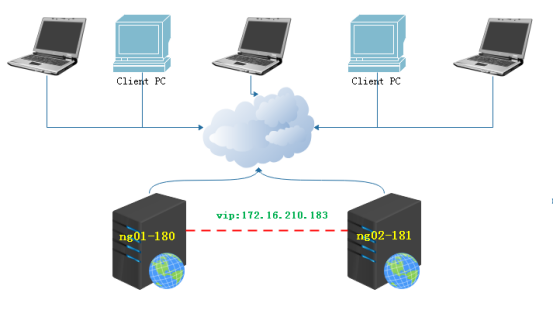

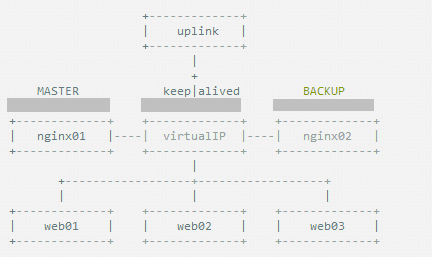

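Two-node high availability is usually implemented with a virtual IP (floating IP), built on the IP alias feature of Linux/Unix.

There are currently two approaches to two-node high availability:

1. Master-backup mode: the front end uses two servers, one master and one hot standby. Normally the master binds a public virtual IP and provides the load-balancing service while the hot standby sits idle. When the master fails, the hot standby takes over the master's public virtual IP and provides the load-balancing service. The drawback is that the hot standby is wasted for as long as the master stays healthy, so this scheme is not economical for sites with few servers.

2. Master-master mode: the front end uses two load balancers that back each other up. Both are active, and each binds its own public virtual IP and provides the load-balancing service at the same time. When one of them fails, the other takes over the failed server's public virtual IP (the healthy machine then carries all requests). This scheme is economical and fits the current architecture well.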

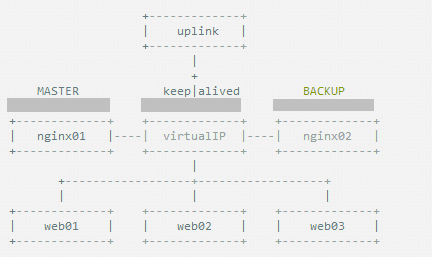

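Today I am again sharing my notes on building highly available load balancing in master-backup mode with Nginx + keepalived:

keepalived can be regarded as an implementation of the VRRP protocol on Linux. It has three main modules: core, check and vrrp.

The core module is the heart of keepalived; it starts and maintains the main process and loads and parses the global configuration file.

The check module performs health checks, covering the common check methods.

The vrrp module implements the VRRP protocol.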

1. Environment

Operating system: CentOS release 6.9 (Final) minimal

web1: 172.16.12.223

web2: 172.16.12.224

VIP: 172.16.12.226

svn: 172.16.12.225

2. Installation

Install nginx and keepalived (the installation is identical on web1 and web2).

2.1 Install dependencies

yum clean all

yum -y update

yum -y install gcc-c++ gd libxml2-devel libjpeg-devel libpng-devel net-snmp-devel wget telnet vim zip unzip

yum -y install curl-devel libxslt-devel pcre-devel libjpeg libpng libcurl4-openssl-dev

yum -y install libcurl-devel libcurl freetype-config freetype freetype-devel unixODBC libxslt

yum -y install gcc automake autoconf libtool openssl-devel

yum -y install perl-devel perl-ExtUtils-Embed

yum -y install cmake ncurses-devel.x86_64 openldap-devel.x86_64 lrzsz openssh-clients gcc-g77 bison

yum -y install libmcrypt libmcrypt-devel mhash mhash-devel bzip2 bzip2-devel

yum -y install ntpdate rsync svn patch iptables iptables-services

yum -y install libevent libevent-devel cyrus-sasl cyrus-sasl-devel

yum -y install gd-devel libmemcached-devel memcached git libssl-devel libyaml-devel auto make

yum -y groupinstall "Server Platform Development" "Development tools"

yum -y groupinstall "Development tools"

yum -y install gcc pcre-devel zlib-devel openssl-devel

2.2 Tuning after CentOS 6 is installed

# Disable SELinux

sed -i 's/SELINUX=enforcing/SELINUX=disabled/' /etc/selinux/config

grep SELINUX=disabled /etc/selinux/config

setenforce 0

getenforce

cat >> /etc/sysctl.conf << EOF

#

##custom

#

net.ipv4.ip_forward = 0

net.ipv4.conf.default.rp_filter = 1

net.ipv4.conf.default.accept_source_route = 0

kernel.sysrq = 0

kernel.core_uses_pid = 1

net.ipv4.tcp_syncookies = 1

kernel.msgmnb = 65536

kernel.msgmax = 65536

net.ipv4.tcp_max_tw_buckets = 6000

net.ipv4.tcp_sack = 1

net.ipv4.tcp_window_scaling = 1

net.ipv4.tcp_rmem = 4096 87380 4194304

net.ipv4.tcp_wmem = 4096 16384 4194304

net.core.wmem_default = 8388608

net.core.rmem_default = 8388608

net.core.rmem_max = 16777216

net.core.wmem_max = 16777216

net.core.netdev_max_backlog = 262144

net.core.somaxconn = 262144

net.ipv4.tcp_max_orphans = 3276800

net.ipv4.tcp_max_syn_backlog = 262144

net.ipv4.tcp_timestamps = 0

#net.ipv4.tcp_synack_retries = 1

net.ipv4.tcp_synack_retries = 2

#net.ipv4.tcp_syn_retries = 1

net.ipv4.tcp_syn_retries = 2

net.ipv4.tcp_tw_recycle = 1

net.ipv4.tcp_tw_reuse = 1

net.ipv4.tcp_mem = 94500000 915000000 927000000

#net.ipv4.tcp_fin_timeout = 1

net.ipv4.tcp_fin_timeout = 15

net.ipv4.tcp_keepalive_time = 30

net.ipv4.ip_local_port_range = 1024 65535

#net.ipv4.tcp_tw_len = 1

EOF

# Apply the settings

sysctl -p

cp /etc/security/limits.conf /etc/security/limits.conf.bak2017

cat >> /etc/security/limits.conf << EOF

#

###custom

#

* soft nofile 20480

* hard nofile 65535

* soft nproc 20480

* hard nproc 65535

EOF

2.3 Set the shell session timeout

Append the following line to /etc/profile (1800 seconds; by default the shell never times out):

cp /etc/profile /etc/profile.bak2017

cat >> /etc/profile << EOF

export TMOUT=1800

EOF

2.4 Download the packages

(Do this on both load balancers, master and slave.)

[root@web1 ~]# cd /usr/local/src/

[root@web1 src]# wget http://nginx.org/download/nginx-1.9.7.tar.gz

[root@web1 src]# wget http://www.keepalived.org/software/keepalived-1.3.2.tar.gz

2.5 Install nginx

(Do this on both load balancers, master and slave.)

[root@web1 src]# tar -zxvf nginx-1.9.7.tar.gz

[root@web1 src]# cd nginx-1.9.7

# Add the www user; -M skips creating a home directory and -s sets the login shell

[root@web1 nginx-1.9.7]# useradd www -M -s /sbin/nologin

[root@web1 nginx-1.9.7]# vim auto/cc/gcc

# Comment out the following line to disable the debug build (around line 179)

# debug

# CFLAGS="$CFLAGS -g"

[root@web1 nginx-1.9.7]# ./configure --prefix=/usr/local/nginx --user=www --group=www --with-http_ssl_module --with-http_flv_module --with-http_stub_status_module --with-http_gzip_static_module --with-pcre

[root@web1 nginx-1.9.7]# make && make install

2.6 Install keepalived

(Do this on both load balancers, master and slave.)

[root@web1 nginx-1.9.7]# cd /usr/local/src/

[root@web1 src]# tar -zvxf keepalived-1.3.2.tar.gz

[root@web1 src]# cd keepalived-1.3.2

[root@web1 keepalived-1.3.2]# ./configure

[root@web1 keepalived-1.3.2]# make && make install

[root@web1 keepalived-1.3.2]# cp /usr/local/src/keepalived-1.3.2/keepalived/etc/init.d/keepalived /etc/rc.d/init.d/

[root@web1 keepalived-1.3.2]# cp /usr/local/etc/sysconfig/keepalived /etc/sysconfig/

[root@web1 keepalived-1.3.2]# mkdir /etc/keepalived

[root@web1 keepalived-1.3.2]# cp /usr/local/etc/keepalived/keepalived.conf /etc/keepalived/

[root@web1 keepalived-1.3.2]# cp /usr/local/sbin/keepalived /usr/sbin/

[root@web1 keepalived-1.3.2]# echo "/usr/local/nginx/sbin/nginx" >> /etc/rc.local

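[root@web1 keepalived-1.3.2]# echo "/etc/init.d/keepalived start" >> /etc/rc.local

3. Configure the services

3.1 Disable SELinux

First disable SELinux and configure the firewall (do this on both load balancers, master and slave).

[root@web1 keepalived-1.3.2]# cd /root/

[root@web1 ~]# sed -i 's/SELINUX=enforcing/SELINUX=disabled/' /etc/selinux/config

[root@web1 ~]# grep SELINUX=disabled /etc/selinux/config

[root@web1 ~]# setenforce 0

3.2 Stop the firewall

[root@web1 ~]# /etc/init.d/iptables stop

3.3 Configure nginx

The nginx configuration is identical on the master and slave servers. The main work is the http block of /usr/local/nginx/conf/nginx.conf; you can also set up a vhosts directory for virtual hosts and put files such as LB.conf in it.

Here:

Multiple domain names are handled by virtual hosts (server blocks under http);

Different virtual directories of the same domain are handled by different location blocks in each server;

Back-end servers are declared in upstream blocks in vhosts/LB.conf and then referenced via proxy_pass in a server or location.

To implement the access scheme planned above, the configuration is as follows (the proxy_cache_path and proxy_temp_path lines enable nginx caching):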

[root@web1 ~]# vim /usr/local/nginx/conf/nginx.conf

user www;

worker_processes 8;

#error_log logs/error.log;

#error_log logs/error.log notice;

#error_log logs/error.log info;

#pid logs/nginx.pid;

events {

worker_connections 65535;

}

http {

include mime.types;

default_type application/octet-stream;

charset utf-8;

######

### set access log format

#######

log_format main '$remote_addr - $remote_user [$time_local]"$request"'

'$status $body_bytes_sent"$http_referer"'

'"$http_user_agent""$http_x_forwarded_for"';

#access_log logs/access.log main;

#######

## http setting

#######

sendfile on;

#tcp_nopush on;

tcp_nopush on;

tcp_nodelay on;

keepalive_timeout 65;

proxy_cache_path /var/www/cache levels=1:2 keys_zone=mycache:20m max_size=2048m inactive=60m;

proxy_temp_path /var/www/cache/tmp;

fastcgi_connect_timeout 3000;

fastcgi_send_timeout 3000;

fastcgi_read_timeout 3000;

fastcgi_buffer_size 256k;

fastcgi_buffers 8 256k;

fastcgi_busy_buffers_size 256k;

fastcgi_temp_file_write_size 256k;

fastcgi_intercept_errors on;

#keepalive_timeout 0;

#keepalive_timeout 65;

#

client_header_timeout 600s;

client_body_timeout 600s;

# client_max_body_size 50m;

client_max_body_size 100m; # maximum allowed size of a client request body

client_body_buffer_size 256k; # buffer size for reading the client request body

#gzip on;

gzip_min_length 1k;

gzip_buffers 4 16k;

gzip_http_version 1.1;

gzip_comp_level 9;

gzip_types text/plain application/x-javascript text/css application/xml text/javascript application/x-httpd-php;

gzip_vary on;

## includes vhosts

include vhosts/*.conf;

}

# Create the required directories

[root@web1 ~]# mkdir -p /usr/local/nginx/conf/vhosts

[root@web1 ~]# mkdir -p /var/www/cache

[root@web1 ~]# ulimit -n 65535

[root@web2 ~]# vim /usr/local/nginx/conf/vhosts/LB.conf

upstream LB-WWW {

ip_hash;

server 172.16.12.223:80 max_fails=3 fail_timeout=30s; # max_fails=3: number of failed attempts allowed (default 1)

server 172.16.12.224:80 max_fails=3 fail_timeout=30s; # fail_timeout=30s: how long requests stop going to this server after max_fails failures

server 172.16.12.225:80 max_fails=3 fail_timeout=30s;

}

upstream LB-OA {

ip_hash;

server 172.16.12.223:8080 max_fails=3 fail_timeout=30s;

server 172.16.12.224:8080 max_fails=3 fail_timeout=30s;

}

server {

listen 80;

server_name localhost;

access_log /usr/local/nginx/logs/dev-access.log main;

error_log /usr/local/nginx/logs/dev-error.log;

location /svn {

proxy_pass http://172.16.12.226/svn/;

proxy_redirect off ;

proxy_set_header Host $host;

proxy_set_header X-Real-IP $remote_addr;

proxy_set_header REMOTE-HOST $remote_addr;

proxy_set_header X-Forwarded-For $proxy_add_x_forwarded_for;

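proxy_connect_timeout 300; # timeout for establishing a connection to the back-end server

proxy_send_timeout 300; # timeout for transmitting a request to the back-end server

proxy_read_timeout 600; # timeout for reading a response from the back-end server once connected

proxy_buffer_size 256k; # buffer for the first part of the back-end response (the response headers)

proxy_buffers 4 256k; # number and size of the buffers used for a response on one connection

proxy_busy_buffers_size 256k; # limit on buffers that may be busy sending to the client

proxy_temp_file_write_size 256k; # amount of data written to a temporary file at a time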

proxy_next_upstream error timeout invalid_header http_500 http_503 http_404;

proxy_max_temp_file_size 128m;

proxy_cache mycache;

proxy_cache_valid 200 302 60m;

proxy_cache_valid 404 1m;

}

location /submin {

proxy_pass http://172.16.12.226/submin/;

proxy_redirect off ;

proxy_set_header Host $host;

proxy_set_header X-Real-IP $remote_addr;

proxy_set_header REMOTE-HOST $remote_addr;

proxy_set_header X-Forwarded-For $proxy_add_x_forwarded_for;

proxy_connect_timeout 300;

proxy_send_timeout 300;

proxy_read_timeout 600;

proxy_buffer_size 256k;

proxy_buffers 4 256k;

proxy_busy_buffers_size 256k;

proxy_temp_file_write_size 256k;

proxy_next_upstream error timeout invalid_header http_500 http_503 http_404;

proxy_max_temp_file_size 128m;

proxy_cache mycache;

proxy_cache_valid 200 302 60m;

proxy_cache_valid 404 1m;

}

}

server {

listen 80;

server_name localhost;

access_log /usr/local/nginx/logs/www-access.log main;

error_log /usr/local/nginx/logs/www-error.log;

location / {

proxy_pass http://LB-WWW;

proxy_redirect off ;

proxy_set_header Host $host;

proxy_set_header X-Real-IP $remote_addr;

proxy_set_header REMOTE-HOST $remote_addr;

proxy_set_header X-Forwarded-For $proxy_add_x_forwarded_for;

proxy_connect_timeout 300;

proxy_send_timeout 300;

proxy_read_timeout 600;

proxy_buffer_size 256k;

proxy_buffers 4 256k;

proxy_busy_buffers_size 256k;

proxy_temp_file_write_size 256k;

proxy_next_upstream error timeout invalid_header http_500 http_503 http_404;

proxy_max_temp_file_size 128m;

proxy_cache mycache;

proxy_cache_valid 200 302 60m;

proxy_cache_valid 404 1m;

}

}

server {

listen 80;

server_name localhost;

access_log /usr/local/nginx/logs/oa-access.log main;

error_log /usr/local/nginx/logs/oa-error.log;

location / {

proxy_pass http://LB-OA;

proxy_redirect off ;

proxy_set_header Host $host;

proxy_set_header X-Real-IP $remote_addr;

proxy_set_header REMOTE-HOST $remote_addr;

proxy_set_header X-Forwarded-For $proxy_add_x_forwarded_for;

proxy_connect_timeout 300;

proxy_send_timeout 300;

proxy_read_timeout 600;

proxy_buffer_size 256k;

proxy_buffers 4 256k;

proxy_busy_buffers_size 256k;

proxy_temp_file_write_size 256k;

proxy_next_upstream error timeout invalid_header http_500 http_503 http_404;

proxy_max_temp_file_size 128m;

proxy_cache mycache;

proxy_cache_valid 200 302 60m;

proxy_cache_valid 404 1m;

}

}

3.4 Preparing for verification

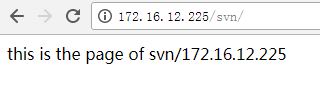

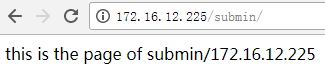

3.4.1 Run on the svn server

cat >/usr/local/nginx/conf/vhosts/svn.conf <<EOF

server {listen 80;

server_name svn 172.16.12.225;

access_log /usr/local/nginx/logs/svn-access.log main;

error_log /usr/local/nginx/logs/svn-error.log;

location / {root /var/www/html;

index index.html index.php index.htm;

}

}

EOF

[root@svn ~]# cat /usr/local/nginx/conf/vhosts/svn.conf

server {listen 80;

server_name svn 172.16.12.225;

access_log /usr/local/nginx/logs/svn-access.log main;

error_log /usr/local/nginx/logs/svn-error.log;

location / {root /var/www/html;

index index.html index.php index.htm;

}

}

[root@svn ~]#

[root@svn ~]# mkdir -p /var/www/html

[root@svn ~]# mkdir -p /var/www/html/submin

[root@svn ~]# mkdir -p /var/www/html/svn

[root@svn ~]# cat /var/www/html/svn/index.html

this is the page of svn/172.16.12.225

[root@svn ~]# cat /var/www/html/submin/index.html

this is the page of submin/172.16.12.225

[root@svn ~]# chown -R www.www /var/www/html/

[root@svn ~]# chmod -R 755 /var/www/html/

[root@svn ~]# cat /etc/hosts

127.0.0.1 localhost localhost.localdomain localhost4 localhost4.localdomain4

::1 localhost localhost.localdomain localhost6 localhost6.localdomain6

172.16.12.223 web1

172.16.12.224 web2

172.16.12.225 svn

[root@svn ~]# tail -4 /etc/rc.local

touch /var/lock/subsys/local

/etc/init.d/iptables stop

/usr/local/nginx/sbin/nginx

/etc/init.d/keepalived start

# Start nginx

[root@svn ~]# /usr/local/nginx/sbin/nginx

# Request the pages

[root@svn local]# curl http://172.16.12.225/submin/

this is the page of submin/172.16.12.225

[root@svn local]# curl http://172.16.12.225/svn/

this is the page of svn/172.16.12.225

3.4.2 Run on web1

[root@web1 ~]# curl http://172.16.12.225/submin/

this is the page of submin/172.16.12.225

[root@web1 ~]# curl http://172.16.12.225/svn/

this is the page of svn/172.16.12.225

cat >/usr/local/nginx/conf/vhosts/web.conf <<EOF

server {listen 80;

server_name web 172.16.12.223;

access_log /usr/local/nginx/logs/web-access.log main;

error_log /usr/local/nginx/logs/web-error.log;

location / {root /var/www/html;

index index.html index.php index.htm;

}

}

EOF

[root@web1 ~]# cat /usr/local/nginx/conf/vhosts/web.conf

server {listen 80;

server_name web 172.16.12.223;

access_log /usr/local/nginx/logs/web-access.log main;

error_log /usr/local/nginx/logs/web-error.log;

location / {root /var/www/html;

index index.html index.php index.htm;

}

}

[root@web1 ~]# mkdir -p /var/www/html

[root@web1 ~]# mkdir -p /var/www/html/web

[root@web1 ~]# cat /var/www/html/web/index.html

this is the page of web/172.16.12.223

[root@web1 ~]# chown -R www.www /var/www/html/

[root@web1 ~]# chmod -R 755 /var/www/html/

[root@web1 ~]# cat /etc/hosts

127.0.0.1 localhost localhost.localdomain localhost4 localhost4.localdomain4

::1 localhost localhost.localdomain localhost6 localhost6.localdomain6

172.16.12.223 web1

172.16.12.224 web2

172.16.12.225 svn

[root@web1 ~]# tail -4 /etc/rc.local

touch /var/lock/subsys/local

/etc/init.d/iptables stop

/usr/local/nginx/sbin/nginx

/etc/init.d/keepalived start

[root@web1 ~]# /usr/local/nginx/sbin/nginx

[root@web1 ~]# curl http://172.16.12.223/web/

this is the page of web/172.16.12.223

3.4.3 Run on web2

[root@web2 ~]# curl http://172.16.12.225/submin/

this is the page of submin/172.16.12.225

[root@web2 ~]# curl http://172.16.12.225/svn/

this is the page of svn/172.16.12.225

cat >/usr/local/nginx/conf/vhosts/web.conf <<EOF

server {listen 80;

server_name web 172.16.12.224;

access_log /usr/local/nginx/logs/web-access.log main;

error_log /usr/local/nginx/logs/web-error.log;

location / {root /var/www/html;

index index.html index.php index.htm;

}

}

EOF

[root@web2 ~]# cat /usr/local/nginx/conf/vhosts/web.conf

server {listen 80;

server_name web 172.16.12.224;

access_log /usr/local/nginx/logs/web-access.log main;

error_log /usr/local/nginx/logs/web-error.log;

location / {root /var/www/html;

index index.html index.php index.htm;

}

}

[root@web2 ~]#

[root@web2 ~]# mkdir -p /var/www/html

[root@web2 ~]# mkdir -p /var/www/html/web

[root@web2 ~]# cat /var/www/html/web/index.html

this is the page of web/172.16.12.224

[root@web2 ~]# chown -R www.www /var/www/html/

[root@web2 ~]# chmod -R 755 /var/www/html/

[root@web2 ~]# cat /etc/hosts

127.0.0.1 localhost localhost.localdomain localhost4 localhost4.localdomain4

::1 localhost localhost.localdomain localhost6 localhost6.localdomain6

172.16.12.223 web1

172.16.12.224 web2

172.16.12.225 svn

[root@web2 ~]# tail -4 /etc/rc.local

touch /var/lock/subsys/local

/etc/init.d/iptables stop

/usr/local/nginx/sbin/nginx

/etc/init.d/keepalived start

# Start nginx

[root@web2 ~]# /usr/local/nginx/sbin/nginx

# Request the pages

[root@web2 local]# curl http://172.16.12.224/web/

this is the page of web/172.16.12.224

3.4.4 Browser test

4. keepalived configuration

4.1 On web1

[root@web1 ~]# cp /etc/keepalived/keepalived.conf /etc/keepalived/keepalived.conf.bak

[root@web1 ~]# vim /etc/keepalived/keepalived.conf

! Configuration File for keepalived

# Global definitions

global_defs {

# notification_email { # mailboxes that keepalived notifies when an event (such as a failover) occurs

# ops@wangshibo.cn # alert addresses, one per line; requires the local sendmail service

# tech@wangshibo.cn

# }

#

# notification_email_from ops@wangshibo.cn # sender address for notification mail

# smtp_server 127.0.0.1 # SMTP server used to send mail

# smtp_connect_timeout 30 # timeout for connecting to the SMTP server

router_id master-node # identifier of this keepalived node, usually the hostname; shown in the subject of notification mail

}

vrrp_script chk_http_port { # checks whether nginx is running; many methods are possible (process check, script, ...)

script "/opt/chk_nginx.sh" # here a script does the check

interval 2 # run the script every 2 seconds

weight -5 # priority change caused by the script result: on failure (non-zero exit) the priority drops by 5

fall 2 # 2 consecutive failures are required before the check counts as failed and weight is applied (1-255)

rise 1 # 1 success counts as recovered; the priority is not modified

}

vrrp_instance VI_1 { # within one virtual_router_id the node with the highest priority (0-255) becomes master and holds the VIP; when it fails, the next highest takes over

state MASTER # initial role of this node: MASTER for the primary, BACKUP for the standby. This is only the initial state; the actual role is decided by a priority election. If this node is set to MASTER but has a lower priority than its peer, the peer sees the lower priority in this node's advertisements and preempts the MASTER role

interface eth1 # network interface the instance is bound to and monitored on; the virtual IP is added to an existing interface

# mcast_src_ip 103.110.98.14 # source IP for multicast VRRP advertisements; choose a stable interface, since it plays the role of the heartbeat link; if unset, the IP of the interface given above is used

virtual_router_id 226 # virtual router ID, a number unique to each VRRP instance; MASTER and BACKUP in the same vrrp_instance must use the same value

priority 101 # higher number means higher priority; within one vrrp_instance the MASTER must have a higher priority than the BACKUP

advert_int 1 # interval in seconds between the VRRP advertisements exchanged by MASTER and BACKUP

authentication { # authentication type and password; must match on both nodes

auth_type PASS # VRRP authentication type: PASS or AH

auth_pass 1111 # VRRP password; MASTER and BACKUP in the same vrrp_instance must share it to communicate

}

virtual_ipaddress { # VRRP virtual address(es); list additional VIPs one per line

172.16.12.226

}

track_script { # scripts to track. Note: this block must not be written directly after the vrrp_script block (a pitfall hit during testing), otherwise nginx monitoring does not work!

chk_http_port # name defined in the vrrp_script section; it runs periodically to adjust the priority and eventually trigger failover

}

}

4.2 On web2

[root@web2 ~]# cp /etc/keepalived/keepalived.conf /etc/keepalived/keepalived.conf.bak

[root@web2 ~]# vim /etc/keepalived/keepalived.conf

! Configuration File for keepalived

global_defs {

# notification_email {

# ops@wangshibo.cn

# tech@wangshibo.cn

# }

#

# notification_email_from ops@wangshibo.cn

# smtp_server 127.0.0.1

# smtp_connect_timeout 30

router_id slave-node

}

vrrp_script chk_http_port {

script "/opt/chk_nginx.sh"

interval 2

weight -5

fall 2

rise 1

}

vrrp_instance VI_1 {

state BACKUP

interface eth1

# mcast_src_ip 103.110.98.24

virtual_router_id 226

priority 99

advert_int 1

authentication {

auth_type PASS

auth_pass 1111

}

virtual_ipaddress {

172.16.12.226

}

track_script {

chk_http_port

}

}

4.3 Monitoring notes

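Having keepalived monitor nginx:

1) With the configuration above, if keepalived on the master stops, the slave automatically takes over the VIP and serves traffic;

once keepalived on the master recovers, it takes the VIP back. That alone is not what we need: we need automatic failover when nginx itself stops serving.

2) keepalived supports monitoring scripts. A script can watch the state of nginx, run a series of recovery steps when it is unhealthy, and, if nginx still cannot be recovered, kill keepalived so that the slave can take over.

How to monitor nginx

The simplest approach is to watch the nginx process; a more reliable one is to check the nginx port; the most reliable is to check that several URLs actually return pages.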
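The URL-based check described above could look roughly like the sketch below. It is only an illustration under stated assumptions: the function names `fetch_status` and `check_urls` are invented here and do not come from keepalived or nginx, and it assumes `curl` is installed. The check reports health through its return status (0 healthy, 1 unhealthy), which is the form a `vrrp_script` can act on.

```shell
#!/bin/bash
# Sketch only: fetch_status and check_urls are hypothetical helper names.

# Print the HTTP status code for one URL ("000" if the request fails).
fetch_status() {
    curl -s -o /dev/null -m 2 -w '%{http_code}' "$1"
}

# Return 0 only if every URL given as an argument answers with HTTP 200.
check_urls() {
    local url
    for url in "$@"; do
        if [ "$(fetch_status "$url")" != "200" ]; then
            return 1
        fi
    done
    return 0
}
```

A check script built on this would end by calling `check_urls` with the pages to probe (for example the local test pages used in this article) and exiting with its result. The 2-second curl timeout is an arbitrary choice for the sketch and should stay below the `interval` configured for the script.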

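Note: the script in the vrrp_script section of keepalived.conf is generally written in one of two ways:

1) The script's exit status changes the priority; keepalived keeps sending advertisements, and the backup compares priorities to decide. This is the approach of monitoring the nginx process directly.

2) The script detects a fault and shuts keepalived down outright; the backup stops receiving advertisements and takes over the IP. This is the approach of checking the nginx port.

Of the script settings discussed here, "killall -0 nginx" belongs to the first kind and "/opt/chk_nginx.sh" to the second. I prefer a shell script that exits 1 on a fault and exits 0 when healthy, leaving keepalived to decide by election, based on the dynamically adjusted vrrp_instance priority, whether to take over the VIP:

If the script exits 0 and weight is greater than 0, the priority is increased accordingly

If the script exits non-zero and weight is less than 0, the priority is decreased accordingly

In all other cases the priority stays at the configured priority value.

Tips:

The priority does not keep rising or falling with each run

Several check scripts can be written, each with its own weight (just list them in the configuration)

Whether raised or lowered, the final priority stays within [1,254]; it never drops to 0 or below, nor reaches 255 or above

Configuring nopreempt in a vrrp_instance stops a recovered node from taking the VIP back even if its priority is higher, which avoids needless switchovers; note that keepalived only honours nopreempt when the instance's initial state is BACKUP

The above makes it possible to use a script to check a service process and adjust the priority dynamically to drive failover.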
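To make the priority rules above concrete, here is a small sketch that computes the priority a node would advertise. The function name `effective_priority` is made up for this illustration; it only mirrors the rules as stated in this article and is not keepalived code.

```shell
#!/bin/bash
# Sketch only: effective_priority is a hypothetical helper, not part of keepalived.
# Usage: effective_priority BASE_PRIORITY WEIGHT SCRIPT_EXIT_STATUS
effective_priority() {
    local base=$1 weight=$2 status=$3
    local prio=$base

    if [ "$status" -eq 0 ] && [ "$weight" -gt 0 ]; then
        prio=$((base + weight))   # check passed, positive weight: raise
    elif [ "$status" -ne 0 ] && [ "$weight" -lt 0 ]; then
        prio=$((base + weight))   # check failed, negative weight: lower
    fi

    # Keep the result inside the [1,254] range described above.
    if [ "$prio" -lt 1 ]; then prio=1; fi
    if [ "$prio" -gt 254 ]; then prio=254; fi
    echo "$prio"
}

# web1 in this article: priority 101, weight -5, check script failed
effective_priority 101 -5 1   # prints 96
```

With the values used in this article, a failed check on web1 gives 101 - 5 = 96, which is lower than web2's priority of 99, so web2 wins the election and takes over the VIP.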

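Also: the default keepalived.conf contains virtual_server and real_server sections; they are meant for LVS and are not needed here.

How to attempt recovery

keepalived only checks whether keepalived on this node and on its peer is alive and moves the VIP accordingly; if nginx on this node fails, the VIP does not move.

So a script checks whether the local nginx is healthy and restarts it if it is not. It waits 2 seconds and checks again; if nginx is still down it gives up and stops keepalived, at which point the other host takes over the VIP.

A monitoring script follows easily from this strategy. The script only works while the keepalived service is running! If keepalived has been stopped first, nginx is no longer restarted automatically after it goes down.

The script checks whether nginx is running, tries to restart it when the nginx process is missing, and stops keepalived if the restart fails so that another machine can take over.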

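4.4 Monitoring script

The monitoring script is as follows (it must exist on both master and slave):

[root@web1 ~]# cat /opt/chk_nginx.sh

#!/bin/bash

# Count running nginx processes

counter=$(ps -C nginx --no-heading|wc -l)

if [ "${counter}" = "0" ]; then

# nginx is down: try to start it, then check again

/usr/local/nginx/sbin/nginx

sleep 2

counter=$(ps -C nginx --no-heading|wc -l)

if [ "${counter}" = "0" ]; then

# Still down: stop keepalived so the peer takes over the VIP

/etc/init.d/keepalived stop

fi

fi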

[root@web1 ~]#

[root@web1 ~]# chmod 755 /opt/chk_nginx.sh

[root@web1 ~]# sh /opt/chk_nginx.sh

[root@web2 ~]# cat /opt/chk_nginx.sh

#!/bin/bash

counter=$(ps -C nginx --no-heading|wc -l)

if [ "${counter}" = "0" ]; then

/usr/local/nginx/sbin/nginx

sleep 2

counter=$(ps -C nginx --no-heading|wc -l)

if [ "${counter}" = "0" ]; then

/etc/init.d/keepalived stop

fi

fi

[root@web2 ~]#

[root@web2 ~]# chmod 755 /opt/chk_nginx.sh

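[root@web2 ~]# sh /opt/chk_nginx.sh

4.5 Points to consider

Points to consider with this architecture

1) While the master is up, the master holds the VIP and nginx runs on the master

2) When the master goes down, the slave takes over the VIP and nginx serves from the slave

3) If nginx on the master dies, it is restarted automatically; if the restart fails, keepalived is stopped automatically, so the VIP also moves to the slave.

4) Check the health of the back-end servers

5) nginx runs on both master and slave; whichever node's keepalived stops, the VIP floats to the node where keepalived is still running;

for the VIP to move when nginx itself dies, a script or shell commands in the configuration file must handle it. (When nginx goes down it is started again automatically; if that fails, keepalived is forcibly stopped, which moves the VIP to the other machine.)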

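5. Final verification

Final verification (resolve all configured back-end application domains to the VIP): stop keepalived or nginx on the master and the VIP automatically floats to the slave.

Verifying a keepalived failure:

1) Restart nginx and keepalived on the master and then the slave (stopping any running instances first), and make sure both services are running: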

[root@web2 ~]# /usr/local/nginx/sbin/nginx -s stop

nginx: [warn] conflicting server name "localhost" on 0.0.0.0:80, ignored

nginx: [warn] conflicting server name "localhost" on 0.0.0.0:80, ignored

[root@web2 ~]# /etc/init.d/keepalived stop

Stopping keepalived: [FAILED]

[root@web2 ~]#

[root@web1 ~]# /usr/local/nginx/sbin/nginx -s stop

nginx: [warn] conflicting server name "localhost" on 0.0.0.0:80, ignored

nginx: [warn] conflicting server name "localhost" on 0.0.0.0:80, ignored

[root@web1 ~]# /etc/init.d/keepalived stop

Stopping keepalived: [FAILED]

[root@web1 ~]#

[root@web1 ~]# /usr/local/nginx/sbin/nginx

nginx: [warn] conflicting server name "localhost" on 0.0.0.0:80, ignored

nginx: [warn] conflicting server name "localhost" on 0.0.0.0:80, ignored

[root@web1 ~]# /etc/init.d/keepalived start

Starting keepalived: [OK]

2) On the master, check that the virtual IP is bound:

[root@web1 ~]# ip add

1: lo: <LOOPBACK,UP,LOWER_UP> mtu 65536 qdisc noqueue state UNKNOWN

link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00

inet 127.0.0.1/8 scope host lo

inet6 ::1/128 scope host

valid_lft forever preferred_lft forever

2: eth0: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc pfifo_fast state UP qlen 1000

link/ether 08:00:27:ca:99:56 brd ff:ff:ff:ff:ff:ff

inet 10.0.2.223/24 brd 10.0.2.255 scope global eth0

inet 172.16.12.226/32 scope global eth0

inet6 fe80::a00:27ff:feca:9956/64 scope link

valid_lft forever preferred_lft forever

3: eth1: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc pfifo_fast state UP qlen 1000

link/ether 08:00:27:b3:a9:36 brd ff:ff:ff:ff:ff:ff

inet 172.16.12.223/24 brd 172.16.12.255 scope global eth1

inet6 fe80::a00:27ff:feb3:a936/64 scope link

valid_lft forever preferred_lft forever
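The manual check above can also be scripted. The sketch below is only an illustration; `has_vip` is a hypothetical helper name, and it simply looks for the address in `ip addr` style output passed on standard input.

```shell
#!/bin/bash
# Sketch only: has_vip is a hypothetical helper name.
# Reads `ip addr` output on stdin and succeeds if the given IPv4 address is bound.
has_vip() {
    grep -Fq "inet $1/"
}
```

On either node it could be used as `ip addr | has_vip 172.16.12.226 && echo "VIP is bound here"`, which makes it easy to confirm which machine currently holds the VIP before and after a failover.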

[root@web2 ~]# /usr/local/nginx/sbin/nginx

nginx: [warn] conflicting server name "localhost" on 0.0.0.0:80, ignored

nginx: [warn] conflicting server name "localhost" on 0.0.0.0:80, ignored

[root@web2 ~]# /etc/init.d/keepalived start

Starting keepalived: [OK]

[root@web2 ~]# ip add

1: lo: <LOOPBACK,UP,LOWER_UP> mtu 65536 qdisc noqueue state UNKNOWN

link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00

inet 127.0.0.1/8 scope host lo

inet6 ::1/128 scope host

valid_lft forever preferred_lft forever

2: eth0: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc pfifo_fast state UP qlen 1000

link/ether 08:00:27:9a:0b:97 brd ff:ff:ff:ff:ff:ff

inet 10.0.2.224/24 brd 10.0.2.255 scope global eth0

inet6 fe80::a00:27ff:fe9a:b97/64 scope link

valid_lft forever preferred_lft forever

3: eth1: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc pfifo_fast state UP qlen 1000

link/ether 08:00:27:63:26:1a brd ff:ff:ff:ff:ff:ff

inet 172.16.12.224/24 brd 172.16.12.255 scope global eth1

inet6 fe80::a00:27ff:fe63:261a/64 scope link

valid_lft forever preferred_lft forever

[root@web2 ~]#

5.1 Update the site configuration

[root@web1 ~]# cat /usr/local/nginx/conf/vhosts/web.conf

server {listen 80;

server_name localhost 172.16.12.223 172.16.12.226;

access_log /usr/local/nginx/logs/web-access.log main;

error_log /usr/local/nginx/logs/web-error.log;

location / {root /var/www/html;

index index.html index.php index.htm;

}

}

[root@web1 ~]#

[root@web2 ~]# cat /usr/local/nginx/conf/vhosts/web.conf

server {listen 80;

server_name localhost 172.16.12.224 172.16.12.226;

access_log /usr/local/nginx/logs/web-access.log main;

error_log /usr/local/nginx/logs/web-error.log;

location / {root /var/www/html;

index index.html index.php index.htm;

}

}

[root@web2 ~]#

5.2 Access check

5.3 Stop keepalived on the master

[root@web1 ~]# /etc/init.d/keepalived stop

Stopping keepalived: [OK]

[root@web1 ~]#

[root@web1 ~]# tail -f /var/log/messages

Dec 14 13:32:12 web1 Keepalived_vrrp[7959]: Sending gratuitous ARP on eth0 for 172.16.12.226

Dec 14 13:32:12 web1 Keepalived_vrrp[7959]: Sending gratuitous ARP on eth0 for 172.16.12.226

Dec 14 13:32:12 web1 Keepalived_vrrp[7959]: Sending gratuitous ARP on eth0 for 172.16.12.226

Dec 14 13:32:12 web1 Keepalived_healthcheckers[7958]: Netlink reflector reports IP 172.16.12.226 added

Dec 14 13:32:17 web1 Keepalived_vrrp[7959]: Sending gratuitous ARP on eth0 for 172.16.12.226

Dec 14 13:32:17 web1 Keepalived_vrrp[7959]: VRRP_Instance(VI_1) Sending/queueing gratuitous ARPs on eth0 for 172.16.12.226

Dec 14 13:32:17 web1 Keepalived_vrrp[7959]: Sending gratuitous ARP on eth0 for 172.16.12.226

Dec 14 13:32:17 web1 Keepalived_vrrp[7959]: Sending gratuitous ARP on eth0 for 172.16.12.226

Dec 14 13:32:17 web1 Keepalived_vrrp[7959]: Sending gratuitous ARP on eth0 for 172.16.12.226

Dec 14 13:32:17 web1 Keepalived_vrrp[7959]: Sending gratuitous ARP on eth0 for 172.16.12.226

Dec 14 13:43:51 web1 Keepalived[7956]: Stopping

Dec 14 13:43:51 web1 Keepalived_vrrp[7959]: VRRP_Instance(VI_1) sent 0 priority

Dec 14 13:43:51 web1 Keepalived_vrrp[7959]: VRRP_Instance(VI_1) removing protocol VIPs.

Dec 14 13:43:51 web1 Keepalived_healthcheckers[7958]: Netlink reflector reports IP 172.16.12.226 removed

Dec 14 13:43:51 web1 Keepalived_healthcheckers[7958]: Stopped

Dec 14 13:43:52 web1 Keepalived_vrrp[7959]: Stopped

Dec 14 13:43:52 web1 Keepalived[7956]: Stopped Keepalived v1.3.2 (12/14,2017)

[root@web1 ~]# ip add

1: lo: <LOOPBACK,UP,LOWER_UP> mtu 65536 qdisc noqueue state UNKNOWN

link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00

inet 127.0.0.1/8 scope host lo

inet6 ::1/128 scope host

valid_lft forever preferred_lft forever

2: eth0: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc pfifo_fast state UP qlen 1000

link/ether 08:00:27:ca:99:56 brd ff:ff:ff:ff:ff:ff

inet 10.0.2.223/24 brd 10.0.2.255 scope global eth0

inet6 fe80::a00:27ff:feca:9956/64 scope link

valid_lft forever preferred_lft forever

3: eth1: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc pfifo_fast state UP qlen 1000

link/ether 08:00:27:b3:a9:36 brd ff:ff:ff:ff:ff:ff

inet 172.16.12.223/24 brd 172.16.12.255 scope global eth1

inet6 fe80::a00:27ff:feb3:a936/64 scope link

valid_lft forever preferred_lft forever

[root@web1 ~]#

5.4 Check the switchover on web2

[root@web2 ~]# ip add

1: lo: <LOOPBACK,UP,LOWER_UP> mtu 65536 qdisc noqueue state UNKNOWN

link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00

inet 127.0.0.1/8 scope host lo

inet6 ::1/128 scope host

valid_lft forever preferred_lft forever

2: eth0: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc pfifo_fast state UP qlen 1000

link/ether 08:00:27:9a:0b:97 brd ff:ff:ff:ff:ff:ff

inet 10.0.2.224/24 brd 10.0.2.255 scope global eth0

inet 172.16.12.226/32 scope global eth0

inet6 fe80::a00:27ff:fe9a:b97/64 scope link

valid_lft forever preferred_lft forever

3: eth1: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc pfifo_fast state UP qlen 1000

link/ether 08:00:27:63:26:1a brd ff:ff:ff:ff:ff:ff

inet 172.16.12.224/24 brd 172.16.12.255 scope global eth1

inet6 fe80::a00:27ff:fe63:261a/64 scope link

valid_lft forever preferred_lft forever

[root@web2 ~]#

[root@web2 ~]# tail -f /var/log/messages

Dec 14 13:47:33 web2 Keepalived_vrrp[8187]: Sending gratuitous ARP on eth0 for 172.16.12.226

Dec 14 13:47:33 web2 Keepalived_vrrp[8187]: Sending gratuitous ARP on eth0 for 172.16.12.226

Dec 14 13:47:33 web2 Keepalived_vrrp[8187]: Sending gratuitous ARP on eth0 for 172.16.12.226

Dec 14 13:47:33 web2 Keepalived_healthcheckers[8186]: Netlink reflector reports IP 172.16.12.226 added

Dec 14 13:47:38 web2 Keepalived_vrrp[8187]: Sending gratuitous ARP on eth0 for 172.16.12.226

Dec 14 13:47:38 web2 Keepalived_vrrp[8187]: VRRP_Instance(VI_1) Sending/queueing gratuitous ARPs on eth0 for 172.16.12.226

Dec 14 13:47:38 web2 Keepalived_vrrp[8187]: Sending gratuitous ARP on eth0 for 172.16.12.226

Dec 14 13:47:38 web2 Keepalived_vrrp[8187]: Sending gratuitous ARP on eth0 for 172.16.12.226

Dec 14 13:47:38 web2 Keepalived_vrrp[8187]: Sending gratuitous ARP on eth0 for 172.16.12.226

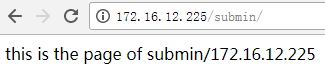

Dec 14 13:47:38 web2 Keepalived_vrrp[8187]: Sending gratuitous ARP on eth0 for 172.16.12.2265.5、访问网页验证

切换前的网页:

切换后的网页:

说明已经切换完毕。

Permanent link to this article: http://www.linuxidc.com/Linux/2017-12/149670.htm

双机高可用一般是通过虚拟 IP(漂移 IP) 方法来实现的,基于 Linux/Unix 的IP别名技术。

双机高可用方法目前分为两种:

1.双机主从模式:即前端使用两台服务器,一台主服务器和一台热备服务器,正常情况下,主服务器绑定一个公网虚拟IP,提供负载均衡服务,热备服务器处于空闲状态;当主服务器发生故障时,热备服务器接管主服务器的公网虚拟IP,提供负载均衡服务;但是热备服务器在主机器不出现故障的时候,永远处于浪费状态,对于服务器不多的网站,该方案不经济实惠。

2.双机主主模式:即前端使用两台负载均衡服务器,互为主备,且都处于活动状态,同事各自绑定一个公网虚拟 IP,提供负载均衡服务;当其中一台发生故障时,另一台接管发生故障服务器的公网虚拟IP( 这时由非故障机器一台负担所有的请求)。这种方案,经济实惠,非常适合于当前架构环境。

今天再次分享下 Nginx+keeplived 实现高可用负载均衡的主从模式的操作记录:

keeplived可以认为是 VRRP 协议在 Linux 上的实现,主要有三个模块,分别是 core,check 和vrrp。

core模块为 keeplived 的核心,负责主进程的启动、维护以及全局配置文件的加载和解析。

check负责健康检查,包括创建的各种检查方式。

vrrp模块是来实现 VRRP 协议的。

一、环境说明

操作系统:CentOS release 6.9 (Final) minimal

web1:172.16.12.223

web2:172.16.12.224

vip:svn:172.16.12.226

svn:172.16.12.225

二、环境安装

安装 nginx 和keeplived服务 (web1 和web2两台服务器上的安装完全一样)。

2.1、安装依赖

yum clean all

yum -y update

yum -y install gcc-c++ gd libxml2-devel libjpeg-devel libpng-devel net-snmp-devel wget telnet vim zip unzip

yum -y install curl-devel libxslt-devel pcre-devel libjpeg libpng libcurl4-openssl-dev

yum -y install libcurl-devel libcurl freetype-config freetype freetype-devel unixODBC libxslt

yum -y install gcc automake autoconf libtool openssl-devel

yum -y install perl-devel perl-ExtUtils-Embed

yum -y install cmake ncurses-devel.x86_64 openldap-devel.x86_64 lrzsz openssh-clients gcc-g77 bison

yum -y install libmcrypt libmcrypt-devel mhash mhash-devel bzip2 bzip2-devel

yum -y install ntpdate rsync svn patch iptables iptables-services

yum -y install libevent libevent-devel cyrus-sasl cyrus-sasl-devel

yum -y install gd-devel libmemcached-devel memcached git libssl-devel libyaml-devel auto make

yum -y groupinstall "Server Platform Development" "Development tools"

yum -y groupinstall "Development tools"

yum -y install gcc pcre-devel zlib-devel openssl-devel

2.2. Tuning CentOS 6 after installation

# Disable SELinux

sed -i 's/^SELINUX=enforcing/SELINUX=disabled/' /etc/selinux/config

grep SELINUX=disabled /etc/selinux/config

setenforce 0

getenforce
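The key in /etc/selinux/config is spelled `SELINUX=` in upper case, and both `sed` and `grep` match case-sensitively, so the replacement text has to keep that spelling for the follow-up `grep` to find it. A minimal sketch that performs the edit and verifies it in one step; the function name is an illustrative assumption, and unlike the command above it also rewrites `SELINUX=permissive`.

```shell
# disable_selinux_config FILE
# Sets SELINUX=disabled in FILE (normally /etc/selinux/config) and
# succeeds only if the file really contains that line afterwards.
# SELINUXTYPE=... is left alone because the pattern requires "SELINUX=".
disable_selinux_config() {
    conf=$1
    sed -i 's/^SELINUX=.*/SELINUX=disabled/' "$conf" &&
        grep -q '^SELINUX=disabled$' "$conf"
}
```

On the servers this would be called as `disable_selinux_config /etc/selinux/config`; the change takes effect at the next boot, which is why `setenforce 0` is still needed for the running system.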

cat >> /etc/sysctl.conf << EOF

#

##custom

#

net.ipv4.ip_forward = 0

net.ipv4.conf.default.rp_filter = 1

net.ipv4.conf.default.accept_source_route = 0

kernel.sysrq = 0

kernel.core_uses_pid = 1

net.ipv4.tcp_syncookies = 1

kernel.msgmnb = 65536

kernel.msgmax = 65536

net.ipv4.tcp_max_tw_buckets = 6000

net.ipv4.tcp_sack = 1

net.ipv4.tcp_window_scaling = 1

net.ipv4.tcp_rmem = 4096 87380 4194304

net.ipv4.tcp_wmem = 4096 16384 4194304

net.core.wmem_default = 8388608

net.core.rmem_default = 8388608

net.core.rmem_max = 16777216

net.core.wmem_max = 16777216

net.core.netdev_max_backlog = 262144

net.core.somaxconn = 262144

net.ipv4.tcp_max_orphans = 3276800

net.ipv4.tcp_max_syn_backlog = 262144

net.ipv4.tcp_timestamps = 0

#net.ipv4.tcp_synack_retries = 1

net.ipv4.tcp_synack_retries = 2

#net.ipv4.tcp_syn_retries = 1

net.ipv4.tcp_syn_retries = 2

net.ipv4.tcp_tw_recycle = 1

net.ipv4.tcp_tw_reuse = 1

net.ipv4.tcp_mem = 94500000 915000000 927000000

#net.ipv4.tcp_fin_timeout = 1

net.ipv4.tcp_fin_timeout = 15

net.ipv4.tcp_keepalive_time = 30

net.ipv4.ip_local_port_range = 1024 65535

#net.ipv4.tcp_tw_len = 1

EOF

# Apply the settings

sysctl -p
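Because the block above is appended to an existing /etc/sysctl.conf, a key can end up in the file more than once. `sysctl -p` applies the lines in order, so the last uncommented assignment is the one that takes effect. A small sketch for checking which value that is; `sysctl_conf_value` is a hypothetical helper, not part of the original procedure.

```shell
# sysctl_conf_value FILE KEY
# Prints the value of the last uncommented "KEY = VALUE" line in FILE.
# Fails (exit status 1) if KEY is not set in FILE.
sysctl_conf_value() {
    awk -F= -v key="$2" '
        /^[ \t]*#/ { next }                  # skip comment lines
        {
            k = $1; v = $2
            gsub(/^[ \t]+|[ \t]+$/, "", k)   # trim the key
            gsub(/^[ \t]+|[ \t]+$/, "", v)   # trim the value
            if (k == key) value = v          # later lines override earlier ones
        }
        END { if (value != "") print value; else exit 1 }
    ' "$1"
}
```

For example, `sysctl_conf_value /etc/sysctl.conf net.ipv4.tcp_fin_timeout` can be compared with the live value from `sysctl -n net.ipv4.tcp_fin_timeout`.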

cp /etc/security/limits.conf /etc/security/limits.conf.bak2017

cat >> /etc/security/limits.conf << EOF

#

###custom

#

* soft nofile 20480

* hard nofile 65535

* soft nproc 20480

* hard nproc 65535

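EOF

2.3. Set the shell idle timeout

Append the following line to /etc/profile (TMOUT is in seconds, 1800 here; by default the shell never times out). Back up the file first:

cp /etc/profile /etc/profile.bak2017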
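The appends in this section (`cat >> file << EOF`) add their lines again every time a step is rerun. A minimal sketch of an idempotent alternative for single lines; `append_once` is a hypothetical helper name, not part of the original procedure.

```shell
# append_once FILE LINE
# Appends LINE to FILE unless FILE already contains exactly that line.
append_once() {
    file=$1
    line=$2
    # -x: match whole lines only, -F: treat LINE as a fixed string.
    grep -qxF -- "$line" "$file" 2>/dev/null ||
        printf '%s\n' "$line" >> "$file"
}
```

With it, the timeout setting becomes `append_once /etc/profile 'export TMOUT=1800'`, which can be run any number of times.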

cat >> /etc/profile << EOF

export TMOUT=1800

EOF

2.4. Download the software packages

(Do this on both load balancers, master and slave.)

[root@web1 ~]# cd /usr/local/src/

[root@web1 src]# wget http://nginx.org/download/nginx-1.9.7.tar.gz

[root@web1 src]# wget http://www.keepalived.org/software/keepalived-1.3.2.tar.gz

2.5. Install nginx

(Do this on both load balancers, master and slave.)

[root@web1 src]# tar -zxvf nginx-1.9.7.tar.gz

[root@web1 src]# cd nginx-1.9.7

# Add the www user; -M skips creating a home directory and -s sets the login shell

[root@web1 nginx-1.9.7]# useradd www -M -s /sbin/nologin

[root@web1 nginx-1.9.7]# vim auto/cc/gcc

# Comment out the following line to disable the debug build mode (around line 179)

# debug

# CFLAGS="$CFLAGS -g"
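The same edit can be scripted instead of made by hand in vim. A minimal sketch, assuming the line in auto/cc/gcc reads exactly `CFLAGS="$CFLAGS -g"` at the start of a line, as shown above; `disable_debug_cflags` is a hypothetical helper name.

```shell
# disable_debug_cflags FILE
# Comments out the line CFLAGS="$CFLAGS -g" in FILE (auto/cc/gcc in the
# nginx source tree). Lines that are already commented are not touched,
# because the pattern is anchored at the start of the line.
disable_debug_cflags() {
    sed -i 's/^CFLAGS="\$CFLAGS -g"$/# &/' "$1"
}
```

It would be run from the source directory as `disable_debug_cflags auto/cc/gcc` before `./configure`.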

[root@web1 nginx-1.9.7]# ./configure --prefix=/usr/local/nginx --user=www --group=www --with-http_ssl_module --with-http_flv_module --with-http_stub_status_module --with-http_gzip_static_module --with-pcre

[root@web1 nginx-1.9.7]# make && make install

2.6. Install keepalived

(Do this on both load balancers, master and slave.)

[root@web1 nginx-1.9.7]# cd /usr/local/src/

[root@web1 src]# tar -zvxf keepalived-1.3.2.tar.gz

[root@web1 src]# cd keepalived-1.3.2

[root@web1 keepalived-1.3.2]# ./configure

[root@web1 keepalived-1.3.2]# make && make install

[root@web1 keepalived-1.3.2]# cp /usr/local/src/keepalived-1.3.2/keepalived/etc/init.d/keepalived /etc/rc.d/init.d/

[root@web1 keepalived-1.3.2]# cp /usr/local/etc/sysconfig/keepalived /etc/sysconfig/

[root@web1 keepalived-1.3.2]# mkdir /etc/keepalived

[root@web1 keepalived-1.3.2]# cp /usr/local/etc/keepalived/keepalived.conf /etc/keepalived/

[root@web1 keepalived-1.3.2]# cp /usr/local/sbin/keepalived /usr/sbin/

[root@web1 keepalived-1.3.2]# echo "/usr/local/nginx/sbin/nginx" >> /etc/rc.local

[root@web1 keepalived-1.3.2]# echo "/etc/init.d/keepalived start" >> /etc/rc.local

3. Configure the services

3.1. Disable SELinux

Disable SELinux and configure the firewall first (do this on both load balancers, master and slave).

[root@web1 keepalived-1.3.2]# cd /root/

[root@web1 ~]# sed -i 's/^SELINUX=enforcing/SELINUX=disabled/' /etc/selinux/config

[root@web1 ~]# grep SELINUX=disabled /etc/selinux/config

[root@web1 ~]# setenforce 0

3.2. Stop the firewall

[root@web1 ~]# /etc/init.d/iptables stop

3.3. Configure nginx

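The nginx configuration is identical on the master and slave servers. The main work is in the http block of /usr/local/nginx/conf/nginx.conf; you can also set up a vhosts directory for virtual hosts and put the configuration in a file under it, such as LB.conf.

In this setup:

Multiple domain names are handled with virtual hosts (server blocks under http);

Different virtual directories of one domain are handled with separate location blocks inside each server;

Backend servers are declared as upstream blocks in vhosts/LB.conf and referenced from a server or location through proxy_pass.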
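Once LB.conf exists, it helps to be able to list which backends an upstream name resolves to. A rough sketch, assuming each `upstream NAME {` header, `server` entry and closing `}` sits on its own line; `upstream_servers` is a hypothetical helper and not an nginx command.

```shell
# upstream_servers FILE NAME
# Prints the address of every "server" entry inside "upstream NAME { ... }"
# in the nginx configuration file FILE, one address per line.
upstream_servers() {
    awk -v name="$2" '
        $1 == "upstream" && $2 == name { inside = 1; next }
        inside && $1 == "}"            { inside = 0 }
        inside && $1 == "server"       { sub(/;$/, "", $2); print $2 }
    ' "$1"
}
```

For example, `upstream_servers /usr/local/nginx/conf/vhosts/LB.conf LB-OA` lists the backends that `proxy_pass http://LB-OA;` distributes requests to.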

To implement the access scheme planned above, nginx.conf and LB.conf are configured as follows (the proxy_cache_path and proxy_temp_path lines enable nginx's cache):

[root@web1 ~]# vim /usr/local/nginx/conf/nginx.conf

user www;

worker_processes 8;

#error_log logs/error.log;

#error_log logs/error.log notice;

#error_log logs/error.log info;

#pid logs/nginx.pid;

events {

worker_connections 65535;

}

http {

include mime.types;

default_type application/octet-stream;

charset utf-8;

######

### set access log format

#######

log_format main '$remote_addr - $remote_user [$time_local] "$request" '

'$status $body_bytes_sent "$http_referer" '

'"$http_user_agent" "$http_x_forwarded_for"';

#access_log logs/access.log main;

#######

## http setting

#######

sendfile on;

#tcp_nopush on;

tcp_nopush on;

tcp_nodelay on;

keepalive_timeout 65;

proxy_cache_path /var/www/cache levels=1:2 keys_zone=mycache:20m max_size=2048m inactive=60m;

proxy_temp_path /var/www/cache/tmp;

fastcgi_connect_timeout 3000;

fastcgi_send_timeout 3000;

fastcgi_read_timeout 3000;

fastcgi_buffer_size 256k;

fastcgi_buffers 8 256k;

fastcgi_busy_buffers_size 256k;

fastcgi_temp_file_write_size 256k;

fastcgi_intercept_errors on;

#keepalive_timeout 0;

#keepalive_timeout 65;

#

client_header_timeout 600s;

client_body_timeout 600s;

# client_max_body_size 50m;

client_max_body_size 100m; # maximum allowed size of a client request body

client_body_buffer_size 256k; # buffer for reading the client request body; larger bodies are written to a temporary file

#gzip on;

gzip_min_length 1k;

gzip_buffers 4 16k;

gzip_http_version 1.1;

gzip_comp_level 9;

gzip_types text/plain application/x-javascript text/css application/xml text/javascript application/x-httpd-php;

gzip_vary on;

## includes vhosts

include vhosts/*.conf;

}

# Create the corresponding directories

[root@web1 ~]# mkdir -p /usr/local/nginx/conf/vhosts

[root@web1 ~]# mkdir -p /var/www/cache

[root@web1 ~]# ulimit -n 65535
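The limit that matters for `worker_connections 65535` is the number of open files, which `ulimit` selects with `-n`; in bash a bare `ulimit N` changes the file-size limit instead. A small sketch for confirming the limit in the shell that will start nginx; `nofile_at_least` is a hypothetical helper name.

```shell
# nofile_at_least MIN
# Succeeds if the soft limit on open files in the current shell is
# "unlimited" or at least MIN.
nofile_at_least() {
    want=$1
    have=$(ulimit -n)
    [ "$have" = "unlimited" ] || [ "$have" -ge "$want" ]
}
```

A possible use is `nofile_at_least 65535 || echo "open-files limit is too low"` right before starting nginx.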

[root@web2 ~]# vim /usr/local/nginx/conf/vhosts/LB.conf

upstream LB-WWW {

ip_hash;

server 172.16.12.223:80 max_fails=3 fail_timeout=30s; # max_fails=3: number of failed attempts allowed within fail_timeout (default 1)

server 172.16.12.224:80 max_fails=3 fail_timeout=30s; # fail_timeout=30s: after max_fails failures, stop sending requests to this backend for this long

server 172.16.12.225:80 max_fails=3 fail_timeout=30s;

}

upstream LB-OA {

ip_hash;

server 172.16.12.223:8080 max_fails=3 fail_timeout=30s;

server 172.16.12.224:8080 max_fails=3 fail_timeout=30s;

}

server {

listen 80;

server_name localhost;

access_log /usr/local/nginx/logs/dev-access.log main;

error_log /usr/local/nginx/logs/dev-error.log;

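location /svn {

proxy_pass http://172.16.12.226/svn/;

proxy_redirect off ;

proxy_set_header Host $host;

proxy_set_header X-Real-IP $remote_addr;

proxy_set_header REMOTE-HOST $remote_addr;

proxy_set_header X-Forwarded-For $proxy_add_x_forwarded_for;

proxy_connect_timeout 300; # timeout for establishing a connection to the backend (waiting for the handshake)

proxy_send_timeout 300; # timeout between two successive writes when sending the request to the backend

proxy_read_timeout 600; # timeout between two successive reads of the backend's response

proxy_buffer_size 256k; # buffer for the first part of the backend response, which normally holds the response headers

proxy_buffers 4 256k; # number and size of the buffers used to read the response, per connection

proxy_busy_buffers_size 256k; # upper bound on the buffers that may be busy sending the response to the client

proxy_temp_file_write_size 256k; # amount of data written to a temporary file at a time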

proxy_next_upstream error timeout invalid_header http_500 http_503 http_404;

proxy_max_temp_file_size 128m;

proxy_cache mycache;

proxy_cache_valid 200 302 60m;

proxy_cache_valid 404 1m;

}

location /submin {

proxy_pass http://172.16.12.226/submin/;

proxy_redirect off ;

proxy_set_header Host $host;

proxy_set_header X-Real-IP $remote_addr;

proxy_set_header REMOTE-HOST $remote_addr;

proxy_set_header X-Forwarded-For $proxy_add_x_forwarded_for;

proxy_connect_timeout 300;

proxy_send_timeout 300;

proxy_read_timeout 600;

proxy_buffer_size 256k;

proxy_buffers 4 256k;

proxy_busy_buffers_size 256k;

proxy_temp_file_write_size 256k;

proxy_next_upstream error timeout invalid_header http_500 http_503 http_404;

proxy_max_temp_file_size 128m;

proxy_cache mycache;

proxy_cache_valid 200 302 60m;

proxy_cache_valid 404 1m;

}

}

server {

listen 80;

server_name localhost;

access_log /usr/local/nginx/logs/www-access.log main;

error_log /usr/local/nginx/logs/www-error.log;

location / {

proxy_pass http://LB-WWW;

proxy_redirect off ;

proxy_set_header Host $host;

proxy_set_header X-Real-IP $remote_addr;

proxy_set_header REMOTE-HOST $remote_addr;

proxy_set_header X-Forwarded-For $proxy_add_x_forwarded_for;

proxy_connect_timeout 300;

proxy_send_timeout 300;

proxy_read_timeout 600;

proxy_buffer_size 256k;

proxy_buffers 4 256k;

proxy_busy_buffers_size 256k;

proxy_temp_file_write_size 256k;

proxy_next_upstream error timeout invalid_header http_500 http_503 http_404;

proxy_max_temp_file_size 128m;

proxy_cache mycache;

proxy_cache_valid 200 302 60m;

proxy_cache_valid 404 1m;

}

}

server {

listen 80;

server_name localhost;

access_log /usr/local/nginx/logs/oa-access.log main;

error_log /usr/local/nginx/logs/oa-error.log;

location / {

proxy_pass http://LB-OA;

proxy_redirect off ;

proxy_set_header Host $host;

proxy_set_header X-Real-IP $remote_addr;

proxy_set_header REMOTE-HOST $remote_addr;

proxy_set_header X-Forwarded-For $proxy_add_x_forwarded_for;

proxy_connect_timeout 300;

proxy_send_timeout 300;

proxy_read_timeout 600;

proxy_buffer_size 256k;

proxy_buffers 4 256k;

proxy_busy_buffers_size 256k;

proxy_temp_file_write_size 256k;

proxy_next_upstream error timeout invalid_header http_500 http_503 http_404;

proxy_max_temp_file_size 128m;

proxy_cache mycache;

proxy_cache_valid 200 302 60m;

proxy_cache_valid 404 1m;

}

}

3.4. Prepare for verification

3.4.1. Run on the svn server

cat >/usr/local/nginx/conf/vhosts/svn.conf <<EOF

server {

listen 80;

server_name svn 172.16.12.225;

access_log /usr/local/nginx/logs/svn-access.log main;

error_log /usr/local/nginx/logs/svn-error.log;

location / {

root /var/www/html;

index index.html index.php index.htm;

}

}

EOF

[root@svn ~]# cat /usr/local/nginx/conf/vhosts/svn.conf

server {

listen 80;

server_name svn 172.16.12.225;

access_log /usr/local/nginx/logs/svn-access.log main;

error_log /usr/local/nginx/logs/svn-error.log;

location / {

root /var/www/html;

index index.html index.php index.htm;

}

}

[root@svn ~]#

[root@svn ~]# mkdir -p /var/www/html

[root@svn ~]# mkdir -p /var/www/html/submin

[root@svn ~]# mkdir -p /var/www/html/svn

[root@svn ~]# cat /var/www/html/svn/index.html

this is the page of svn/172.16.12.225

[root@svn ~]# cat /var/www/html/submin/index.html

this is the page of submin/172.16.12.225
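The transcript shows the test pages with `cat` but not how they were created. One way to create them, as a sketch: the helper name `make_test_page` is an assumption, and the page text simply follows the output shown above.

```shell
# make_test_page DOCROOT NAME IP
# Creates DOCROOT/NAME/index.html containing a single line that identifies
# the directory and the server, e.g. "this is the page of svn/172.16.12.225".
make_test_page() {
    docroot=$1
    name=$2
    ip=$3
    mkdir -p "$docroot/$name" &&
        printf 'this is the page of %s/%s\n' "$name" "$ip" \
            > "$docroot/$name/index.html"
}
```

On the svn server this would be `make_test_page /var/www/html svn 172.16.12.225` and `make_test_page /var/www/html submin 172.16.12.225`; on web1 and web2 the name is `web` with the respective address.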

[root@svn ~]# chown -R www.www /var/www/html/

[root@svn ~]# chmod -R 755 /var/www/html/

[root@svn ~]# cat /etc/hosts

127.0.0.1 localhost localhost.localdomain localhost4 localhost4.localdomain4

::1 localhost localhost.localdomain localhost6 localhost6.localdomain6

172.16.12.223 web1

172.16.12.224 web2

172.16.12.225 svn

[root@svn ~]# tail -4 /etc/rc.local

touch /var/lock/subsys/local

/etc/init.d/iptables stop

/usr/local/nginx/sbin/nginx

/etc/init.d/keepalived start

# Start nginx

[root@svn ~]# /usr/local/nginx/sbin/nginx

# Request the pages

[root@svn local]# curl http://172.16.12.225/submin/

this is the page of submin/172.16.12.225

[root@svn local]# curl http://172.16.12.225/svn/

this is the page of svn/172.16.12.225

3.4.2. Run on web1

[root@web1 ~]# curl http://172.16.12.225/submin/

this is the page of submin/172.16.12.225

[root@web1 ~]# curl http://172.16.12.225/svn/

this is the page of svn/172.16.12.225

cat >/usr/local/nginx/conf/vhosts/web.conf <<EOF

server {

listen 80;

server_name web 172.16.12.223;

access_log /usr/local/nginx/logs/web-access.log main;

error_log /usr/local/nginx/logs/web-error.log;

location / {

root /var/www/html;

index index.html index.php index.htm;

}

}

EOF

[root@web1 ~]# cat /usr/local/nginx/conf/vhosts/web.conf

server {

listen 80;

server_name web 172.16.12.223;

access_log /usr/local/nginx/logs/web-access.log main;

error_log /usr/local/nginx/logs/web-error.log;

location / {

root /var/www/html;

index index.html index.php index.htm;

}

}

[root@web1 ~]# mkdir -p /var/www/html

[root@web1 ~]# mkdir -p /var/www/html/web

[root@web1 ~]# cat /var/www/html/web/index.html

this is the page of web/172.16.12.223

[root@web1 ~]# chown -R www.www /var/www/html/

[root@web1 ~]# chmod -R 755 /var/www/html/

[root@web1 ~]# cat /etc/hosts

127.0.0.1 localhost localhost.localdomain localhost4 localhost4.localdomain4

::1 localhost localhost.localdomain localhost6 localhost6.localdomain6

172.16.12.223 web1

172.16.12.224 web2

172.16.12.225 svn

[root@web1 ~]# tail -4 /etc/rc.local

touch /var/lock/subsys/local

/etc/init.d/iptables stop

/usr/local/nginx/sbin/nginx

/etc/init.d/keepalived start

[root@web1 ~]# /usr/local/nginx/sbin/nginx

[root@web1 ~]# curl http://172.16.12.223/web/

this is the page of web/172.16.12.223

3.4.3. Run on web2

[root@web2 ~]# curl http://172.16.12.225/submin/

this is the page of submin/172.16.12.225

[root@web2 ~]# curl http://172.16.12.225/svn/

this is the page of svn/172.16.12.225

cat >/usr/local/nginx/conf/vhosts/web.conf <<EOF

server {

listen 80;

server_name web 172.16.12.224;

access_log /usr/local/nginx/logs/web-access.log main;

error_log /usr/local/nginx/logs/web-error.log;

location / {

root /var/www/html;

index index.html index.php index.htm;

}

}

EOF

[root@web2 ~]# cat /usr/local/nginx/conf/vhosts/web.conf

server {

listen 80;

server_name web 172.16.12.224;

access_log /usr/local/nginx/logs/web-access.log main;

error_log /usr/local/nginx/logs/web-error.log;

location / {

root /var/www/html;

index index.html index.php index.htm;

}

}

[root@web2 ~]#

[root@web2 ~]# mkdir -p /var/www/html

[root@web2 ~]# mkdir -p /var/www/html/web

[root@web2 ~]# cat /var/www/html/web/index.html

this is the page of web/172.16.12.224

[root@web2 ~]# chown -R www.www /var/www/html/

[root@web2 ~]# chmod -R 755 /var/www/html/

[root@web2 ~]# cat /etc/hosts

127.0.0.1 localhost localhost.localdomain localhost4 localhost4.localdomain4

::1 localhost localhost.localdomain localhost6 localhost6.localdomain6

172.16.12.223 web1

172.16.12.224 web2

172.16.12.225 svn

[root@web2 ~]# tail -4 /etc/rc.local

touch /var/lock/subsys/local

/etc/init.d/iptables stop

/usr/local/nginx/sbin/nginx

/etc/init.d/keepalived start

# Start nginx

[root@web2 ~]# /usr/local/nginx/sbin/nginx

# Request the pages

[root@web2 local]# curl http://172.16.12.224/web/

this is the page of web/172.16.12.224

3.4.4. Browser test
