
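After an afternoon of trial and error I finally got this cluster up. Now that it's done, I'm not sure it was really necessary; I'll count it as a learning exercise and as groundwork for building a real environment later.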

What follows is the deployment of an HA + Federation HDFS cluster with YARN.

First, my setup:

The daemons started on each of the four nodes are:

1. linuxidc117: active namenode

2. linuxidc118: standby namenode, journalnode, datanode

3. linuxidc119: active namenode, journalnode, datanode

4. linuxidc120: standby namenode, journalnode, datanode

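I only did it this way because my computer can't handle that many virtual machines; in a real deployment each of these roles should be on a separate server. In short: 117 and 119 are the active namenodes, 118 and 120 are the standby namenodes, and 118, 119 and 120 each also run a datanode and a journalnode.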
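The layout above can be jotted down as a small host-to-role table and sanity-checked before any configuration is written. A minimal sketch: the ROLES dict and the helper functions are hypothetical (nothing Hadoop reads), and the "odd number of at least three journalnodes" rule follows the recommendation in the Apache QJM HA guide.

```python
# Hypothetical host -> roles table mirroring the list above; Hadoop never reads this.
ROLES = {
    "linuxidc117": {"active namenode"},
    "linuxidc118": {"standby namenode", "journalnode", "datanode"},
    "linuxidc119": {"active namenode", "journalnode", "datanode"},
    "linuxidc120": {"standby namenode", "journalnode", "datanode"},
}


def hosts_with(role, roles=ROLES):
    """Return the sorted host names that run the given role."""
    return sorted(host for host, rs in roles.items() if role in rs)


def check_layout(roles=ROLES):
    """Return a list of warnings; an empty list means nothing obvious is wrong."""
    warnings = []
    jns = hosts_with("journalnode", roles)
    # QJM needs a majority of journalnodes; an odd count >= 3 is the usual advice.
    if len(jns) < 3 or len(jns) % 2 == 0:
        warnings.append(f"expected an odd number (>= 3) of journalnodes, got {len(jns)}")
    # In an HA pair every active namenode needs a standby partner.
    if len(hosts_with("active namenode", roles)) != len(hosts_with("standby namenode", roles)):
        warnings.append("active and standby namenode counts differ")
    return warnings


if __name__ == "__main__":
    for role in ("active namenode", "standby namenode", "journalnode", "datanode"):
        print(f"{role:<17} {', '.join(hosts_with(role))}")
    print(check_layout() or "layout looks consistent")
```

Writing the plan down this way makes it easy to rerun the check if roles are later moved to separate servers.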

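(Ten thousand words of configuration omitted here.) Once everything was configured, these are the problems I ran into and my notes on them: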

1. Start the journalnodes. To be honest I'm not entirely sure what a journalnode is for yet; I'll look into it later. Start one on each journalnode host (118, 119 and 120):

[linuxidc@linuxidc118 hadoop-2.6.0]$ sbin/hadoop-daemon.sh start journalnode

starting journalnode, logging to /home/linuxidc/hadoop-2.6.0/logs/hadoop-linuxidc-journalnode-linuxidc118.linuxidc.out

[linuxidc@linuxidc118 hadoop-2.6.0]$ jps

11447 JournalNode

11485 Jps
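For reference, what the journalnodes do: with Quorum Journal Manager HA, the active namenode sends every edit-log write to all journalnodes and treats it as committed once a majority of them acknowledge it, while the standby reads those edits to keep its namespace current. The "majority" arithmetic is small enough to sketch; the function names are made up for illustration.

```python
def majority(n_journalnodes: int) -> int:
    """Smallest number of journalnodes that forms a strict majority."""
    return n_journalnodes // 2 + 1


def tolerated_failures(n_journalnodes: int) -> int:
    """How many journalnodes may be down while a majority can still acknowledge writes."""
    return (n_journalnodes - 1) // 2


def write_can_commit(n_journalnodes: int, n_acks: int) -> bool:
    """True if n_acks acknowledgements are enough for an edit to count as committed."""
    return n_acks >= majority(n_journalnodes)


if __name__ == "__main__":
    for n in (3, 4, 5):
        print(f"{n} journalnodes: need {majority(n)} acks, survive {tolerated_failures(n)} failures")
```

With the three journalnodes here, two acknowledgements are needed and one journalnode can fail; a fourth would not raise the number of tolerated failures, which is why odd counts are the usual choice.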

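2. Formatting the namenode failed with the error below. The cause turned out to be that the firewall was still on: passwordless SSH working only shows that port 22 gets through, not that the firewall can be left alone, since the journalnodes listen on port 8485.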

15/08/20 02:12:45 INFO ipc.Client: Retrying connect to server: linuxidc119/192.168.75.119:8485. Already tried 8 time(s); retry policy is RetryUpToMaximumCountWithFixedSleep(maxRetries=10, sleepTime=1000 MILLISECONDS)

15/08/20 02:12:46 INFO ipc.Client: Retrying connect to server: linuxidc118/192.168.75.118:8485. Already tried 8 time(s); retry policy is RetryUpToMaximumCountWithFixedSleep(maxRetries=10, sleepTime=1000 MILLISECONDS)

15/08/20 02:12:46 INFO ipc.Client: Retrying connect to server: linuxidc120/192.168.75.120:8485. Already tried 9 time(s); retry policy is RetryUpToMaximumCountWithFixedSleep(maxRetries=10, sleepTime=1000 MILLISECONDS)

15/08/20 02:12:46 INFO ipc.Client: Retrying connect to server: linuxidc119/192.168.75.119:8485. Already tried 9 time(s); retry policy is RetryUpToMaximumCountWithFixedSleep(maxRetries=10, sleepTime=1000 MILLISECONDS)

15/08/20 02:12:46 WARN namenode.NameNode: Encountered exception during format:

org.apache.hadoop.hdfs.qjournal.client.QuorumException: Unable to check if JNs are ready for formatting. 2 exceptions thrown:

192.168.75.120:8485: No Route to Host from 43.49.49.59.broad.ty.sx.dynamic.163data.com.cn/59.49.49.43 to linuxidc120:8485 failed on socket timeout exception: java.net.NoRouteToHostException: No route to host; For more details see: http://wiki.apache.org/hadoop/NoRouteToHost

192.168.75.119:8485: No Route to Host from 43.49.49.59.broad.ty.sx.dynamic.163data.com.cn/59.49.49.43 to linuxidc119:8485 failed on socket timeout exception: java.net.NoRouteToHostException: No route to host; For more details see: http://wiki.apache.org/hadoop/NoRouteToHost

at org.apache.hadoop.hdfs.qjournal.client.QuorumException.create(QuorumException.java:81)

at org.apache.hadoop.hdfs.qjournal.client.QuorumCall.rethrowException(QuorumCall.java:223)

at org.apache.hadoop.hdfs.qjournal.client.QuorumJournalManager.hasSomeData(QuorumJournalManager.java:232)

at org.apache.hadoop.hdfs.server.common.Storage.confirmFormat(Storage.java:884)

at org.apache.hadoop.hdfs.server.namenode.FSImage.confirmFormat(FSImage.java:171)

at org.apache.hadoop.hdfs.server.namenode.NameNode.format(NameNode.java:937)

at org.apache.hadoop.hdfs.server.namenode.NameNode.createNameNode(NameNode.java:1379)

at org.apache.hadoop.hdfs.server.namenode.NameNode.main(NameNode.java:1504)

15/08/20 02:12:47 INFO ipc.Client: Retrying connect to server: linuxidc118/192.168.75.118:8485. Already tried 9 time(s); retry policy is RetryUpToMaximumCountWithFixedSleep(maxRetries=10, sleepTime=1000 MILLISECONDS)

15/08/20 02:12:47 FATAL namenode.NameNode: Failed to start namenode.

org.apache.hadoop.hdfs.qjournal.client.QuorumException: Unable to check if JNs are ready for formatting. 2 exceptions thrown:

192.168.75.120:8485: No Route to Host from 43.49.49.59.broad.ty.sx.dynamic.163data.com.cn/59.49.49.43 to linuxidc120:8485 failed on socket timeout exception: java.net.NoRouteToHostException: No route to host; For more details see: http://wiki.apache.org/hadoop/NoRouteToHost

192.168.75.119:8485: No Route to Host from 43.49.49.59.broad.ty.sx.dynamic.163data.com.cn/59.49.49.43 to linuxidc119:8485 failed on socket timeout exception: java.net.NoRouteToHostException: No route to host; For more details see: http://wiki.apache.org/hadoop/NoRouteToHost

at org.apache.hadoop.hdfs.qjournal.client.QuorumException.create(QuorumException.java:81)

at org.apache.hadoop.hdfs.qjournal.client.QuorumCall.rethrowException(QuorumCall.java:223)

at org.apache.hadoop.hdfs.qjournal.client.QuorumJournalManager.hasSomeData(QuorumJournalManager.java:232)

at org.apache.hadoop.hdfs.server.common.Storage.confirmFormat(Storage.java:884)

at org.apache.hadoop.hdfs.server.namenode.FSImage.confirmFormat(FSImage.java:171)

at org.apache.hadoop.hdfs.server.namenode.NameNode.format(NameNode.java:937)

at org.apache.hadoop.hdfs.server.namenode.NameNode.createNameNode(NameNode.java:1379)

at org.apache.hadoop.hdfs.server.namenode.NameNode.main(NameNode.java:1504)

15/08/20 02:12:47 INFO util.ExitUtil: Exiting with status 1

15/08/20 02:12:47 INFO namenode.NameNode: SHUTDOWN_MSG:

/************************************************************

SHUTDOWN_MSG: Shutting down NameNode at 43.49.49.59.broad.ty.sx.dynamic.163data.com.cn/59.49.49.43
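Errors like this can be caught before re-running the format by testing a plain TCP connection from the namenode host to port 8485 on each journalnode. A minimal sketch, assuming port 8485 (the one in the log) and this setup's journalnode host names; it only checks that a connection opens, not that the service behind it is healthy. The log also shows the namenode host identifying itself as 43.49.49.59.broad.ty.sx.dynamic.163data.com.cn rather than linuxidc117, so the script prints the local fully-qualified name as Python sees it too, which may be worth comparing with /etc/hosts.

```python
import socket
import sys

JN_PORT = 8485  # journalnode RPC port, as it appears in the log above


def can_connect(host: str, port: int, timeout: float = 2.0) -> bool:
    """Try a plain TCP connection; True if something accepted it within the timeout."""
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:
        # Covers "No route to host", "Connection refused", timeouts and DNS failures.
        return False


if __name__ == "__main__":
    # Usage (script name is arbitrary): python check_jn.py linuxidc118 linuxidc119 linuxidc120
    hosts = sys.argv[1:]
    if hosts:
        print("local name as seen by Python:", socket.getfqdn())
    for host in hosts:
        status = "ok" if can_connect(host, JN_PORT) else "UNREACHABLE (firewall? DNS?)"
        print(f"{host}:{JN_PORT} {status}")
```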

After turning off the firewall, the format succeeded:

[linuxidc@linuxidc117 hadoop-2.6.0]$ bin/hdfs namenode -format -clusterId hadoop-cluster

15/08/20 02:22:05 INFO namenode.FSNamesystem: Append Enabled: true

15/08/20 02:22:06 INFO util.GSet: Computing capacity for map INodeMap

15/08/20 02:22:06 INFO util.GSet: VM type = 64-bit

15/08/20 02:22:06 INFO util.GSet: 1.0% max memory 889 MB = 8.9 MB

15/08/20 02:22:06 INFO util.GSet: capacity = 2^20 = 1048576 entries

15/08/20 02:22:06 INFO namenode.NameNode: Caching file names occuring more than 10 times

15/08/20 02:22:06 INFO util.GSet: Computing capacity for map cachedBlocks

15/08/20 02:22:06 INFO util.GSet: VM type = 64-bit

15/08/20 02:22:06 INFO util.GSet: 0.25% max memory 889 MB = 2.2 MB

15/08/20 02:22:06 INFO util.GSet: capacity = 2^18 = 262144 entries

15/08/20 02:22:06 INFO namenode.FSNamesystem: dfs.namenode.safemode.threshold-pct = 0.9990000128746033

15/08/20 02:22:06 INFO namenode.FSNamesystem: dfs.namenode.safemode.min.datanodes = 0

15/08/20 02:22:06 INFO namenode.FSNamesystem: dfs.namenode.safemode.extension = 30000

15/08/20 02:22:06 INFO namenode.FSNamesystem: Retry cache on namenode is enabled

15/08/20 02:22:06 INFO namenode.FSNamesystem: Retry cache will use 0.03 of total heap and retry cache entry expiry time is 600000 millis

15/08/20 02:22:06 INFO util.GSet: Computing capacity for map NameNodeRetryCache

15/08/20 02:22:06 INFO util.GSet: VM type = 64-bit

15/08/20 02:22:06 INFO util.GSet: 0.029999999329447746% max memory 889 MB = 273.1 KB

15/08/20 02:22:06 INFO util.GSet: capacity = 2^15 = 32768 entries

15/08/20 02:22:06 INFO namenode.NNConf: ACLs enabled? false

15/08/20 02:22:06 INFO namenode.NNConf: XAttrs enabled? true

15/08/20 02:22:06 INFO namenode.NNConf: Maximum size of an xattr: 16384

15/08/20 02:22:08 INFO namenode.FSImage: Allocated new BlockPoolId: BP-971817124-192.168.75.117-1440062528650

15/08/20 02:22:08 INFO common.Storage: Storage directory /home/linuxidc/hadoop/hdfs/name has been successfully formatted.

15/08/20 02:22:10 INFO namenode.NNStorageRetentionManager: Going to retain 1 images with txid >= 0

15/08/20 02:22:10 INFO util.ExitUtil: Exiting with status 0

15/08/20 02:22:10 INFO namenode.NameNode: SHUTDOWN_MSG:

/************************************************************

SHUTDOWN_MSG: Shutting down NameNode at linuxidc117/192.168.75.117

************************************************************/
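Since the format output is long, it can help to pull out the few lines that matter: the block pool ID, the formatted directory and the exit status. The same block pool ID shows up again when the standby is bootstrapped in step 4, so comparing the two is a quick consistency check. A minimal sketch; the regular expressions are based only on the Hadoop 2.6.0 log lines in this article and may not match other versions.

```python
import re
import sys

# Patterns based only on the Hadoop 2.6.0 log lines shown in this article.
BLOCK_POOL = re.compile(r"(?:Allocated new BlockPoolId:|Block pool ID:)\s*(BP-\S+)")
FORMATTED = re.compile(r"Storage directory (\S+) has been successfully formatted")
EXIT = re.compile(r"Exiting with status (\d+)")


def summarize(log_text: str) -> dict:
    """Extract block pool ID, formatted directories and exit status from the output of
    `hdfs namenode -format` or `hdfs namenode -bootstrapStandby`."""
    bp = BLOCK_POOL.search(log_text)
    status = EXIT.search(log_text)
    return {
        "block_pool_id": bp.group(1) if bp else None,
        "formatted_dirs": FORMATTED.findall(log_text),
        "exit_status": int(status.group(1)) if status else None,
    }


if __name__ == "__main__":
    # Usage: python summarize.py format.log bootstrap.log  (logs saved from the terminal)
    results = {}
    for path in sys.argv[1:]:
        with open(path) as f:
            results[path] = summarize(f.read())
    for path, info in results.items():
        print(path, info)
    if len(results) > 1:
        pools = {info["block_pool_id"] for info in results.values()}
        print("block pool IDs match" if len(pools) == 1 else "block pool IDs DIFFER")
```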

3. Start the namenode:

[linuxidc@linuxidc117 hadoop-2.6.0]$ sbin/hadoop-daemon.sh start namenode

starting namenode, logging to /home/linuxidc/hadoop-2.6.0/logs/hadoop-linuxidc-namenode-linuxidc117.out

[linuxidc@linuxidc117 hadoop-2.6.0]$ jps

18550 NameNode

18604 Jps

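4. Format the standby namenode. The -bootstrapStandby option formats the standby's storage directory and copies the current fsimage over from the active namenode (nn1 on linuxidc117), instead of creating a new namespace: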

[linuxidc@linuxidc119 hadoop-2.6.0]$ bin/hdfs namenode -bootstrapStandby

15/08/20 02:36:26 INFO namenode.NameNode: STARTUP_MSG:

/************************************************************

STARTUP_MSG: Starting NameNode

STARTUP_MSG: host = linuxidc119/192.168.75.119

STARTUP_MSG: args = [-bootstrapStandby]

STARTUP_MSG: version = 2.6.0

…..

…..

STARTUP_MSG: build = https://git-wip-us.apache.org/repos/asf/hadoop.git -r e3496499ecb8d220fba99dc5ed4c99c8f9e33bb1; compiled by 'jenkins' on 2014-11-13T21:10Z

STARTUP_MSG: java = 1.8.0_51

************************************************************/

15/08/20 02:36:26 INFO namenode.NameNode: registered UNIX signal handlers for [TERM, HUP, INT]

15/08/20 02:36:26 INFO namenode.NameNode: createNameNode [-bootstrapStandby]

=====================================================

About to bootstrap Standby ID nn2 from:

Nameservice ID: hadoop-cluster1

Other Namenode ID: nn1

Other NN's HTTP address: http://linuxidc117:50070

Other NN's IPC address: linuxidc117/192.168.75.117:8020

Namespace ID: 1244139539

Block pool ID: BP-971817124-192.168.75.117-1440062528650

Cluster ID: hadoop-cluster

Layout version: -60

=====================================================

15/08/20 02:36:28 INFO common.Storage: Storage directory /home/linuxidc/hadoop/hdfs/name has been successfully formatted.

15/08/20 02:36:29 INFO namenode.TransferFsImage: Opening connection to http://linuxidc117:50070/imagetransfer?getimage=1&txid=0&storageInfo=-60:1244139539:0:hadoop-cluster

15/08/20 02:36:30 INFO namenode.TransferFsImage: Image Transfer timeout configured to 60000 milliseconds

15/08/20 02:36:30 INFO namenode.TransferFsImage: Transfer took 0.01s at 0.00 KB/s

15/08/20 02:36:30 INFO namenode.TransferFsImage: Downloaded file fsimage.ckpt_0000000000000000000 size 352 bytes.

15/08/20 02:36:30 INFO util.ExitUtil: Exiting with status 0

15/08/20 02:36:30 INFO namenode.NameNode: SHUTDOWN_MSG:

/************************************************************

SHUTDOWN_MSG: Shutting down NameNode at linuxidc119/192.168.75.119

************************************************************/

5. Start the standby namenode

[linuxidc@linuxidc119 hadoop-2.6.0]$ sbin/hadoop-daemon.sh start namenode

starting namenode, logging to /home/linuxidc/hadoop-2.6.0/logs/hadoop-linuxidc-namenode-linuxidc119.out

[linuxidc@linuxidc119 hadoop-2.6.0]$ jps

14401 JournalNode

15407 NameNode

15455 Jps
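Steps 1 to 5 only work in this order: journalnodes first, then format and start nn1, then bootstrap and start nn2 from it. A minimal dry-run sketch that prints that sequence for one nameservice as ssh commands without running anything; the function names are hypothetical, HADOOP_HOME is assumed from the paths above, ssh assumes passwordless login, and the pairing (linuxidc117 active, linuxidc119 standby) is the one the bootstrapStandby log shows for hadoop-cluster1.

```python
import shlex

HADOOP_HOME = "/home/linuxidc/hadoop-2.6.0"  # assumed from the prompts and log paths above


def ha_bootstrap_plan(journalnodes, active, standby, cluster_id):
    """(host, command) pairs for bringing up one HA nameservice, in the order of steps 1-5."""
    plan = [(jn, "sbin/hadoop-daemon.sh start journalnode") for jn in journalnodes]
    plan += [
        (active, f"bin/hdfs namenode -format -clusterId {cluster_id}"),
        (active, "sbin/hadoop-daemon.sh start namenode"),
        (standby, "bin/hdfs namenode -bootstrapStandby"),
        (standby, "sbin/hadoop-daemon.sh start namenode"),
    ]
    return plan


def as_ssh_commands(plan, home=HADOOP_HOME):
    """Render the plan as ssh command lines; nothing is executed here."""
    return [f"ssh {host} {shlex.quote(f'cd {home} && {cmd}')}" for host, cmd in plan]


if __name__ == "__main__":
    plan = ha_bootstrap_plan(
        ["linuxidc118", "linuxidc119", "linuxidc120"],
        active="linuxidc117", standby="linuxidc119", cluster_id="hadoop-cluster",
    )
    print("\n".join(as_ssh_commands(plan)))
```

In a federated setup, the second nameservice's namenode would be formatted with the same -clusterId (hadoop-cluster here) so that both namespaces belong to one cluster.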

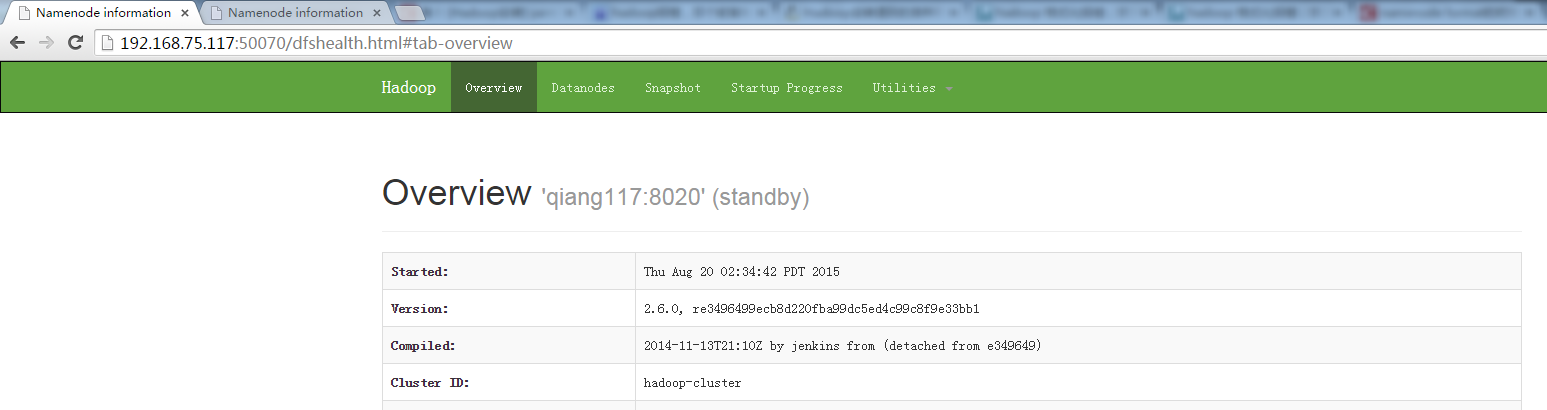

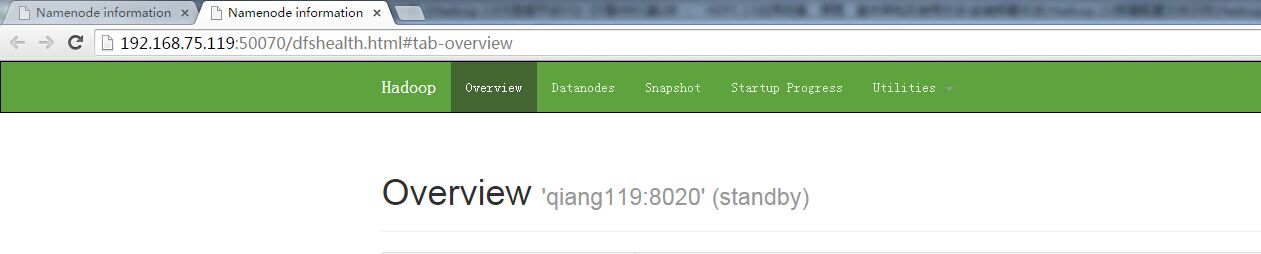

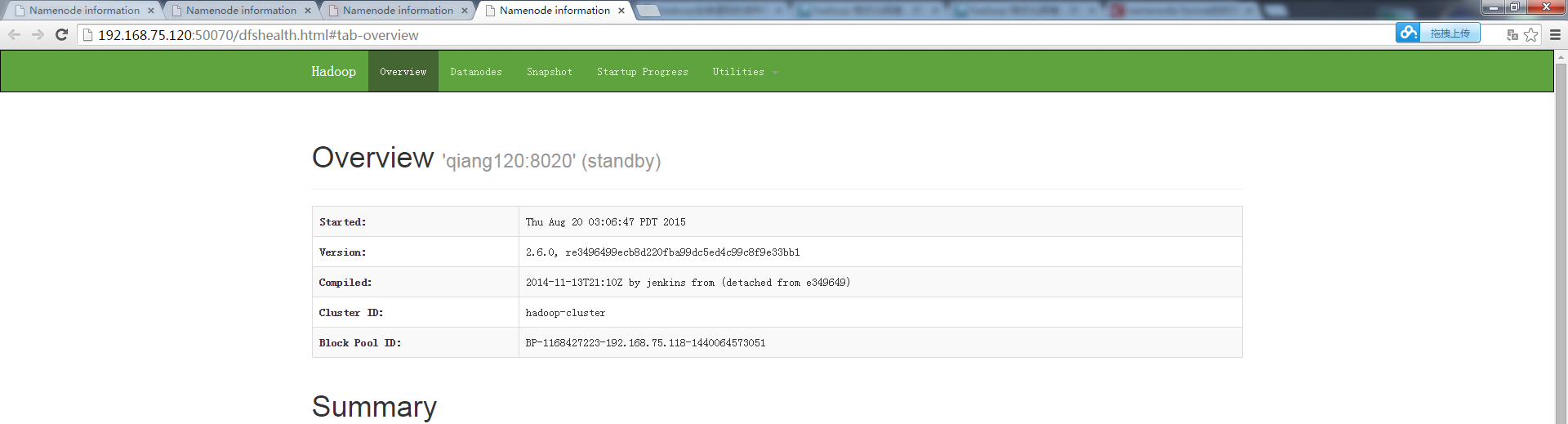

Opened in the web UI, both namenodes show as standby:

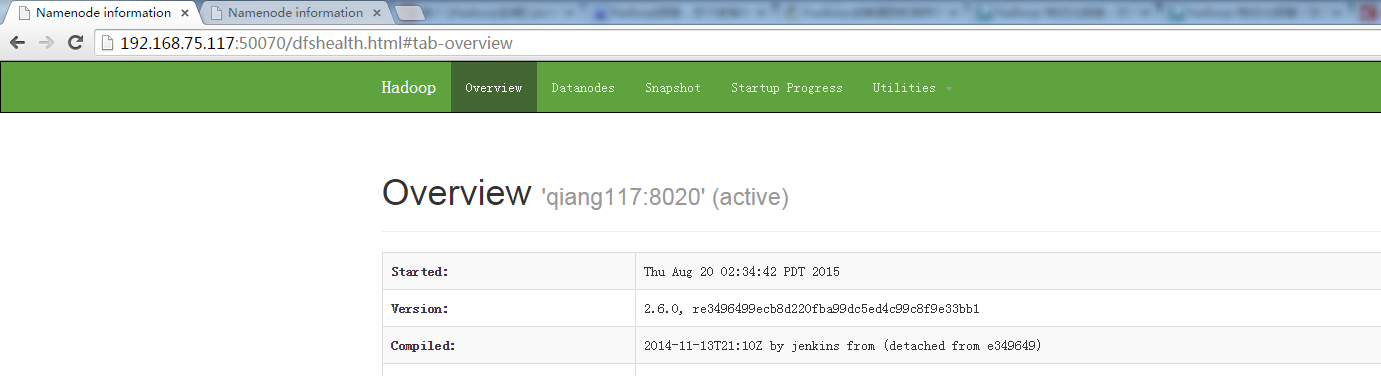

Use this command to switch nn1 to active:

bin/hdfs haadmin -ns hadoop-cluster1 -transitionToActive nn1

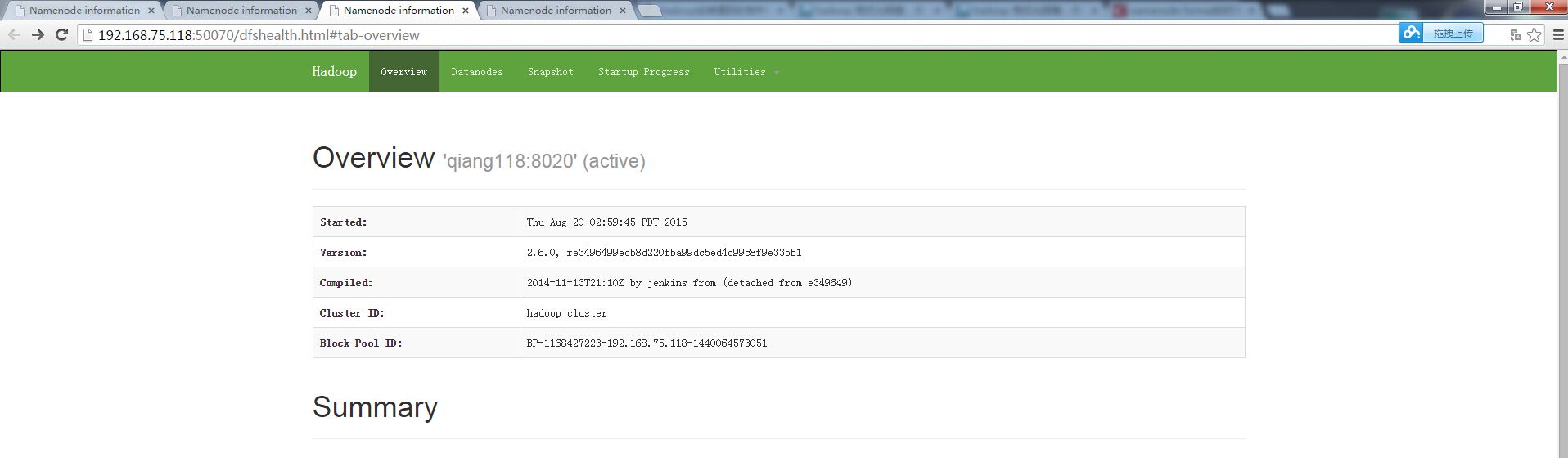
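The switch can also be confirmed from the command line instead of the web page: hdfs haadmin has a -getServiceState option that prints active or standby for a given namenode ID. A minimal sketch that wraps it; the nameservice (hadoop-cluster1) and IDs (nn1, nn2) are the ones in this setup, and it assumes it is run from the Hadoop directory so that bin/hdfs resolves.

```python
import subprocess
import sys


def parse_state(output: str) -> str:
    """haadmin -getServiceState prints the state ('active' or 'standby') on stdout;
    take the last non-empty line in case anything else was printed first."""
    lines = [line.strip() for line in output.splitlines() if line.strip()]
    return lines[-1].lower() if lines else "unknown"


def service_state(nn_id: str, nameservice: str = "hadoop-cluster1", hdfs: str = "bin/hdfs") -> str:
    """Run `hdfs haadmin -ns <ns> -getServiceState <nn_id>` and return the parsed state."""
    result = subprocess.run(
        [hdfs, "haadmin", "-ns", nameservice, "-getServiceState", nn_id],
        capture_output=True, text=True, check=True,
    )
    return parse_state(result.stdout)


if __name__ == "__main__":
    # Usage (from the hadoop-2.6.0 directory): python ha_state.py nn1 nn2
    for nn in sys.argv[1:]:
        print(nn, service_state(nn))
```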

The other two namenodes are handled the same way.

Start all the datanodes. This only works once passwordless SSH login has been set up; see: http://www.linuxidc.com/Linux/2015-08/122259.htm

[linuxidc@linuxidc117 hadoop-2.6.0]$ sbin/hadoop-daemons.sh start datanode

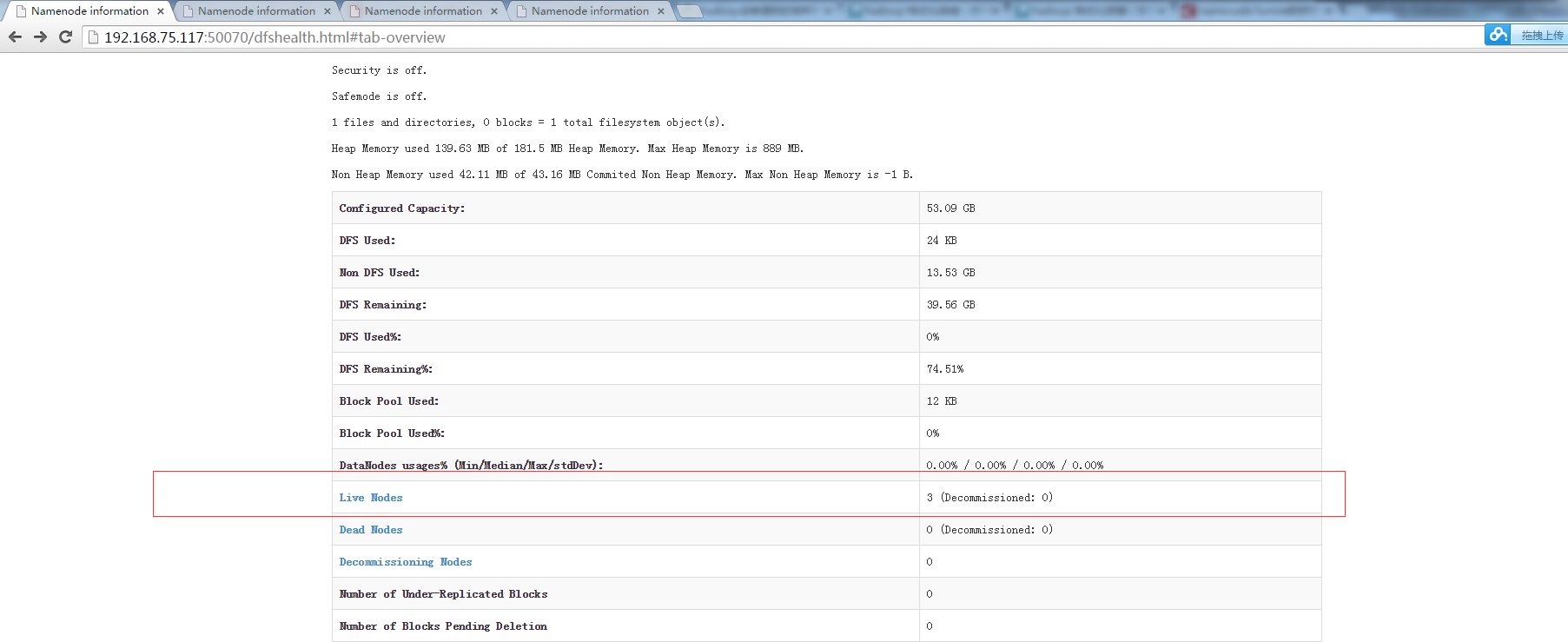

Three were started, exactly the ones planned earlier: 192.168.75.118, 192.168.75.119 and 192.168.75.120.

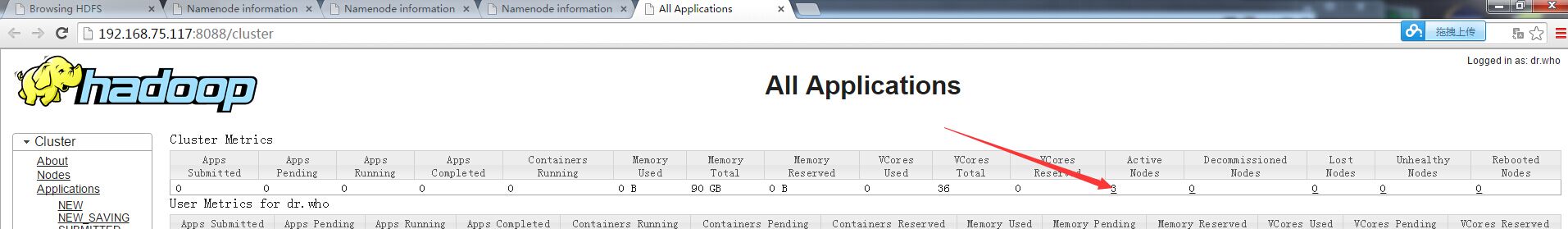
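hadoop-daemons.sh starts the daemon over ssh on every host listed in the slaves file (etc/hadoop/slaves in Hadoop 2.x, unless HADOOP_SLAVES points elsewhere), so the datanodes that came up should be exactly the hosts listed there. A minimal sketch for checking that file against the planned datanode hosts; the helper names are hypothetical, and the comment and blank-line handling roughly mirrors what Hadoop's slaves.sh does with sed.

```python
from pathlib import Path


def parse_slaves(text: str) -> list:
    """Host names from a slaves file: strip '#' comments, skip blank lines."""
    hosts = []
    for line in text.splitlines():
        host = line.split("#", 1)[0].strip()
        if host:
            hosts.append(host)
    return hosts


def read_slaves(conf_dir: str = "etc/hadoop") -> list:
    """Read <conf_dir>/slaves, the host list hadoop-daemons.sh uses by default in Hadoop 2.x."""
    return parse_slaves((Path(conf_dir) / "slaves").read_text())


if __name__ == "__main__":
    expected = {"linuxidc118", "linuxidc119", "linuxidc120"}  # datanode hosts planned above
    if Path("etc/hadoop/slaves").exists():
        found = set(read_slaves())
        print("slaves file matches plan" if found == expected else f"differs: {sorted(found ^ expected)}")
```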

Start YARN:

[linuxidc@linuxidc117 hadoop-2.6.0]$ sbin/start-yarn.sh

starting yarn daemons

starting resourcemanager, logging to /home/linuxidc/hadoop-2.6.0/logs/yarn-linuxidc-resourcemanager-linuxidc117.out

linuxidc118: nodemanager running as process 14812. Stop it first.

linuxidc120: nodemanager running as process 14025. Stop it first.

linuxidc119: nodemanager running as process 17590. Stop it first.

[linuxidc@linuxidc117 hadoop-2.6.0]$ jps

NameNode

Jps

ResourceManager

Here, too, you can see the three datanodes.
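The "Stop it first." messages from start-yarn.sh only mean those nodemanagers were already running, so the script left them alone. To check which daemons are up on a host, jps output can be compared with what that host is supposed to run. A minimal sketch, assuming jps's usual "<pid> <name>" line format (the listing above shows only the names); the function names are hypothetical.

```python
def parse_jps(output: str) -> dict:
    """Map daemon name -> pid from jps lines such as '18550 NameNode' (Jps itself is dropped)."""
    running = {}
    for line in output.splitlines():
        parts = line.split()
        if len(parts) == 2 and parts[0].isdigit() and parts[1] != "Jps":
            running[parts[1]] = int(parts[0])
    return running


def missing_daemons(expected, jps_output: str) -> list:
    """Expected daemon names that do not appear in the jps output."""
    return sorted(set(expected) - set(parse_jps(jps_output)))
```

On linuxidc117 this setup expects NameNode and ResourceManager; the jps output can be captured with subprocess.run(["jps"], capture_output=True, text=True).stdout and passed to missing_daemons.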

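To sum up... If you are teaching yourself big data, a simple deployment is enough, as long as you can put your programs on HDFS and run them. A cluster like this is something to study in detail at the very end, or when you actually need it; better to move on to the next stage quickly.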


Permanent link to this article: http://www.linuxidc.com/Linux/2015-08/122260.htm