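Lab environment:

Introduction to LVS and keepalived:

1. keepalived was designed to build highly available LVS load-balancing clusters. It can create virtual servers and manage the server pool itself (the job otherwise done by hand with the ipvsadm tool), so it is more than a two-node hot-standby tool.

Building an LVS cluster with keepalived is simpler and easier; its main advantages are:

Hot-standby failover for the LVS load scheduler, which improves availability;

Health checks on the nodes in the server pool: failed nodes are removed automatically and added back once they recover.

2. An LVS cluster built on LVS+Keepalived contains at least two hot-standby load schedulers and two or more node servers. This example starts from an LVS cluster in DR mode, adds a backup load scheduler, and uses Keepalived to provide hot standby between the master and backup schedulers, producing an LVS web cluster platform that offers both load balancing and high availability.

3. In a cluster built with LVS+Keepalived, LVS balances user requests across the back-end tomcat servers and Keepalived monitors the state of those web servers. If a web server crashes or malfunctions, Keepalived detects it and removes it from the pool; once the server works again, Keepalived adds it back. All of this happens automatically; the only manual work is repairing the failed web server.

About Keepalived

What is Keepalived: keepalived provides high availability or hot standby for a service, to avoid a single point of failure. Its core is the VRRP protocol, which was designed to provide redundancy for routers and layer-3 switches; Keepalived uses VRRP to implement high availability. The diagram below shows how Keepalived works:

After Keepalived starts there are three processes:

Parent process: memory management and supervision of the child processes

Child process: the VRRP child

Child process: the healthchecker child

As the diagram shows:

Both child processes are supervised by the system WatchDog, and each handles its own job. The healthchecker child checks the health of the servers (HTTP, LVS, and so on). If it finds that a service on the MASTER is unavailable, it notifies the VRRP child on the same machine, which stops sending advertisements, removes the virtual IP, and switches to the BACKUP state;

Lab architecture diagram: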

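Procedure:

Prerequisites for building the HA cluster:

1. The clocks on the proxy and proxy2 nodes must be synchronized;

Using the NTP protocol is recommended;

Reference blog post: http://sohudrgon.blog.51cto.com/3088108/1598314

2. The nodes must be able to reach each other by hostname;

Using the hosts file is recommended;

The name used for communication must match the name printed by "uname -n" on that node;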

[root@proxy ~]# cat /etc/hosts

127.0.0.1 localhost localhost.localdomain localhost4 localhost4.localdomain4

::1 localhost localhost.localdomain localhost6 localhost6.localdomain6

172.16.0.1 server.magelinux.com server

172.16.31.52 proxy.stu31.com proxy

172.16.31.53 proxy2.stu31.com proxy2

172.16.31.50 tom1.stu31.com tom1

172.16.31.51 tom2.stu31.com tom2
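The hostname requirement can be checked with a short script. The following is only a minimal sketch: the function name `hosts_has_name` and its optional file argument are assumptions made for illustration, not part of the original setup.

```shell
#!/bin/sh
# hosts_has_name NAME [FILE]
# Succeeds when NAME appears as a hostname or alias (any field after the
# address) on a non-comment line of FILE (default: /etc/hosts).
hosts_has_name() {
    name=$1
    file=${2:-/etc/hosts}
    awk -v n="$name" '
        /^[[:space:]]*#/ { next }                              # skip comment lines
        { for (i = 2; i <= NF; i++) if ($i == n) found = 1 }   # fields after the IP
        END { exit !found }                                    # 0 when found, 1 otherwise
    ' "$file"
}
```

On each node it could be called as `hosts_has_name "$(uname -n)" || echo "hostname missing from /etc/hosts"`.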

3. The root users on the nodes must be able to reach each other with SSH key authentication;

On node proxy:

# ssh-keygen -t rsa -P ""

# ssh-copy-id -i .ssh/id_rsa.pub proxy2

On node proxy2:

# ssh-keygen -t rsa -P ""

# ssh-copy-id -i .ssh/id_rsa.pub proxy

Test passwordless SSH:

[root@proxy ~]# date ; ssh proxy2 date

Sat Jan 17 15:29:26 CST 2015

Sat Jan 17 15:29:26 CST 2015
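Comparing the two `date` lines by eye works for a quick test. As a rough sketch, the difference can also be computed from epoch seconds; `clock_skew` is a hypothetical helper name, and the result includes the SSH round-trip delay, so it is only an approximation.

```shell
#!/bin/sh
# clock_skew A B
# Prints the absolute difference between two epoch timestamps, in seconds.
clock_skew() {
    d=$(( $1 - $2 ))
    if [ "$d" -lt 0 ]; then
        d=$(( 0 - d ))      # make the result non-negative
    fi
    echo "$d"
}
```

For example: `clock_skew "$(date +%s)" "$(ssh proxy2 date +%s)"`. A result of more than a second or two suggests that time synchronization should be checked.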

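I. Install and configure LVS+keepalived

1. Install the ipvsadm and keepalived packages on both the Master and Backup servers; yum is used here

# yum install -y keepalived ipvsadm

Note: ipvsadm is installed mainly to inspect the LVS rules. The rules can be configured without it, but that is less convenient;

2. Edit the keepalived configuration file

The keepalived configuration file is /etc/keepalived/keepalived.conf

Edit the main configuration file on the Master node, proxy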

[root@proxy ~]# vim /etc/keepalived/keepalived.conf

! Configuration File for keepalived

global_defs {
    notification_email {  # alert recipients; more than one address may be listed
        root@localhost
    }
    notification_email_from admin@stu31.com  # sender address for alert mail
    smtp_server 127.0.0.1  # SMTP server address; it must be reachable
    smtp_connect_timeout 30  # timeout in seconds for connecting to the SMTP server
    router_id LVS_DEVEL  # identifier of this Keepalived host; shown in the mail subject
}

vrrp_instance VI_1 {  # VRRP instance; the name is arbitrary
    state MASTER  # initial role: MASTER for the primary, BACKUP for the standby
    interface eth0  # interface monitored for HA
    virtual_router_id 51  # virtual router ID, a number from 1 to 255; must be identical on master and backup
    priority 100  # a larger number means a higher priority; the master must be higher than the backup
    advert_int 1  # interval in seconds between VRRP advertisements
    authentication {  # authentication type and password
        auth_type PASS  # two types exist: PASS and AH
        auth_pass Oracle  # password; must be identical on master and backup
    }
    virtual_ipaddress {  # virtual IP addresses; several are allowed, one per line
        172.16.31.188
    }
}

virtual_server 172.16.31.188 {  # virtual server: VIP and service port, separated by a space
    delay_loop 6  # health-check interval in seconds
    lb_algo rr  # scheduling algorithm; rr is round robin
    lb_kind DR  # LVS forwarding method: NAT, TUN or DR
    nat_mask 255.255.0.0  # netmask
    persistence_timeout 50  # session persistence in seconds; keeps a client on the same real server, which helps dynamic sites that hold session state on the back end
    protocol TCP  # forwarding protocol: TCP or UDP
    real_server 172.16.31.50 8080 {  # back-end node: real server IP and port, separated by a space
        weight 1  # node weight; a larger number means a higher weight
        HTTP_GET {  # check the real server over HTTP
            url {
                path /
                status_code 200  # a 200 response means the real server is alive
            }
            connect_timeout 3  # connection timeout in seconds
            nb_get_retry 3  # number of retries after a failed check
            delay_before_retry 3  # delay in seconds before each retry
        }
    }
    real_server 172.16.31.51 8080 {
        weight 1
        HTTP_GET {
            url {
                path /
                status_code 200
            }
            connect_timeout 3
            nb_get_retry 3
            delay_before_retry 3
        }
    }
}
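The interplay of `connect_timeout`, `nb_get_retry` and `delay_before_retry` in the `HTTP_GET` block can be pictured as a retry loop. The sketch below only illustrates that idea in shell; it is not how keepalived is implemented, and `check_with_retry` is a hypothetical name.

```shell
#!/bin/sh
# check_with_retry RETRIES DELAY CMD [ARGS...]
# Runs CMD. On failure it waits DELAY seconds and tries again, for at most
# RETRIES extra attempts. Returns 0 as soon as CMD succeeds, 1 otherwise.
check_with_retry() {
    retries=$1
    delay=$2
    shift 2
    attempt=0
    while :; do
        if "$@"; then
            return 0                    # check passed
        fi
        if [ "$attempt" -ge "$retries" ]; then
            return 1                    # retries exhausted: treat the node as down
        fi
        attempt=$(( attempt + 1 ))
        sleep "$delay"
    done
}
```

A manual probe of one back end could then look like `check_with_retry 3 3 curl -s -f -o /dev/null --max-time 3 http://172.16.31.50:8080/`. Note that `curl -f` only fails on HTTP errors (status 400 and above), which is looser than the exact `status_code 200` match in the configuration.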

3. Copy the main configuration file from the Master server (proxy) to the Backup server (proxy2) and adjust it slightly

# scp /etc/keepalived/keepalived.conf proxy2:/etc/keepalived/

###### Change the following two settings

[root@proxy2 ~]# vim /etc/keepalived/keepalived.conf

state BACKUP

priority 99
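The two changes can also be scripted instead of edited by hand. This is a minimal sketch under the assumption that the file uses exactly the values from this article (`state MASTER`, `priority 100`); `make_backup_conf` is a hypothetical helper name.

```shell
#!/bin/sh
# make_backup_conf SRC DST
# Writes a copy of SRC to DST with the VRRP role switched to BACKUP and the
# priority lowered from 100 to 99. All other lines are copied unchanged.
make_backup_conf() {
    awk '
        $1 == "state"    && $2 == "MASTER" { sub(/MASTER/, "BACKUP") }
        $1 == "priority" && $2 == "100"    { sub(/100/, "99") }
        { print }
    ' "$1" > "$2"
}
```

For example, `make_backup_conf /etc/keepalived/keepalived.conf /tmp/keepalived.backup.conf` produces a file that can then be copied to proxy2 with scp.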

4. Start the Keepalived service on both servers and enable it at boot

# chkconfig --add keepalived ; ssh proxy2 "chkconfig --add keepalived"

# chkconfig keepalived on ; ssh proxy2 "chkconfig keepalived on"

# chkconfig --list keepalived; ssh proxy2 "chkconfig --list keepalived"

keepalived 0:off 1:off 2:on 3:on 4:on 5:on 6:off

keepalived 0:off 1:off 2:on 3:on 4:on 5:on 6:off

Start the service:

# service keepalived start; ssh proxy2 "service keepalived start"

5. Check the logs on each node after the service starts:

Log on the MASTER node:

[root@proxy keepalived]# tail -f /var/log/messages

Jan 17 15:57:42 proxy Keepalived[15019]: Starting Keepalived v1.2.13 (10/15,2014)

Jan 17 15:57:42 proxy Keepalived[15021]: Starting Healthcheck child process, pid=15024

Jan 17 15:57:42 proxy Keepalived[15021]: Starting VRRP child process, pid=15025

Jan 17 15:57:42 proxy Keepalived_vrrp[15025]: Netlink reflector reports IP 172.16.31.52 added

Jan 17 15:57:42 proxy Keepalived_vrrp[15025]: Netlink reflector reports IP fe80::a00:27ff:fe3b:2360 added

Jan 17 15:57:42 proxy Keepalived_vrrp[15025]: Registering Kernel netlink reflector

Jan 17 15:57:42 proxy Keepalived_vrrp[15025]: Registering Kernel netlink command channel

Jan 17 15:57:42 proxy Keepalived_vrrp[15025]: Registering gratuitous ARP shared channel

Jan 17 15:57:42 proxy Keepalived_healthcheckers[15024]: Netlink reflector reports IP 172.16.31.52 added

Jan 17 15:57:42 proxy Keepalived_healthcheckers[15024]: Netlink reflector reports IP fe80::a00:27ff:fe3b:2360 added

Jan 17 15:57:42 proxy Keepalived_healthcheckers[15024]: Registering Kernel netlink reflector

Jan 17 15:57:42 proxy Keepalived_healthcheckers[15024]: Registering Kernel netlink command channel

Jan 17 15:57:42 proxy Keepalived_vrrp[15025]: Opening file '/etc/keepalived/keepalived.conf'.

Jan 17 15:57:42 proxy Keepalived_vrrp[15025]: Configuration is using : 63202 Bytes

Jan 17 15:57:42 proxy Keepalived_vrrp[15025]: Using LinkWatch kernel netlink reflector…

Jan 17 15:57:42 proxy Keepalived_healthcheckers[15024]: Opening file '/etc/keepalived/keepalived.conf'.

Jan 17 15:57:42 proxy Keepalived_healthcheckers[15024]: Configuration is using : 16989 Bytes

Jan 17 15:57:42 proxy Keepalived_vrrp[15025]: VRRP sockpool: [ifindex(2), proto(112), unicast(0), fd(10,11)]

Jan 17 15:57:42 proxy Keepalived_healthcheckers[15024]: Using LinkWatch kernel netlink reflector…

Jan 17 15:57:42 proxy Keepalived_healthcheckers[15024]: Activating healthchecker for service [172.16.31.50]:8080

Jan 17 15:57:42 proxy Keepalived_healthcheckers[15024]: Activating healthchecker for service [172.16.31.51]:8080

Jan 17 15:57:43 proxy Keepalived_vrrp[15025]: VRRP_Instance(VI_1) Transition to MASTER STATE

Jan 17 15:57:44 proxy Keepalived_vrrp[15025]: VRRP_Instance(VI_1) Entering MASTER STATE

Jan 17 15:57:44 proxy Keepalived_vrrp[15025]: VRRP_Instance(VI_1) setting protocol VIPs.

Jan 17 15:57:44 proxy Keepalived_healthcheckers[15024]: Netlink reflector reports IP 172.16.31.188 added

Jan 17 15:57:44 proxy Keepalived_vrrp[15025]: VRRP_Instance(VI_1) Sending gratuitous ARPs on eth0 for 172.16.31.188

Log on the BACKUP node:

[root@proxy2 keepalived]# tail -f /var/log/messages

Jan 17 15:57:42 proxy2 Keepalived[15005]: Starting Keepalived v1.2.13 (10/15,2014)

Jan 17 15:57:42 proxy2 Keepalived[15007]: Starting Healthcheck child process, pid=15009

Jan 17 15:57:42 proxy2 Keepalived[15007]: Starting VRRP child process, pid=15010

Jan 17 15:57:42 proxy2 Keepalived_vrrp[15010]: Netlink reflector reports IP 172.16.31.53 added

Jan 17 15:57:42 proxy2 Keepalived_healthcheckers[15009]: Netlink reflector reports IP 172.16.31.53 added

Jan 17 15:57:42 proxy2 Keepalived_vrrp[15010]: Netlink reflector reports IP fe80::a00:27ff:fe6e:bd28 added

Jan 17 15:57:42 proxy2 Keepalived_vrrp[15010]: Registering Kernel netlink reflector

Jan 17 15:57:42 proxy2 Keepalived_vrrp[15010]: Registering Kernel netlink command channel

Jan 17 15:57:42 proxy2 Keepalived_vrrp[15010]: Registering gratuitous ARP shared channel

Jan 17 15:57:42 proxy2 Keepalived_healthcheckers[15009]: Netlink reflector reports IP fe80::a00:27ff:fe6e:bd28 added

Jan 17 15:57:42 proxy2 Keepalived_healthcheckers[15009]: Registering Kernel netlink reflector

Jan 17 15:57:42 proxy2 Keepalived_healthcheckers[15009]: Registering Kernel netlink command channel

Jan 17 15:57:42 proxy2 Keepalived_vrrp[15010]: Opening file '/etc/keepalived/keepalived.conf'.

Jan 17 15:57:42 proxy2 Keepalived_healthcheckers[15009]: Opening file '/etc/keepalived/keepalived.conf'.

Jan 17 15:57:42 proxy2 Keepalived_healthcheckers[15009]: Configuration is using : 16991 Bytes

Jan 17 15:57:42 proxy2 Keepalived_vrrp[15010]: Configuration is using : 63204 Bytes

Jan 17 15:57:42 proxy2 Keepalived_vrrp[15010]: Using LinkWatch kernel netlink reflector…

Jan 17 15:57:42 proxy2 Keepalived_vrrp[15010]: VRRP_Instance(VI_1) Entering BACKUP STATE

Jan 17 15:57:42 proxy2 Keepalived_vrrp[15010]: VRRP sockpool: [ifindex(2), proto(112), unicast(0), fd(10,11)]

Jan 17 15:57:42 proxy2 Keepalived_healthcheckers[15009]: Using LinkWatch kernel netlink reflector…

Jan 17 15:57:42 proxy2 Keepalived_healthcheckers[15009]: Activating healthchecker for service [172.16.31.50]:8080

Jan 17 15:57:42 proxy2 Keepalived_healthcheckers[15009]: Activating healthchecker for service [172.16.31.51]:8080

6. Enable IP forwarding on the Master and Backup nodes

# sed -i 's/net.ipv4.ip_forward = 0/net.ipv4.ip_forward = 1/g' /etc/sysctl.conf

# sysctl -p
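The sed command only has an effect when /etc/sysctl.conf already contains a line written exactly as `net.ipv4.ip_forward = 0`. A slightly more defensive variant is sketched below; `set_sysctl_conf` is a hypothetical helper, and it assumes GNU sed for the `-i` option.

```shell
#!/bin/sh
# set_sysctl_conf KEY VALUE [FILE]
# Sets "KEY = VALUE" in FILE (default: /etc/sysctl.conf). An existing
# uncommented entry for KEY is rewritten; otherwise a new line is appended.
set_sysctl_conf() {
    key=$1
    value=$2
    file=${3:-/etc/sysctl.conf}
    if grep -Eq "^[[:space:]]*${key}[[:space:]]*=" "$file"; then
        sed -i "s|^[[:space:]]*${key}[[:space:]]*=.*|${key} = ${value}|" "$file"
    else
        printf '%s = %s\n' "$key" "$value" >> "$file"
    fi
}
```

Typical use would be `set_sysctl_conf net.ipv4.ip_forward 1 && sysctl -p`, where `sysctl -p` loads the file so the change takes effect without a reboot.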


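II. Install and configure the back-end tomcat server nodes

1. Configure the back-end tomcat server nodes;

For the detailed configuration see my previous blog post; these experiments form a series;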

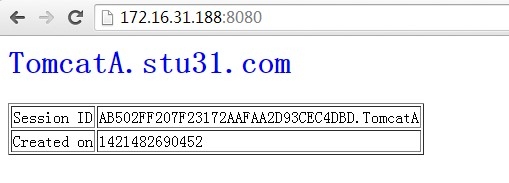

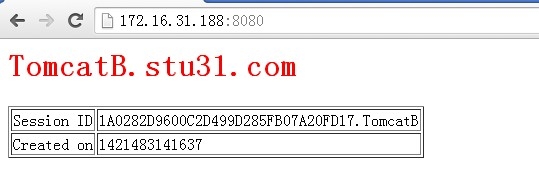

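After configuration, the access test looks like this:

tom1 node:

tom2 node:

Load-balanced access works;

2. Give both real servers a SysV-style script that sets the kernel parameters and the virtual IP, then run it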

# vim rs.sh

#!/bin/bash
# Configure or remove the VIP and the ARP kernel settings on an LVS-DR real server.
. /etc/rc.d/init.d/functions
VIP=172.16.31.188
host=`/bin/hostname`

case "$1" in
start)
    # Start LVS-DR real server on this machine.
    /sbin/ifconfig lo down
    /sbin/ifconfig lo up
    # Do not answer or announce ARP for the VIP held on lo.
    echo 1 > /proc/sys/net/ipv4/conf/lo/arp_ignore
    echo 2 > /proc/sys/net/ipv4/conf/lo/arp_announce
    echo 1 > /proc/sys/net/ipv4/conf/all/arp_ignore
    echo 2 > /proc/sys/net/ipv4/conf/all/arp_announce
    /sbin/ifconfig lo:0 $VIP broadcast $VIP netmask 255.255.255.255 up
    /sbin/route add -host $VIP dev lo:0
    ;;
stop)
    # Stop LVS-DR real server loopback device(s).
    /sbin/ifconfig lo:0 down
    echo 0 > /proc/sys/net/ipv4/conf/lo/arp_ignore
    echo 0 > /proc/sys/net/ipv4/conf/lo/arp_announce
    echo 0 > /proc/sys/net/ipv4/conf/all/arp_ignore
    echo 0 > /proc/sys/net/ipv4/conf/all/arp_announce
    ;;
status)
    # Status of LVS-DR real server.
    islothere=`/sbin/ifconfig lo:0 | grep -F "$VIP"`
    # Match on the VIP only; the Iface column may show lo rather than lo:0.
    isrothere=`netstat -rn | grep -F "$VIP"`
    if [ -z "$islothere" ] || [ -z "$isrothere" ]; then
        # Either the route or the lo:0 device
        # not found.
        echo "LVS-DR real server Stopped."
    else
        echo "LVS-DR real server Running."
    fi
    ;;
*)
    # Invalid entry.
    echo "$0: Usage: $0 {start|status|stop}"
    exit 1
    ;;
esac

Note: VIP in the script is the virtual IP address
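The four values that rs.sh writes under /proc are lost on reboot. One way to make them permanent is to put the matching entries into /etc/sysctl.conf. The sketch below prints those entries; `arp_sysctl_lines` is a hypothetical helper name, and appending its output to the file is left as a manual step.

```shell
#!/bin/sh
# arp_sysctl_lines
# Prints the sysctl.conf entries that correspond to the four /proc writes
# made by "rs.sh start" (arp_ignore=1 and arp_announce=2 on lo and all).
arp_sysctl_lines() {
    for iface in lo all; do
        echo "net.ipv4.conf.${iface}.arp_ignore = 1"
        echo "net.ipv4.conf.${iface}.arp_announce = 2"
    done
}
```

For example, `arp_sysctl_lines >> /etc/sysctl.conf && sysctl -p` on each real server. The VIP on lo:0 still has to be brought up at boot, for instance by running rs.sh from an init script.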

3. Put a copy of the script on both nodes, then run it:

# sh rs.sh start

4. Check the state afterwards:

# sh rs.sh status

LVS-DR real server Running.

Check whether the VIP has been configured on each node:

tom1 node:

[root@tom1 ~]# ip addr show lo

1: lo: <LOOPBACK,UP,LOWER_UP> mtu 65536 qdisc noqueue state UNKNOWN

link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00

inet 127.0.0.1/8 scope host lo

inet 172.16.31.188/32 brd 172.16.31.188 scope global lo:0

inet6 ::1/128 scope host

valid_lft forever preferred_lft forever

tom2 node:

[root@tom2 ~]# ip addr show lo

1: lo: <LOOPBACK,UP,LOWER_UP> mtu 65536 qdisc noqueue state UNKNOWN

link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00

inet 127.0.0.1/8 scope host lo

inet 172.16.31.188/32 brd 172.16.31.188 scope global lo:0

inet6 ::1/128 scope host

valid_lft forever preferred_lft forever

5. Check the ipvs rules on the MASTER node, proxy:

[root@proxy keepalived]# ipvsadm -L -n

IP Virtual Server version 1.2.1 (size=4096)

Prot LocalAddress:Port Scheduler Flags

-> RemoteAddress:Port Forward Weight ActiveConn InActConn

TCP 172.16.31.188:0 rr persistent 50

-> 172.16.31.50:8080 Route 1 0 0

-> 172.16.31.51:8080 Route 1 0 0

The rules show the virtual IP and port, the rr scheduling algorithm, and two real servers
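When the same check is repeated during the failover tests, it can help to extract just the real servers from the `ipvsadm -L -n` output. A minimal sketch, assuming IPv4 addresses as in this article; `list_real_servers` is a hypothetical helper name.

```shell
#!/bin/sh
# list_real_servers
# Reads "ipvsadm -L -n" output on stdin and prints the address:port of each
# real server: lines whose first field is "->" and whose second field starts
# with a digit (this skips the "-> RemoteAddress:Port" header line).
list_real_servers() {
    awk '$1 == "->" && $2 ~ /^[0-9]/ { print $2 }'
}
```

Usage: `ipvsadm -L -n | list_real_servers`. With both back ends healthy it prints two lines, one per tomcat node.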

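III. Access test and failure simulation

1. Access test:

As shown above, the scheduling algorithm is rr. Because persistence is set to 50 seconds, requests from the same client stay on one real server until the persistence entry expires, so you may need to repeat the request several times, wait for the entry to expire, or test from another client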
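Repeated requests are easier to issue from a shell than from a browser. The loop below is a small sketch: `probe_vip` and the `FETCH` override are hypothetical names, curl is assumed to be installed on the client, and the URL in the usage line assumes the service is reached on port 8080 as in the ipvs rules.

```shell
#!/bin/sh
# probe_vip URL COUNT
# Fetches URL COUNT times and prints the first line of each response.
# FETCH is an optional override for the fetch command; it defaults to curl.
probe_vip() {
    url=$1
    count=$2
    i=0
    while [ "$i" -lt "$count" ]; do
        ${FETCH:-curl -s --max-time 3} "$url" | head -n 1
        i=$(( i + 1 ))
    done
}
```

For example, `probe_vip http://172.16.31.188:8080/ 6`. With `persistent 50` in effect, all six answers are expected to come from the same back end unless the test pages are fetched from different client addresses.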

2. Simulate a Master failure: stop the Keepalived service on the Master and check whether the Backup server takes over all services

[root@proxy keepalived]# service keepalived stop

Stopping keepalived: [OK]

3. Check the system logs after the switchover:

Log on the MASTER node:

[root@proxy keepalived]# tail -f /var/log/messages

Jan 17 16:20:40 proxy Keepalived[15021]: Stopping Keepalived v1.2.13 (10/15,2014)

Jan 17 16:20:40 proxy Keepalived_vrrp[15025]: VRRP_Instance(VI_1) sending 0 priority

Jan 17 16:20:40 proxy Keepalived_vrrp[15025]: VRRP_Instance(VI_1) removing protocol VIPs.

Jan 17 16:20:40 proxy Keepalived_healthcheckers[15024]: Netlink reflector reports IP 172.16.31.188 removed

Jan 17 16:20:40 proxy Keepalived_healthcheckers[15024]: Removing service [172.16.31.50]:8080 from VS [172.16.31.188]:0

Jan 17 16:20:40 proxy Keepalived_healthcheckers[15024]: Removing service [172.16.31.51]:8080 from VS [172.16.31.188]:0

Jan 17 16:20:40 proxy kernel: IPVS: __ip_vs_del_service: enter

Log on the BACKUP node:

[root@proxy2 keepalived]# tail -f /var/log/messages

Jan 17 16:20:41 proxy2 Keepalived_vrrp[15010]: VRRP_Instance(VI_1) Transition to MASTER STATE

Jan 17 16:20:42 proxy2 Keepalived_vrrp[15010]: VRRP_Instance(VI_1) Entering MASTER STATE

Jan 17 16:20:42 proxy2 Keepalived_vrrp[15010]: VRRP_Instance(VI_1) setting protocol VIPs.

Jan 17 16:20:42 proxy2 Keepalived_healthcheckers[15009]: Netlink reflector reports IP 172.16.31.188 added

Jan 17 16:20:42 proxy2 Keepalived_vrrp[15010]: VRRP_Instance(VI_1) Sending gratuitous ARPs on eth0 for 172.16.31.188

Jan 17 16:20:47 proxy2 Keepalived_vrrp[15010]: VRRP_Instance(VI_1) Sending gratuitous ARPs on eth0 for 172.16.31.188

4. Check the VIP state and the IPVS rules on each node:

Original MASTER node:

[root@proxy keepalived]# ip addr show

1: lo: <LOOPBACK,UP,LOWER_UP> mtu 65536 qdisc noqueue state UNKNOWN

link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00

inet 127.0.0.1/8 scope host lo

inet6 ::1/128 scope host

valid_lft forever preferred_lft forever

2: eth0: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc pfifo_fast state UP qlen 1000

link/ether 08:00:27:3b:23:60 brd ff:ff:ff:ff:ff:ff

inet 172.16.31.52/16 brd 172.16.255.255 scope global eth0

inet6 fe80::a00:27ff:fe3b:2360/64 scope link

valid_lft forever preferred_lft forever

[root@proxy keepalived]# ipvsadm -L -n

IP Virtual Server version 1.2.1 (size=4096)

Prot LocalAddress:Port Scheduler Flags

-> RemoteAddress:Port Forward Weight ActiveConn InActConn

As shown above, the virtual IP and the LVS rules have been removed from the Master server

BACKUP node:

[root@proxy2 keepalived]# ip addr show

1: lo: <LOOPBACK,UP,LOWER_UP> mtu 65536 qdisc noqueue state UNKNOWN

link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00

inet 127.0.0.1/8 scope host lo

inet6 ::1/128 scope host

valid_lft forever preferred_lft forever

2: eth0: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc pfifo_fast state UP qlen 1000

link/ether 08:00:27:6e:bd:28 brd ff:ff:ff:ff:ff:ff

inet 172.16.31.53/16 brd 172.16.255.255 scope global eth0

inet 172.16.31.188/32 scope global eth0

inet6 fe80::a00:27ff:fe6e:bd28/64 scope link

valid_lft forever preferred_lft forever

As shown above, the virtual IP is now active on the Backup server
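Scanning the `ip addr show` output by eye is fine for a one-off test. For repeated failover tests, the check can be reduced to a yes/no answer. A minimal sketch; `has_vip` is a hypothetical helper name.

```shell
#!/bin/sh
# has_vip VIP
# Reads "ip addr show" output on stdin and succeeds when one of the
# "inet ADDRESS/PREFIX" lines carries exactly the given VIP.
has_vip() {
    awk -v vip="$1" '
        $1 == "inet" { split($2, a, "/"); if (a[1] == vip) found = 1 }
        END { exit !found }     # 0 when the VIP was seen, 1 otherwise
    '
}
```

Usage: `ip addr show eth0 | has_vip 172.16.31.188 && echo "this node holds the VIP"`. Running it on both schedulers shows at a glance which one is currently active.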

[root@proxy2 keepalived]# ipvsadm -L -n

IP Virtual Server version 1.2.1 (size=4096)

Prot LocalAddress:Port Scheduler Flags

-> RemoteAddress:Port Forward Weight ActiveConn InActConn

TCP 172.16.31.188:0 rr persistent 50

-> 172.16.31.50:8080 Route 1 0 0

-> 172.16.31.51:8080 Route 1 0 0

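The LVS rules have also been configured on the Backup server

5. Test access again to confirm that the service is still available

6. Once the Master server is repaired and back online, the virtual IP and the LVS rules are configured on the Master again and the virtual IP is removed from the Backup server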

[root@proxy keepalived]# service keepalived start

Starting keepalived: [OK]

Check the startup log:

Log on the MASTER node:

[root@proxy keepalived]# tail -f /var/log/messages

Jan 17 16:34:50 proxy Keepalived_vrrp[15366]: VRRP_Instance(VI_1) Transition to MASTER STATE

Jan 17 16:34:50 proxy Keepalived_vrrp[15366]: VRRP_Instance(VI_1) Received lower prio advert, forcing new election

Jan 17 16:34:51 proxy Keepalived_vrrp[15366]: VRRP_Instance(VI_1) Entering MASTER STATE

Jan 17 16:34:51 proxy Keepalived_vrrp[15366]: VRRP_Instance(VI_1) setting protocol VIPs.

Jan 17 16:34:51 proxy Keepalived_vrrp[15366]: VRRP_Instance(VI_1) Sending gratuitous ARPs on eth0 for 172.16.31.188

Jan 17 16:34:51 proxy Keepalived_healthcheckers[15365]: Netlink reflector reports IP 172.16.31.188 added

Jan 17 16:34:56 proxy Keepalived_vrrp[15366]: VRRP_Instance(VI_1) Sending gratuitous ARPs on eth0 for 172.16.31.188

The node entered the MASTER state automatically;

Log on the BACKUP node:

[root@proxy2 keepalived]# tail -f /var/log/messages

Jan 17 16:34:50 proxy2 Keepalived_vrrp[15258]: VRRP_Instance(VI_1) Received higher prio advert

Jan 17 16:34:50 proxy2 Keepalived_vrrp[15258]: VRRP_Instance(VI_1) Entering BACKUP STATE

Jan 17 16:34:50 proxy2 Keepalived_vrrp[15258]: VRRP_Instance(VI_1) removing protocol VIPs.

Jan 17 16:34:50 proxy2 Keepalived_healthcheckers[15257]: Netlink reflector reports IP 172.16.31.188 removed

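The node entered the BACKUP state automatically and removed the VIP;

Check the VIP and the IPVS rules on each node:

On the MASTER node the VIP is present and the IPVS rules have been created: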

[root@proxy keepalived]# ip addr show

1: lo: <LOOPBACK,UP,LOWER_UP> mtu 65536 qdisc noqueue state UNKNOWN

link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00

inet 127.0.0.1/8 scope host lo

inet6 ::1/128 scope host

valid_lft forever preferred_lft forever

2: eth0: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc pfifo_fast state UP qlen 1000

link/ether 08:00:27:3b:23:60 brd ff:ff:ff:ff:ff:ff

inet 172.16.31.52/16 brd 172.16.255.255 scope global eth0

inet 172.16.31.188/32 scope global eth0

inet6 fe80::a00:27ff:fe3b:2360/64 scope link

valid_lft forever preferred_lft forever

[root@proxy keepalived]# ipvsadm -L -n

IP Virtual Server version 1.2.1 (size=4096)

Prot LocalAddress:Port Scheduler Flags

-> RemoteAddress:Port Forward Weight ActiveConn InActConn

TCP 172.16.31.188:0 rr persistent 50

-> 172.16.31.50:8080 Route 1 0 0

-> 172.16.31.51:8080 Route 1 0 0

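The BACKUP node demoted itself and removed the VIP automatically. The ipvs rules are still present, but that does no harm: without the VIP address the rules alone have no effect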

[root@proxy2 keepalived]# ip addr show

1: lo: <LOOPBACK,UP,LOWER_UP> mtu 65536 qdisc noqueue state UNKNOWN

link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00

inet 127.0.0.1/8 scope host lo

inet6 ::1/128 scope host

valid_lft forever preferred_lft forever

2: eth0: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc pfifo_fast state UP qlen 1000

link/ether 08:00:27:6e:bd:28 brd ff:ff:ff:ff:ff:ff

inet 172.16.31.53/16 brd 172.16.255.255 scope global eth0

inet6 fe80::a00:27ff:fe6e:bd28/64 scope link

valid_lft forever preferred_lft forever

[root@proxy2 keepalived]# ipvsadm -L -n

IP Virtual Server version 1.2.1 (size=4096)

Prot LocalAddress:Port Scheduler Flags

-> RemoteAddress:Port Forward Weight ActiveConn InActConn

TCP 172.16.31.188:0 rr persistent 50

-> 172.16.31.50:8080 Route 1 0 0

-> 172.16.31.51:8080 Route 1 0 0

7. If a back-end real server fails, its entry is removed from the LVS rules

Stop the tomcat service on the back-end tom1 node:

[root@tom1 ~]# catalina.sh stop

Using CATALINA_BASE: /usr/local/tomcat

Using CATALINA_HOME: /usr/local/tomcat

Using CATALINA_TMPDIR: /usr/local/tomcat/temp

Using JRE_HOME: /usr/java/latest

Using CLASSPATH: /usr/local/tomcat/bin/bootstrap.jar:/usr/local/tomcat/bin/tomcat-juli.jar

[root@tom1 ~]#

Check the logs on the LVS nodes:

MASTER node:

A connection error is logged and an alert mail is sent:

[root@proxy keepalived]# tail -f /var/log/messages

Jan 17 16:40:39 proxy Keepalived_healthcheckers[15365]: Error connecting server [172.16.31.50]:8080.

Jan 17 16:40:39 proxy Keepalived_healthcheckers[15365]: Removing service [172.16.31.50]:8080 from VS [172.16.31.188]:0

Jan 17 16:40:39 proxy Keepalived_healthcheckers[15365]: Remote SMTP server [127.0.0.1]:25 connected.

Jan 17 16:40:39 proxy Keepalived_healthcheckers[15365]: SMTP alert successfully sent.

BACKUP node:

A connection error is logged and an alert mail is sent:

[root@proxy2 keepalived]# tail -f /var/log/messages

Jan 17 16:40:42 proxy2 Keepalived_healthcheckers[15257]: Error connecting server [172.16.31.50]:8080.

Jan 17 16:40:42 proxy2 Keepalived_healthcheckers[15257]: Removing service [172.16.31.50]:8080 from VS [172.16.31.188]:0

Jan 17 16:40:42 proxy2 Keepalived_healthcheckers[15257]: Remote SMTP server [127.0.0.1]:25 connected.

Jan 17 16:40:42 proxy2 Keepalived_healthcheckers[15257]: SMTP alert successfully sent.

Then check the ipvs rules on the MASTER node:

[root@proxy keepalived]# ipvsadm -L -n

IP Virtual Server version 1.2.1 (size=4096)

Prot LocalAddress:Port Scheduler Flags

-> RemoteAddress:Port Forward Weight ActiveConn InActConn

TCP 172.16.31.188:0 rr persistent 50

-> 172.16.31.51:8080 Route 1 0 0

You have new mail in /var/spool/mail/root

The failed back-end tomcat server has been removed from the rules, and an alert mail has arrived. Read the mail:

[root@proxy keepalived]# mail

Heirloom Mail version 12.4 7/29/08. Type ? for help.

"/var/spool/mail/root": 16 messages 12 new 14 unread

(omitted) ...

N 12 admin@stu31.com Sat Jan 17 15:44 17/594 "[LVS_DEVEL] Realserver [172.16.31.51]:80 - DOWN"

N 13 admin@stu31.com Sat Jan 17 15:44 17/594 "[LVS_DEVEL] Realserver [172.16.31.50]:80 - DOWN"

N 14 admin@stu31.com Sat Jan 17 15:50 17/594 "[LVS_DEVEL] Realserver [172.16.31.51]:80 - DOWN"

N 15 admin@stu31.com Sat Jan 17 15:50 17/594 "[LVS_DEVEL] Realserver [172.16.31.50]:80 - DOWN"

N 16 admin@stu31.com Sat Jan 17 16:40 17/596 "[LVS_DEVEL] Realserver [172.16.31.50]:8080 - DOWN"

& 16

Message 16:

From admin@stu31.com Sat Jan 17 16:40:39 2015

Return-Path: <admin@stu31.com>

X-Original-To: root@localhost

Delivered-To: root@localhost.stu31.com

Date: Sat, 17 Jan 2015 08:40:39 +0000

From: admin@stu31.com

Subject: [LVS_DEVEL] Realserver [172.16.31.50]:8080 - DOWN

X-Mailer: Keepalived

To: root@localhost.stu31.com

Status: R

=> CHECK failed on service : connection error <=

& quit

Held 16 messages in /var/spool/mail/root

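Note: I also tested how the master and backup LVS nodes are elected when their priorities are equal. With both priorities set to 100, stopping keepalived on the MASTER node moved the VIP and the IPVS rules to the BACKUP node automatically. After keepalived was started again on the MASTER node, however, the VIP stayed on the BACKUP node while the ipvs rules were created on the MASTER node;

So in production, pay close attention to the priority settings: the priority of the master node must be higher than that of the backup node;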
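The priority rule can be checked before the service is started. The sketch below reads the `priority` value from a configuration file and compares two values; both function names are hypothetical, and it assumes a single `vrrp_instance` per file, as in this article.

```shell
#!/bin/sh
# vrrp_priority FILE
# Prints the value of the first "priority N" line found in FILE.
vrrp_priority() {
    awk '$1 == "priority" { print $2; exit }' "$1"
}

# priority_ok MASTER_PRIO BACKUP_PRIO
# Succeeds only when the master value is strictly higher than the backup value.
priority_ok() {
    [ "$1" -gt "$2" ]
}
```

For example, on proxy: `priority_ok "$(vrrp_priority /etc/keepalived/keepalived.conf)" "$(ssh proxy2 "awk '\$1 == \"priority\" { print \$2; exit }' /etc/keepalived/keepalived.conf")" || echo "master priority is not higher than backup"`.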

This completes the highly available load-balancing cluster built with LVS+Keepalived

